Guide to Large Language Models (LLMs)

by Stephen M. Walker II, Co-Founder / CEO

What is a Large Language Model?

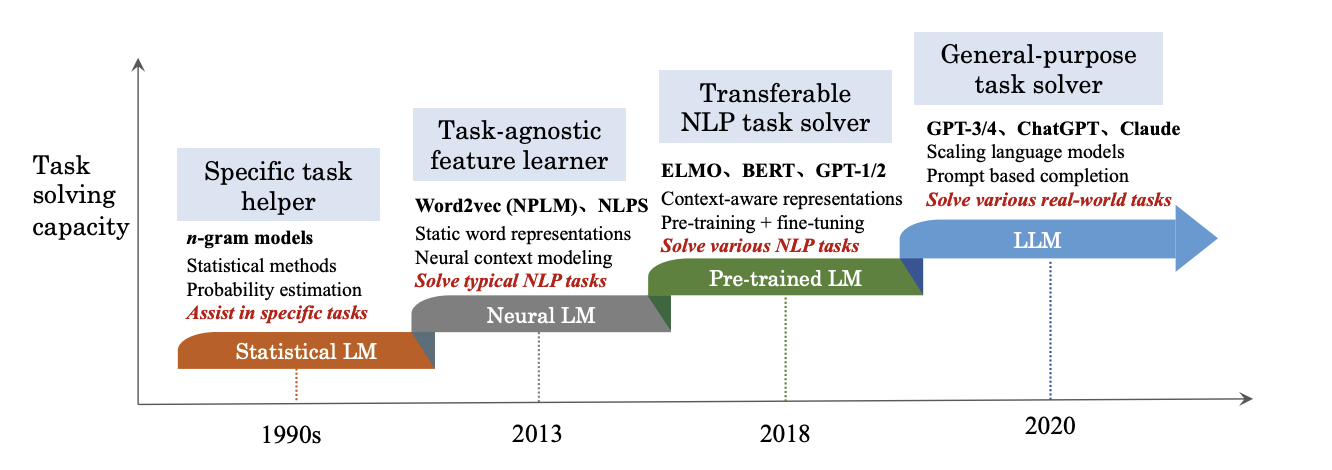

A Large Language Model (LLM) is a type of artificial intelligence (AI) algorithm that uses deep learning techniques and massive data sets to achieve general-purpose language understanding and generation. LLMs are pre-trained on vast amounts of data, often including sources like the Common Crawl and Wikipedia.

LLMs are designed to recognize, summarize, translate, predict, and generate text and other forms of content based on the knowledge gained from their training.

Key characteristics of LLMs include:

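- Transformer Model Architecture — LLMs are based on transformer models, which use stacked layers of attention and feed-forward networks to extract meaning from a sequence of text and model the relationships between words. The original transformer pairs an encoder with a decoder; many LLMs, including the GPT family, use only the decoder.

- Attention Mechanism — This mechanism allows LLMs to capture long-range dependencies between words, enabling them to understand context.

- Autoregressive Text Generation — LLMs generate text one token at a time, with each new token conditioned on the prompt and all previously generated tokens. Because they learn from diverse training data, the same process lets them produce text in different styles and languages.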
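Autoregressive generation can be illustrated with a toy example. The sketch below assumes a hypothetical `next_token_probs` function standing in for a trained model, backed here by a hard-coded bigram table. A real LLM conditions on the whole preceding sequence rather than only the last token, but the generation loop has the same shape: predict a distribution, pick a token, append it, repeat.

```python
# Toy stand-in for a trained model: maps the previous token to a
# probability distribution over the next token (a bigram table).
# A real LLM conditions on the entire prefix, not just the last token.
BIGRAM_PROBS = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 1.0},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"ran": 0.8, "</s>": 0.2},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}


def next_token_probs(prefix):
    """Hypothetical model call: next-token distribution given the prefix."""
    return BIGRAM_PROBS[prefix[-1]]


def generate_greedy(prompt, max_new_tokens=10):
    """Autoregressive decoding: each new token depends on the tokens so far."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        # Greedy decoding: take the single most probable next token.
        next_token = max(probs, key=probs.get)
        if next_token == "</s>":  # end-of-sequence marker
            break
        tokens.append(next_token)
    return tokens


print(generate_greedy(["<s>"]))
```

Greedy decoding is used here only because it is deterministic and easy to follow; production systems usually sample from the distribution instead.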

Some popular examples of LLMs are GPT-3 and GPT-4 from OpenAI, LLaMA 2 from Meta, and Gemini from Google. These models have the potential to disrupt various industries, including search engines, natural language processing, healthcare, robotics, and code generation.[1]

How are LLMs built and trained?

Building and training Large Language Models (LLMs) is a complex, multi-stage process:

- Data Collection — LLMs require huge datasets of text to train on, drawn from books, websites, social media posts, and more. The raw text is cleaned (for example, deduplicated and filtered) and processed into a format the model can learn from.

- Model Architecture — LLMs are deep neural networks with billions of parameters. They are built on transformer variants such as encoder-decoder, causal decoder, and prefix decoder designs, and the choice of architecture significantly shapes the model's capabilities.

- Training — LLMs are trained with large amounts of computational power and optimization algorithms. Training tunes the parameters so the model predicts text statistically, and more training generally leads to more capable models.

- Scaling — By scaling up data, parameters, and compute power, companies have produced LLMs with capabilities approaching human language use.
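The training objective can be made concrete. The sketch below, in plain Python with made-up scores, computes the standard next-token cross-entropy loss: at each position, the model's scores over the vocabulary are compared against the token that actually comes next. Real training computes this over large batches of long sequences in a framework such as PyTorch or JAX and minimizes it by gradient descent; the function names here are illustrative, not any library's API.

```python
import math


def log_softmax(logits):
    """Numerically stable log-probabilities from raw scores (logits)."""
    m = max(logits)
    log_total = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_total for x in logits]


def next_token_loss(token_ids, logits_per_position):
    """Average cross-entropy for predicting token t+1 from position t.

    token_ids: one training sequence as integer token IDs.
    logits_per_position: one row of vocabulary scores per input position,
        i.e. len(token_ids) - 1 rows (the last token has no successor).
    """
    targets = token_ids[1:]  # position t is trained to predict token t+1
    if len(logits_per_position) != len(targets):
        raise ValueError("need exactly one row of logits per predicted token")
    total = sum(-log_softmax(row)[target]
                for row, target in zip(logits_per_position, targets))
    return total / len(targets)


# A model that gives every entry of a 4-token vocabulary the same score
# has loss ln(4), roughly 1.386, whatever the sequence.
uniform = [[0.0, 0.0, 0.0, 0.0]] * 3
print(next_token_loss([0, 2, 1, 3], uniform))

# Scores that strongly favor the true next tokens give a loss near zero.
confident = [[0.0, 0.0, 10.0, 0.0], [0.0, 10.0, 0.0, 0.0], [0.0, 0.0, 0.0, 10.0]]
print(next_token_loss([0, 2, 1, 3], confident))
```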

Large Language Model Operations (LLMOps) concentrates on the effective deployment, monitoring, and upkeep of LLMs in production. It encompasses model versioning, scaling, and performance enhancement.

How are LLMs benchmarked and evaluated?

Large Language Models (LLMs) are evaluated using various benchmarks to assess their performance on different tasks.

Model Performance

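The table below compares leading models on accuracy, reported as a fraction between 0 and 1 and drawn from benchmarks spanning coding, math, science, the humanities, and other domains, together with quality ratings for coherence, groundedness, fluency, and relevance (higher is better). A double dash (--) marks a score that was not reported.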

| Model | Creator | Accuracy | Coherence | Groundedness | Fluency | Relevance |
|---|---|---|---|---|---|---|
| gpt-4-turbo-2024-04-09 | OpenAI | 0.877 | 4.974 | -- | 4.947 | 4.039 |
| gpt-4-32k-0314 | OpenAI | 0.875 | 4.93 | 4.202 | 4.962 | 4.104 |
| gpt-4-0314 | OpenAI | 0.874 | 4.929 | 4.254 | 4.96 | 4.08 |
| gpt-4-0613 | OpenAI | 0.874 | 4.877 | 4.296 | 4.924 | 4.334 |
| gpt-4-32k-0613 | OpenAI | 0.873 | 4.881 | 4.139 | 4.925 | 4.381 |
| gpt-4o | OpenAI | 0.854 | 4.947 | 4.09 | 4.951 | 4.19 |
| llama-3-70b-instruct | Meta | 0.842 | 4.785 | 3.911 | 4.818 | 3.346 |
| phi-3-medium-4k-instruct | Microsoft | 0.821 | 4.458 | 4.189 | 4.439 | 4.296 |
| mistral-large | Mistral | 0.817 | 4.506 | 3.892 | 4.564 | 3.63 |
| phi-3-medium-128k-instruct | Microsoft | 0.799 | 4.688 | 4.176 | 4.621 | 4.341 |
| mistral-community-mixtral-8x22b-v0-1 | Mistral | 0.797 | 3.605 | 3.285 | 3.78 | 3.235 |
| mistralai-mixtral-8x22b-instruct-v0-1 | Mistral | 0.797 | 4.513 | 4.115 | 4.526 | 4.146 |
| databricks-dbrx-base | Databricks | 0.792 | 4.771 | 3.786 | 4.864 | 3.585 |
| phi-3-small-128k-instruct | Microsoft | 0.786 | 4.55 | 4.239 | 4.583 | 4.043 |
| phi-3-small-8k-instruct | Microsoft | 0.783 | 4.163 | 3.71 | 4.247 | 3.81 |
| llama-3-70b | Meta | 0.769 | -- | -- | -- | -- |
| databricks-dbrx-instruct | Databricks | 0.764 | 4.647 | 4.002 | 4.729 | 4.011 |
| mistralai-mixtral-8x22b-v0-1 | Mistral | 0.763 | 3.622 | 3.259 | 3.82 | 3.24 |
| cohere-command-r-plus | Cohere | 0.761 | 4.715 | 4.237 | 4.836 | 3.995 |
| gpt-35-turbo-0301 | OpenAI | 0.756 | 4.855 | 4.198 | 4.913 | 4.099 |
| gpt-35-turbo-0613 | OpenAI | 0.752 | 4.849 | 3.595 | 4.913 | 3.56 |
| phi-3-mini-128k-instruct | Microsoft | 0.729 | 4.436 | 4.018 | 4.417 | 4.214 |
| phi-3-mini-4k-instruct | Microsoft | 0.728 | 4.166 | 4.099 | 4.204 | 4.251 |
| llama-3-8b-instruct | Meta | 0.708 | 4.658 | 4.112 | 4.737 | 3.702 |
| mistralai-mixtral-8x7b-instruct-v01 | Mistral | 0.703 | 4.838 | 4.228 | 4.915 | 4.129 |
| llama-2-70b | Meta | 0.692 | 3.947 | 2.718 | 4.262 | 2.697 |
| cohere-command-r | Cohere | 0.687 | 4.825 | 4.24 | 4.939 | 4.024 |
| mistralai-mixtral-8x7b-v01 | Mistral | 0.673 | 3.888 | 3.585 | 4.17 | 3.483 |

Some of the key benchmarks for LLMs include:

- GAIA (General AI Assistants) — The GAIA benchmark rigorously tests AI assistants on complex, real-world questions that require multi-step reasoning and tool use, such as web browsing. It assesses both accuracy and the assistant's handling of layered queries.

- MMLU (Massive Multitask Language Understanding) — This benchmark measures breadth of knowledge and problem-solving ability with multiple-choice questions spanning 57 subjects across STEM, the humanities, the social sciences, and more.

- MMLU Pro — An enhanced benchmark that builds on the original MMLU dataset with harder, more reasoning-focused questions and more answer choices per question, increasing both difficulty and robustness.

- GPQA (Graduate-Level Google-Proof Q&A Benchmark) — A rigorous test of the capabilities of LLMs and of scalable oversight mechanisms. Its questions are written by domain experts in biology, physics, and chemistry to guarantee high quality and a level of difficulty that makes them hard to answer even with web search.

- MMMU (Massive Multi-discipline Multimodal Understanding) — This benchmark evaluates multimodal models on college-level questions that combine text with images such as charts, diagrams, maps, and tables, spanning disciplines from art and business to science, medicine, and engineering.

- MT-Bench (Multi-Turn Benchmark) — This benchmark measures how well LLMs sustain coherent, informative, and engaging multi-turn conversations. It assesses conversation flow and instruction following, with a strong LLM acting as judge and scoring each response from 1 to 10.

- LMSYS Chatbot Arena — A crowdsourced platform for comparing chatbots and language models head to head: users vote on which of two anonymous models gave the better response, and the votes are aggregated into Elo-style ratings.

- AlpacaEval — An automated benchmarking tool that evaluates how well LLMs follow instructions. It uses the AlpacaFarm evaluation set and an LLM-based automatic evaluator to measure how closely models' responses align with human preferences, providing a rapid and cost-effective assessment of model capabilities.

- RewardBench — Designed to evaluate the effectiveness and safety of reward models (RMs), which score candidate responses during alignment. These models are crucial for aligning language models with human preferences, especially in Reinforcement Learning from Human Feedback (RLHF).

- HELM (Holistic Evaluation of Language Models) — A comprehensive benchmark that evaluates LLMs on a wide range of scenarios, including text generation, translation, question answering, code generation, and commonsense reasoning, and reports metrics beyond accuracy such as calibration, robustness, and efficiency.

- HellaSwag — A commonsense reasoning benchmark in which the model must choose the most plausible ending for a short description of an everyday situation, drawn from video captions and how-to articles. The wrong endings are adversarially filtered so that they fool models while remaining easy for humans to reject.

- GSM8K (Grade School Math 8K) — A dataset of 8,500 high-quality, linguistically diverse grade school math word problems, created to support question answering on basic mathematical problems that require multi-step reasoning.

- GLUE (General Language Understanding Evaluation) — A benchmark of nine natural language understanding tasks, including sentiment classification (SST-2), paraphrase detection (MRPC), and natural language inference (MNLI and RTE).

- SuperGLUE — An updated version of GLUE with more challenging tasks, providing a more thorough evaluation of LLMs' capabilities.

Evaluating LLMs requires a mix of benchmarks and human assessment for a thorough understanding of their capabilities, and the task's specific needs should guide the choice of benchmark. For instance, GLUE or SuperGLUE suit natural language inference and classification tasks, MMLU or HELM give a broader picture of knowledge and reasoning across many domains, and MT-Bench or Chatbot Arena better reflect chatbot assistance.
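Many of the benchmarks above, such as MMLU, GPQA, and HellaSwag, are multiple-choice. One common way to score them (assumed here; evaluation harnesses differ in prompting and normalization details) is to have the model score each answer option, for example by its log-likelihood, and count how often the top-scoring option is the correct one. In this minimal sketch, hard-coded numbers stand in for real model scores.

```python
def pick_answer(option_scores):
    """Return the index of the highest-scoring answer option.

    option_scores: one number per option, e.g. the model's log-likelihood
    of each answer given the question (hypothetical values here).
    """
    return max(range(len(option_scores)), key=lambda i: option_scores[i])


def multiple_choice_accuracy(items):
    """Fraction of questions where the top-scoring option is the gold answer.

    items: list of (option_scores, gold_index) pairs.
    """
    if not items:
        raise ValueError("need at least one question")
    correct = sum(1 for scores, gold in items if pick_answer(scores) == gold)
    return correct / len(items)


# Hypothetical log-likelihoods for three 4-option questions.
results = [
    ([-2.1, -0.4, -3.0, -1.9], 1),  # model prefers option 1: correct
    ([-1.2, -1.5, -0.9, -2.2], 0),  # model prefers option 2: wrong
    ([-0.3, -2.8, -2.5, -1.7], 0),  # model prefers option 0: correct
]
print(multiple_choice_accuracy(results))
```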

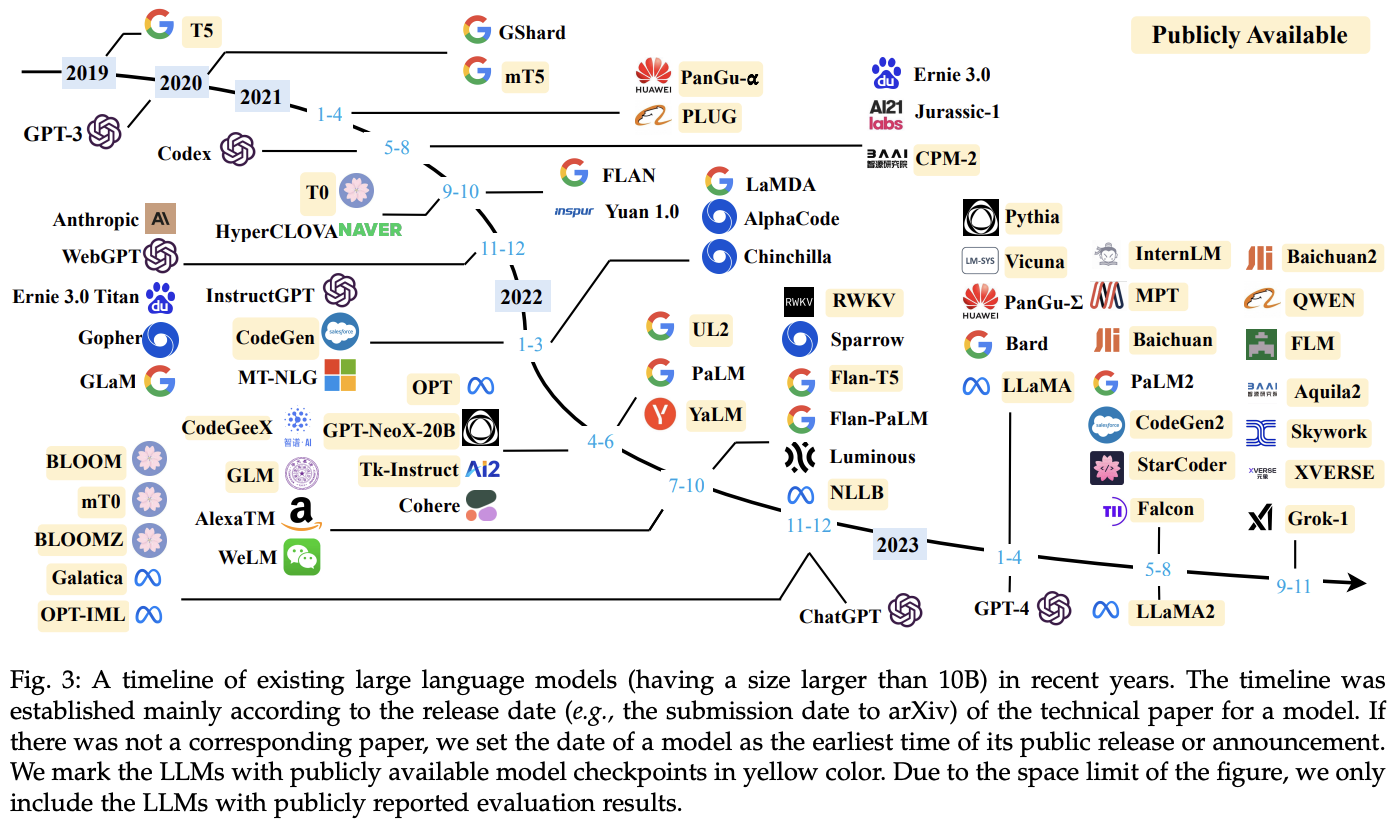

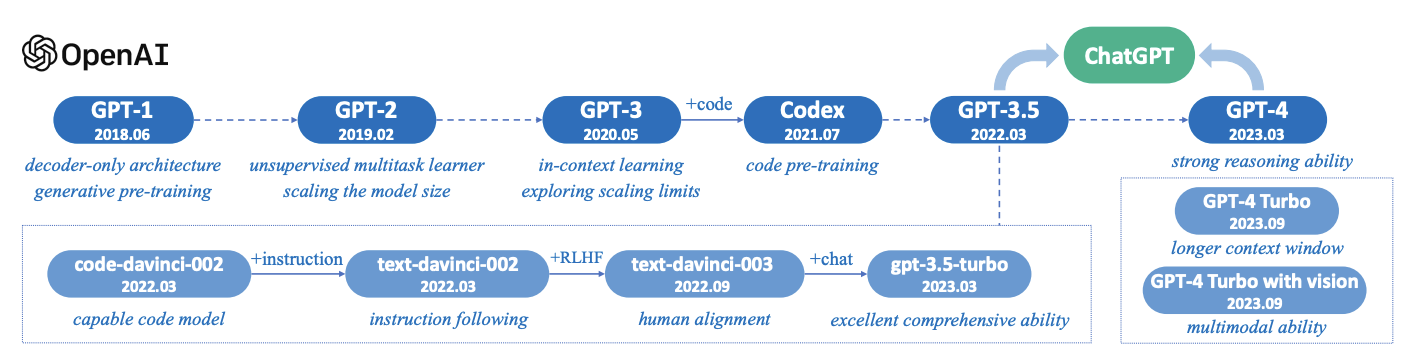

What are the leading LLMs in 2024?

In this section, we compare the leading Large Language Models (LLMs) as of 2024. Since its release in spring 2023, GPT-4 has been the reigning foundation model. As of June 2024, however, OpenAI's GPT-4o leads the LMSYS Chatbot Arena Elo rating. The table lists each model's Arena Elo rating alongside its MT-Bench and MMLU scores where available (a dash marks a missing score). Scores may change as the models are continuously updated and improved.

| Model | Arena Elo rating | MT-Bench | MMLU (%) |
|---|---|---|---|
| GPT-4o-2024-05-13 | 1287 | — | 88.7 |
| Claude 3.5 Sonnet | 1272 | 9.22 | 87.0 |
| Gemini-Advanced-0514 | 1267 | — | — |
| Gemini-1.5-Pro-API-0514 | 1263 | — | 85.9 |
| GPT-4-Turbo-2024-04-09 | 1257 | — | — |
| Gemini-1.5-Pro-API-0409-Preview | 1257 | — | 81.9 |
| Claude 3 Opus | 1253 | 9.45 | 87.1 |
| GPT-4-1106-preview | 1251 | 9.40 | — |
| GPT-4-0125-preview | 1248 | 9.38 | — |
| Yi-Large-preview | 1240 | — | — |
| Gemini-1.5-Flash-API-0514 | 1229 | — | 78.9 |
| Yi-Large | 1215 | — | — |
| Bard (Gemini Pro) | 1203 | 9.18 | — |
| GLM-4-0520 | 1208 | — | — |
| Llama-3-70b-Instruct | 1207 | — | 82.0 |
| Claude 3 Sonnet | 1216 | 9.22 | 87.0 |
| GPT-4-0314 | 1185 | 8.96 | 86.4 |
| Claude 3 Haiku | 1179 | 9.10 | 86.9 |
| GPT-4-0613 | 1155 | 9.18 | — |
| Mistral-Large-2402 | 1159 | 8.63 | 75.5 |
| Mistral Medium | 1148 | 9.18 | — |
| Claude-1 | 1149 | 7.9 | 77 |
| Claude-2.0 | 1131 | 8.06 | 78.5 |
| Mixtral-8x7b-Instruct-v0.1 | 1121 | 8.3 | 70.6 |
| Gemini Pro (Dev API) | 1127 | — | 72.3 |
| Claude-2.1 | 1118 | 8.18 | — |
| GPT-3.5-Turbo-0613 | 1115 | 8.39 | — |
| Claude-Instant-1 | 1109 | 7.85 | 73.4 |
| Tulu-2-DPO-70B | 1108 | 7.89 | — |
| Yi-34B-Chat | 1109 | — | 73.5 |
| Gemini Pro | 1109 | — | 71.8 |
| GPT-3.5-Turbo-0314 | 1104 | 7.94 | 70 |
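The Arena Elo column is derived from pairwise human votes. The sketch below shows the classic Elo update that the name refers to; it is illustrative only, and the leaderboard's actual computation (its K-factor or any statistical fitting method) may differ. It also shows how to read rating gaps: under the Elo model, a 100-point lead corresponds to an expected win rate of roughly 64%.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model (400-point logistic scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a, rating_b, score_a, k=32):
    """Return new (rating_a, rating_b) after one head-to-head vote.

    score_a: 1.0 if A's response won the vote, 0.0 if it lost, 0.5 for a tie.
    k: step size; 32 is a common textbook value, assumed here for illustration.
    """
    exp_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - exp_a)
    return rating_a + delta, rating_b - delta


# Two equally rated models: the winner gains k/2 = 16 points.
print(elo_update(1200, 1200, 1.0))

# A 100-point gap: the higher-rated model is expected to win about 64% of votes.
print(round(expected_score(1300, 1200), 3))
```

Because each vote moves ratings only a little, a leaderboard needs many votes per model before small gaps, like the few points separating some rows in the table, become meaningful.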

How is LLM performance maximized?

To improve the performance of Large Language Models (LLMs), several techniques can be applied. Some of these techniques include:

Architecture Changes

- Multi-Query Attention (MQA) — In MQA, all attention heads share a single set of key and value projections, which shrinks the memory needed to cache keys and values and speeds up inference for tasks such as summarization, question answering, and retrieval-augmented generation. MQA-based efficiency techniques have been reported to deliver up to 11x better throughput and 30% lower latency on inference. Falcon is one model that uses MQA. A variant, Grouped-Query Attention (GQA), uses an intermediate number of key-value heads, achieving quality close to multi-head attention with speed comparable to MQA; LLaMA 2 70B uses GQA.

- Sliding Window Attention — This attention pattern was proposed as part of the Longformer architecture. Each token attends only to a fixed-size window of surrounding tokens. Stacking multiple layers of such windowed attention results in a large receptive field, where top layers have access to all input locations and can build representations that incorporate information across the entire input.

- Data Augmentation — This approach generates new training samples by modifying existing ones, helping to improve the model's performance on limited training data.
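Much of the inference benefit of MQA, GQA, and sliding window attention comes from a smaller key-value (KV) cache, the stored keys and values a model reuses while generating. The back-of-the-envelope calculation below sizes that cache. The model dimensions are hypothetical, chosen only to make the ratios easy to see, and real deployments add overheads this ignores.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len,
                   bytes_per_value=2, window=None, batch_size=1):
    """Memory needed to cache attention keys and values during generation.

    The leading factor 2 counts keys and values. With sliding window
    attention, only the most recent `window` positions need to be kept.
    bytes_per_value=2 assumes 16-bit (fp16/bf16) storage.
    """
    cached_positions = seq_len if window is None else min(seq_len, window)
    return (2 * n_layers * n_kv_heads * head_dim * cached_positions
            * bytes_per_value * batch_size)


GiB = 1024 ** 3
# Hypothetical 32-layer model with 32 query heads of size 128, 4,096-token context.
config = dict(n_layers=32, head_dim=128, seq_len=4096)

mha = kv_cache_bytes(n_kv_heads=32, **config)  # multi-head: one KV head per query head
gqa = kv_cache_bytes(n_kv_heads=8, **config)   # grouped-query: 4 query heads share a KV head
mqa = kv_cache_bytes(n_kv_heads=1, **config)   # multi-query: all heads share one KV head
swa = kv_cache_bytes(n_kv_heads=8, window=1024, **config)  # GQA plus a 1,024-token window

for name, size in [("MHA", mha), ("GQA", gqa), ("MQA", mqa), ("GQA+window", swa)]:
    print(f"{name:>10}: {size / GiB:.4f} GiB")
```

Under these assumptions the cache drops from 2 GiB (MHA) to 0.5 GiB (GQA) and 1/16 GiB (MQA), and a 1,024-token window cuts the GQA figure by another factor of four, which frees memory for larger batches and higher throughput.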

Post-training Model Changes

- Fine-tuning — This involves adapting the model for specific tasks using a task-specific labeled dataset. Techniques like LoRA (Low-Rank Adaptation) add small low-rank matrices alongside existing layers of a large pre-trained model and train only these added matrices, keeping the original parameters frozen.

- Parameter-Efficient Fine-Tuning (PEFT) — This family of techniques, which includes LoRA, reduces the number of parameters that must be trained: only a small fraction of the weights (or a small set of added weights) is updated while the rest stay frozen. This makes fine-tuning more efficient in memory and compute while still delivering strong performance on specific tasks.

- Attention Sinks — This technique combines window attention with a few "attention sink" tokens: the model keeps the cached keys and values of the first few tokens alongside a sliding window of recent tokens, which allows pretrained chat-style LLMs to maintain fluency over long conversations.

- Operator Fusion — Combining adjacent operators (for example, a matrix multiplication and the activation that follows it) into a single kernel often reduces latency by avoiding extra memory reads and writes.

- Quantization — Activations and weights are stored with fewer bits (for example, 8-bit integers instead of 16-bit floats), reducing the model's size and computational requirements.

- Compression — Techniques like sparsity or distillation can reduce the model's size and improve its inference speed.

- Parallelization — Tensor parallelism across multiple devices, or pipeline parallelism for larger models, can help improve latency and throughput.
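Two of the techniques above are simple enough to sketch in plain Python: a LoRA-adapted linear layer and symmetric int8 quantization. Both are minimal illustrations under simplifying assumptions (Python lists instead of tensors, no training loop, one scale for the whole tensor); libraries such as Hugging Face PEFT and bitsandbytes implement these far more efficiently. The class and function names are hypothetical.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight matrix W plus a trainable low-rank update B A.

    Computes W x + (alpha / rank) * B (A x). In LoRA only A and B are
    trained; W stays fixed. (This sketch omits the training loop.)
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weights, shape d_out x d_in
        # A (rank x d_in) starts small and random; B (d_out x rank) starts at
        # zero, so the adapted layer initially matches the original layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def __call__(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        """Parameters LoRA trains: rank * (d_in + d_out)."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def quantize_int8(values):
    """Symmetric per-tensor int8 quantization: q = round(v / scale)."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Approximate reconstruction of the original floats."""
    return [qi * scale for qi in q]


layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], rank=1)
print(layer([2.0, 3.0]))  # B is zero at initialization, so this equals W x

# For a 4096 x 4096 layer, rank-8 LoRA trains 65,536 parameters
# instead of 16,777,216 (about 0.4%).
print(8 * (4096 + 4096), 4096 * 4096)

weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
print(q, dequantize_int8(q, scale))
```

Each dequantized weight lands within half a quantization step of its original value; the trade-off is that 8-bit storage halves memory relative to 16-bit floats.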

Application Changes

- Prompt Engineering — Crafting high-quality prompts or instructions can enhance LLM performance. Common tactics include asking the model to explain its solution step by step and breaking a complex task down into simpler steps.

-

Retrieval-Augmented Generation (RAG) — This method involves retrieving relevant information from a database or knowledge base to augment the LLM's responses, improving the quality and relevance of the generated outputs.

By applying these techniques, you can enhance the performance of LLMs in various ways, such as improving their ability to adapt to specific tasks, generating more relevant and precise outputs, and reducing computational requirements.
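Retrieval-augmented generation and prompt engineering often work together. The sketch below retrieves the most relevant snippets from a small in-memory knowledge base and assembles them, with a step-by-step instruction, into a prompt. It deliberately uses naive word-overlap scoring (real systems typically use embedding similarity or BM25) and omits the actual model call; the function names and prompt wording are illustrative assumptions, not any particular framework's API.

```python
import re


def tokenize(text):
    """Lowercase word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, top_k=2):
    """Rank documents by how many distinct query words they contain."""
    query_words = set(tokenize(query))
    scored = [(len(query_words & set(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep order
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=2):
    """Augment the user's question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer the question using only the context below. "
        "Explain your reasoning step by step.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


knowledge_base = [
    "GSM8K contains about 8,500 grade school math word problems.",
    "MMLU covers 57 subjects with multiple-choice questions.",
    "Sliding window attention limits each token to a fixed-size window.",
]
prompt = build_prompt("How many problems are in GSM8K?", knowledge_base)
print(prompt)  # this string would then be sent to the LLM of your choice
```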

How can enterprises easily deploy LLMs?

Major cloud providers like Google Cloud Platform (GCP), Amazon Web Services (AWS), and Azure offer various platforms and services to access Large Language Models (LLMs) easily. Some of the key offerings include:

- Google Cloud — Google Cloud offers generative AI solutions on Vertex AI, which provides access to Google's large generative AI models for testing and deployment. Google Cloud's TPU series is also optimized for LLM training and has posted some of the fastest training times on MLPerf benchmarks.

- Amazon Bedrock — Amazon Bedrock provides on-demand access to foundation models through APIs, giving developers a flexible platform for accessing and deploying LLMs. AWS also offers its own homegrown family of models, Amazon Titan, and provides discounted foundation model training for partners to encourage the adoption of LLMs on its platform.

- Azure — Microsoft Azure has partnered with OpenAI to offer OpenAI's models through the Azure OpenAI Service, and also hosts other models such as Meta's Llama 2 and Microsoft's own Phi-3. Azure's LLM offerings cater to a wide range of use cases and industries.

- Anyscale — Anyscale is a platform that accelerates AI and LLM app development, optimizes compute availability, and reduces costs. It offers advanced controls for teams that require them and ensures data privacy by deploying the technology stack within a Virtual Private Cloud (VPC).

These cloud providers have made significant investments in LLMs and offer various platforms to access and utilize them. The choice of platform depends on factors such as specific use cases, budget, and security requirements.

What are common applications of LLMs?

Large Language Models (LLMs) have a wide range of applications. They are extensively used in natural language processing to understand text, answer questions, summarize, translate, and more, and larger models generally perform better at these language tasks. LLMs are also used for text generation, producing coherent, human-like text for applications like creative writing, conversational AI, and content creation. They can store world knowledge learned from data and reason about facts and common-sense concepts, which is a key aspect of knowledge representation. LLMs are also being adapted for multimodal learning, where they can understand and generate images, code, music, and more when trained on diverse data. Lastly, LLMs can be fine-tuned on niche data to produce customized assistants, writers, and agents for specific domains.

- Sentiment Analysis — LLMs can be used to analyze the sentiment of text data, which is useful in fields like market research and customer feedback analysis.

- Sales Automation — LLMs can automate certain aspects of the sales process, such as generating personalized emails or identifying potential leads based on text data.

- Keyword Research — In the field of SEO, LLMs can help identify relevant keywords for content creation and optimization.

- Market Research — LLMs can analyze large amounts of text data to provide insights into market trends and consumer behavior.

- Transcription — LLMs can transcribe spoken language into written text, useful in fields like journalism and legal proceedings.

- Content Generation — LLMs can generate high-quality content, including articles, blog posts, and social media posts.

- Chatbots and Virtual Assistants — One of the most popular applications of LLMs is the development of chatbots and virtual assistants that can understand and respond to user queries in a natural, human-like way.

- Scientific Research and Discovery — LLMs can parse, analyze, and synthesize vast corpora of scientific literature, accelerating the research process and facilitating the discovery of new treatments and advancements.

- Financial Services — LLMs have found numerous use cases in the financial services industry, transforming how financial institutions operate and interact with customers. They can analyze market trends, assess credit risks, and enhance security measures.

- Biomedicine — LLMs are often used for literature review and research analysis in biomedicine. They can process and analyze vast amounts of scientific literature, helping researchers extract relevant information, identify patterns, and generate valuable insights.

- Computational Biology — In biology, LLMs help understand proteins, genes, and DNA. They can even help design new drugs and spot diseases.

- Code Generation — LLMs can generate and complete computer programs in various programming languages, making writing software easier.

These are just a few examples, and the potential applications of LLMs are vast and continually expanding as the technology evolves.

How are LLMs impacting natural language AI?

Large Language Models (LLMs) are having a significant impact on natural language AI:

- Rapid progress — Thanks to scaling laws, LLMs are rapidly advancing toward more human-like language capabilities given enough data and compute.

- Broad applications — The versatility of LLMs is enabling natural language AI across many industries and use cases.

- Responsible deployment — Balancing innovation with ethics is important as LLMs become more capable. Issues around bias, misuse, and transparency need addressing.

- New paradigms — LLMs represent a shift toward more generalized language learning versus task-specific engineering. This scales better but requires care and constraints.

FAQs

What is a foundation model?

Foundation models are a type of machine learning model that is designed to be general-purpose and serve as a foundation for developing solutions for a variety of downstream tasks. Some key characteristics of foundation models:

- They are usually pre-trained on large and diverse datasets to learn very general patterns in data such as language, vision, and code. For example, language foundation models are trained on vast text corpora from the web.

- They can transfer knowledge from their initial training to new tasks and domains with minimal modification. This transfer learning capability makes them adaptable.

- They have a wide range of downstream use cases. Rather than serving one specialized purpose, foundation models have shown success on dozens to hundreds of tasks, such as translation, summarization, and question answering.

- They tend to have very large architectures (billions or even trillions of parameters), which allows them to develop a comprehensive understanding of text, visuals, code, and more during their foundational training.

Some examples of popular foundation models are BERT and GPT-3 for language, CLIP for vision and multimodal learning, and Codex for code. These models display strong adaptability and multi-functionality, which allows them to be used in pre-trained form to develop production solutions faster and more effectively. Foundation models are evolving into core tools in AI development stacks.

How do these models understand natural language and generate text?

Large language models like GPT-4 understand natural language and generate human-like text through two key capabilities they develop during foundational training:

- Understanding Linguistic Context: These models are trained on vast datasets of text from books, websites, and other sources. Exposure to such large volumes of natural language teaches them nuances like grammar, semantics, terminology, topical connections, and discourse in different contexts. For example, when trained on scientific papers and news articles, a model learns that the language and topics in those domains differ from casual conversational text. Generating human-like text requires larger models and cleaned data.

- Text Generation: In the training process, these models are asked to predict the next word (more precisely, the next token) based on the preceding text. By practicing this prediction across millions of examples, the models develop strong generative capabilities. When given a text prompt as input, the model can generate a remarkably coherent continuation by repeatedly predicting likely next words based on patterns it learned during training. With large enough models and data, this process results in human-readable synthetic text. Additionally, fine-tuning the models on specialized datasets lets them adapt their generative skills to new domains. The knowledge transfer from foundational pre-training, combined with adaptable generation, makes language models adept at producing diverse, high-quality textual content.
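When generating, a model can either always take the single most likely next token (greedy decoding) or sample from its predicted probability distribution, which is usually reshaped by a temperature parameter. The sketch below shows temperature sampling with hypothetical scores for a four-word vocabulary; real models score tens of thousands of tokens and often combine temperature with top-k or top-p filtering.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw next-token scores into probabilities.

    Lower temperature sharpens the distribution toward the top token;
    higher temperature flattens it, producing more varied text.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive; use argmax for greedy decoding")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


vocab = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]  # hypothetical model scores after "The cat sat on the"

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(0)  # seeded so the samples are reproducible
print([sample_next_token(vocab, logits, 1.0, rng) for _ in range(5)])
```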

How do large language models work?

Large language models are a type of foundation model that uses machine learning techniques, such as deep learning and neural networks, to generate text. They are built on the transformer architecture, which also underlies models such as Bidirectional Encoder Representations from Transformers (BERT). Large language models are a form of artificial intelligence that aims to understand natural language and generate text, learning from the input text in their extensive training data.

Popular large language models such as GPT-3 can generate human-readable text, translate between languages, write software code in programming languages, and more, based on the linguistic patterns they have learned. Their performance continues to improve as researchers find ways to build larger models and train them on more data. The goal is to someday have large language models that can understand natural language and generate text like humans in any domain.

Artificial intelligence continues to transform the landscape of various industries by automating complex tasks and providing insights from large datasets.

Neural networks are at the core of these large language models, enabling them to process and generate language with remarkable efficiency.

By leveraging deep learning, these large language models are adept not only at writing software code in various programming languages but also at translating between languages. The input text they receive during training is meticulously processed, allowing them to learn from a vast array of training data and perform tasks with high efficiency.

How does a transformer model work?

A transformer model is the neural network architecture behind most generative AI systems, including large language models (LLMs). Transformer models use deep learning and artificial neural networks for tasks such as language modeling and language translation.

Why are large language models important?

These very large models leverage the statistical relationships learned through the self-attention mechanism during self-supervised training on massive data. This lets them demonstrate capabilities like in-context learning, few-shot learning, and zero-shot learning for language generation.

What is a transformer model?

Unlike earlier machine learning models for sequences, the transformer architecture contains no recurrent or convolutional layers. Instead, it relies solely on self-attention to draw global dependencies between input and output. The absence of recurrence allows for better parallelization, which makes it practical to train very large language models like GPT-3.

Transformers learn representations of language through self-supervised objectives, such as predicting missing (masked) words in sentences or predicting the next word in a sequence. After training, transformers can generate text, translate between multiple languages, write code, and more using their understanding of linguistic patterns.

Large language models such as generative pre-trained transformers (GPTs) have proven surprisingly adept at creative applications like generating writing, answering questions, summarizing text, and even producing computer programs, without requiring specialized technical expertise from their users. Their broad applicability across domains makes these models valuable in industries from healthcare to the job market.

So while they are not yet at the level of human intelligence, large language models demonstrate how self-supervised generative AI can, on some tasks, yield results comparable to those of human experts when trained on enough data. Flexible web interfaces are helping to democratize these large-scale models for practical use.

How do you assess a model's performance?

Assessing a language model's performance involves a combination of automated metrics, human evaluations, and real-world application testing. Automated metrics can provide quick and scalable assessments, while human evaluations offer insights into the model's fluency, coherence, and ability to handle nuanced language. Real-world tests reveal how the model performs in practical scenarios. Together, these methods provide a comprehensive understanding of a language model's capabilities and limitations.

What is a popular, old-school open source foundation model?

Bidirectional Encoder Representations from Transformers, or BERT, is a groundbreaking method in natural language processing (NLP) that involves training language models to understand the context of a word based on its surroundings in a sentence. Unlike previous models that read text input sequentially from left to right or right to left, BERT reads text in both directions at once. This bidirectionality allows the model to capture a more nuanced understanding of language context and word relationships, leading to improvements in various NLP tasks such as question answering, language inference, and named entity recognition.
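BERT learns this bidirectional context through masked language modeling: some input tokens are hidden, and the model is trained to predict them from the words on both sides. The sketch below follows the masking recipe described in the BERT paper (select 15% of tokens; replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged). It is simplified to whole words rather than BERT's WordPiece subword tokens and covers only data preparation, not the model itself; `mask_tokens` is a hypothetical helper name.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=random):
    """BERT-style masked language modeling input corruption.

    Each token is selected with probability mask_prob. A selected token is
    replaced by [MASK] 80% of the time, by a random vocabulary token 10% of
    the time, and left unchanged 10% of the time. The model is trained to
    recover the original token at every selected position.
    """
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            labels.append(token)  # the model must predict this original token
            r = rng.random()
            if r < 0.8:
                corrupted.append(MASK)
            elif r < 0.9:
                corrupted.append(rng.choice(vocab))
            else:
                corrupted.append(token)
        else:
            labels.append(None)  # not selected: no loss at this position
            corrupted.append(token)
    return corrupted, labels


sentence = "the river bank was flooded after the storm".split()
rng = random.Random(42)
# mask_prob is raised to 0.3 here only so a short sentence shows some masking.
corrupted, labels = mask_tokens(sentence, vocab=sentence, mask_prob=0.3, rng=rng)
print(corrupted)
print(labels)
```

Because the model sees tokens on both sides of each masked position, it has to use left and right context together, which is the bidirectionality described above.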

What are popular large language models?

Popular large language models include GPT-4 and GPT-4 Turbo by OpenAI, BERT by Google, and RoBERTa by Facebook AI. These models have significantly advanced the field of natural language processing, offering capabilities such as text generation, translation, and semantic understanding at scale.

What is a transformer model?

A transformer model is a type of neural network architecture that has become the foundation for many state-of-the-art natural language processing (NLP) models. It was introduced in the paper "Attention Is All You Need" by Vaswani et al. in 2017. The key innovation of the transformer is the use of self-attention mechanisms, which allow the model to weigh how important each part of the input is when processing every other part. This is particularly useful for understanding the context and relationships between words in a sentence.

Unlike previous architectures that processed data sequentially (like RNNs and LSTMs), transformers process all words or tokens in parallel, which significantly speeds up training and allows for more complex and nuanced understanding of language. This parallel processing is facilitated by the attention mechanism, which dynamically adjusts how each token should attend to all other tokens in the sequence.

The original transformer consists of an encoder that processes the input text and a decoder that generates the output text, each composed of multiple layers of self-attention and feed-forward neural networks. Since their introduction, transformers have been the basis for many NLP breakthroughs, including BERT, GPT-3, and RoBERTa, which are capable of a wide range of language understanding and generation tasks. Many of these use only one half of the original design: BERT and RoBERTa are encoder-only models, while GPT-3 is decoder-only.
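The self-attention computation described above fits in a few lines. This sketch implements scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V from "Attention Is All You Need", for a single head in plain Python. It skips the learned query, key, and value projections and the multiple heads a real transformer uses (here the token vectors serve directly as queries, keys, and values), and the `causal` flag shows how a decoder is prevented from attending to future tokens.

```python
import math


def softmax(row):
    """Numerically stable softmax; -inf entries receive zero weight."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    queries, keys, values: one vector (list of floats) per token.
    causal=True masks out future positions, as in decoder-only models like
    GPT; causal=False lets every token attend to every other token, as in
    an encoder like BERT.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # token i may not look ahead
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(len(values[0]))])
    return outputs


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
print(self_attention(x, x, x, causal=True))   # token 0 can only see itself
print(self_attention(x, x, x, causal=False))  # every token sees all three
```

Every row of scores can be computed independently, which is the parallelism that lets transformers train much faster than recurrent models.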

Footnotes

[1] A Survey of Large Language Models