What is Zephyr 7B?

by Stephen M. Walker II, Co-Founder / CEO

Zephyr 7B Model Card

Zephyr 7B, specifically the Zephyr-7B-β model, is the second in the Zephyr series of language models developed by Hugging Face. It is a fine-tuned version of the Mistral-7B-v0.1 model, trained on a mix of publicly available and synthetic datasets using Direct Preference Optimization (DPO).

MMLU (5-shot) & MT-bench Leaderboard

| Model | Arena Elo rating | MT-bench (score) | MMLU | License |
|---|---|---|---|---|
| Zephyr-7B-β | 1049 | 7.34 | 73.5 | MIT |

The model is primarily designed to act as a helpful assistant, capable of generating fluent, interesting, and helpful conversations.

You can chat with an online version here.

How can I download and use Zephyr 7B?

If you want to run the Zephyr model quickly, we recommend starting with Ollama (ollama.ai), which also runs the model fully offline. After installing the tool, run `ollama run zephyr` in your terminal. Ollama downloads the necessary model files on first use and then starts an interactive chat session.
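Besides the interactive terminal session, Ollama serves a local HTTP API (by default at `http://localhost:11434`), so you can also call Zephyr from a script. The sketch below assumes that default address and the `/api/generate` endpoint with streaming disabled; `build_generate_request` and `generate` are illustrative helper names, not part of Ollama itself, and only the standard library is used.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "zephyr") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "zephyr") -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, the reply is a single JSON object; the text is in "response".
    return body["response"]
```

Once `ollama run zephyr` (or `ollama serve`) is running, `generate("Summarize DPO in one sentence.")` returns the model's reply as a plain string.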

To download and use the Zephyr 7B model, you can follow these steps:

- Install the Hugging Face Hub Python library. This library is used to download the model. You can install it with pip, the Python package installer. Open your terminal and run the following command:

```
pip3 install huggingface-hub
```

- Download the Zephyr 7B model. You can download the model with the `huggingface-cli` command. For example, to download the `zephyr-7b-beta.Q4_K_M.gguf` model, run:

```
huggingface-cli download TheBloke/zephyr-7B-beta-GGUF zephyr-7b-beta.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False
```

This command downloads the model to the current directory.

- Use the model. After downloading the model, you can use it for tasks such as text generation or chat. The GGUF file above is a quantized build intended for llama.cpp-based tools such as text-generation-webui. To run the original model with the Transformers library instead, use the `pipeline()` function with the `HuggingFaceH4/zephyr-7b-beta` repository. If you're using Transformers <= v4.34, you'll need to install it from source.
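The steps above can be sketched in Python. `build_zephyr_prompt` is a hypothetical hand-written version of the chat format shown on the Zephyr model card (`<|system|>`, `<|user|>`, `<|assistant|>` turns, each closed by `</s>`), useful for seeing exactly what the model receives. `chat_with_transformers` follows the model card's `pipeline()` usage with the `HuggingFaceH4/zephyr-7b-beta` repository; it assumes `torch`, `transformers`, and `accelerate` (for `device_map="auto"`) are installed, and it downloads the full, unquantized weights the first time it runs.

```python
from typing import Dict, List

EOS = "</s>"  # Zephyr's end-of-sequence token, appended after every turn


def build_zephyr_prompt(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Hand-rolled rendering of the Zephyr chat format (illustrative only)."""
    parts = []
    for message in messages:
        role = message["role"]
        if role not in ("system", "user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<|{role}|>\n{message['content']}{EOS}\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues with its reply.
        parts.append("<|assistant|>\n")
    return "".join(parts)


def chat_with_transformers(messages: List[Dict[str, str]], max_new_tokens: int = 256) -> str:
    """Run the full-precision model through a Transformers text-generation pipeline."""
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model="HuggingFaceH4/zephyr-7b-beta",
        torch_dtype=torch.bfloat16,
        device_map="auto",
    )
    # The tokenizer ships Zephyr's own chat template; prefer it over the hand-rolled one.
    prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    outputs = pipe(prompt, max_new_tokens=max_new_tokens, do_sample=True,
                   temperature=0.7, top_k=50, top_p=0.95)
    # By default, "generated_text" contains the prompt followed by the model's reply.
    return outputs[0]["generated_text"]
```

In real use, `apply_chat_template` is the safer choice, since it always matches the template stored with the tokenizer; the hand-rolled function is there to make the format visible.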

Please note that although Zephyr 7B is small by LLM standards, running it locally still requires substantial memory and compute. We recommend a MacBook Pro with an M2 chip or later, and make sure your machine has enough resources to handle the model.
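To make the resource requirement concrete, here is a back-of-envelope estimate of the memory needed for the weights alone, using the nominal 7 billion parameters. It is a rough sketch: it ignores the KV cache, activations, and runtime overhead, and k-quant formats such as Q4_K_M store somewhat more than 4 bits per weight on average, so the actual GGUF file is a bit larger than the 4-bit figure.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Nominal 7B parameters at a few common precisions (weights only).
for label, bits in [("bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

This prints roughly 14.0 GB for bf16, 7.0 GB for 8-bit, and 3.5 GB for 4-bit, which is why quantized GGUF builds are the usual choice on laptops.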

If you want to use the model in a web interface, you can use the text-generation-webui tool. You can download the model using the tool's interface by entering the model repo (TheBloke/zephyr-7B-beta-GGUF) and the filename (zephyr-7b-beta.Q4_K_M.gguf) under the "Download Model" section. After the model is downloaded, you can load it and use it for text generation.

Model Description

Zephyr 7B is a 7-billion-parameter GPT-like model, trained primarily on English data. It is released under the MIT license and is fine-tuned from the Mistral-7B-v0.1 model. The model was trained using Direct Preference Optimization (DPO), a technique that fine-tunes a model directly on pairs of preferred and rejected responses without training a separate reward model, and which proved effective at improving the model's chat performance.
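The DPO objective for a single preference pair can be written out directly. Given a prompt x, a preferred response y_w and a rejected response y_l, DPO compares how much more likely the trained policy makes each response than a frozen reference model does (for Zephyr, the dSFT model plays this role): loss = -log σ(β[(log π(y_w|x) - log π_ref(y_w|x)) - (log π(y_l|x) - log π_ref(y_l|x))]). The sketch below computes that loss from already-summed sequence log-probabilities; real training works on batches of token log-probabilities with autograd, and `beta=0.1` here is an assumed default rather than a value taken from this article.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) pair from summed sequence log-probabilities."""
    # How much the policy has moved toward each response relative to the reference model.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)), computed as a numerically stable softplus(-margin).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy still equals the reference model, the margin is zero and the loss is log 2 (about 0.693); it falls toward zero as the policy shifts probability toward the chosen response and away from the rejected one.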

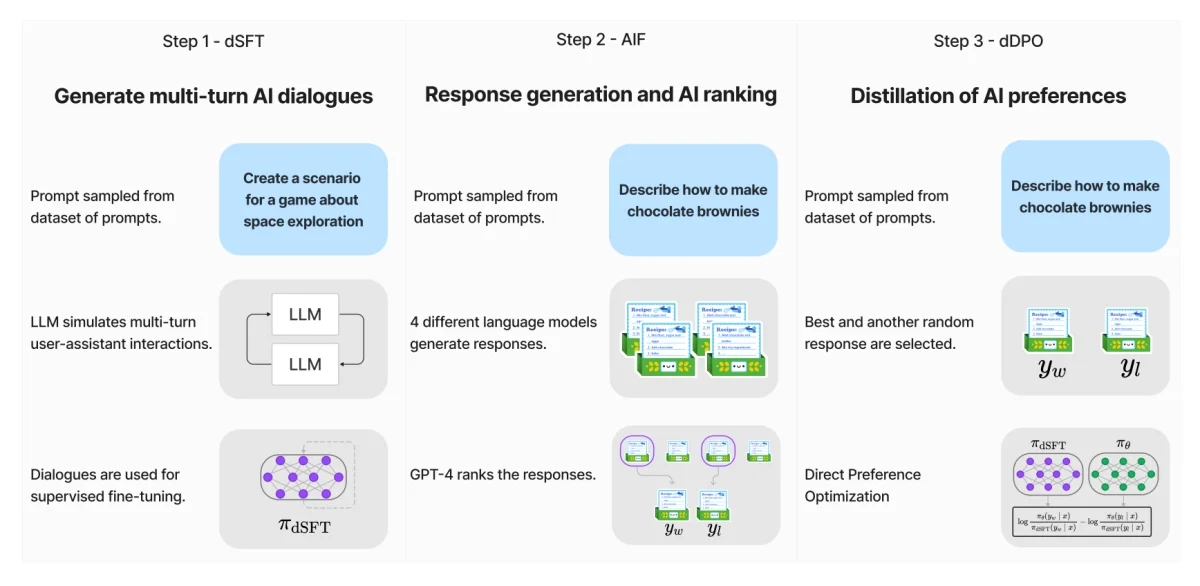

The fine-tuning process of Zephyr 7B involved three key steps:

- Large-scale dataset construction, self-instruct-style, using the UltraChat dataset, followed by distilled supervised fine-tuning (dSFT).

- Gathering AI feedback (AIF): an ensemble of chat models generates completions, GPT-4 scores them (UltraFeedback), and the scores are turned into preference data. Relying on AI feedback (RLAIF) instead of human feedback (RLHF) removes the need for costly human preference annotation.

- Distilled direct preference optimization (dDPO) applied to the dSFT model using the collected feedback data.
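Step 2 is where scored completions become the preference pairs that dDPO trains on. The sketch below assumes a simple selection rule: the highest-scoring completion becomes "chosen" and one of the remaining completions, picked at random, becomes "rejected". This rule and the `build_preference_pair` name are illustrative assumptions, not a verbatim copy of the Zephyr training code.

```python
import random
from typing import Dict, Sequence


def build_preference_pair(
    prompt: str,
    completions: Sequence[str],
    scores: Sequence[float],
    rng: random.Random,
) -> Dict[str, str]:
    """Turn AI-scored completions into one (chosen, rejected) pair for DPO."""
    if len(completions) != len(scores) or len(completions) < 2:
        raise ValueError("need at least two completions, each with one score")
    # Chosen: the completion with the highest score.
    best = max(range(len(scores)), key=lambda i: scores[i])
    # Rejected: a random pick among the remaining completions.
    others = [i for i in range(len(completions)) if i != best]
    worse = rng.choice(others)
    return {"prompt": prompt, "chosen": completions[best], "rejected": completions[worse]}
```

Picking the rejected response at random, rather than always taking the lowest-scoring one, yields pairs of varying difficulty instead of only the most obvious contrasts.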

Performance

At the time of its release, Zephyr-7B-β was the highest-ranked 7B chat model on the MT-Bench and AlpacaEval benchmarks. It achieved a score of 7.34 on MT-Bench and a win rate of 90.60% on AlpacaEval, outperforming many larger models.

Compared with larger open models such as Llama2-Chat-70B, Zephyr-7B-β performs strongly on several MT-Bench categories. However, it lags behind proprietary models on more complex tasks such as coding and mathematics.

Usage

Zephyr 7B can be used in a variety of applications, including:

- Conversational AI: It is capable of engaging in human conversations, providing assistance, and answering queries.

- Text generation: It can generate text for a variety of tasks, including answering questions, telling stories, and writing poems.

- Research and education: It can be used as a tool for research and educational purposes.

However, Zephyr 7B can produce problematic outputs, especially when prompted to do so, so it is recommended only for educational and research purposes.

Limitations

While Zephyr 7B has shown impressive performance, it has some limitations. It has not been aligned to human preferences for safety with techniques like RLHF, nor deployed with in-the-loop filtering of responses as ChatGPT is. As a result, the model can produce problematic outputs, especially when prompted to do so.

The size and composition of the corpus used to train the base model (Mistral-7B-v0.1) are unknown, but it is likely to have included a mix of Web data and technical sources like books and code.

What is Zephyr 7B?

Zephyr 7B is a large language model (LLM) developed by Hugging Face. It is a fine-tuned version of the Mistral-7B-v0.1 model, trained on a mix of publicly available and synthetic datasets. The model is designed for a range of language tasks, such as generating coherent text, translating between languages, summarizing important information, analyzing sentiment, and answering questions based on context.

Zephyr 7B is part of the Zephyr series of language models, which includes Zephyr-7B-α and Zephyr-7B-β. These models are designed to act as helpful AI assistants, with Zephyr-7B-β being the highest-ranked 7B chat model on the MT-Bench and AlpacaEval benchmarks at the time of its release.

The model is trained using techniques such as Distilled Supervised Fine-Tuning (dSFT), Direct Preference Optimization (DPO), and Distilled Direct Preference Optimization (dDPO). These techniques help to improve the model's performance on various tasks, making it competitive with larger models.

Zephyr 7B can be accessed and used via the Hugging Face Transformers library, and it's also possible to run the model on your own device using the Rust + Wasm stack. The model is licensed under the MIT license and is primarily trained in English.

In practice, Zephyr 7B can be used to build conversational AI systems, such as a question-answering system driven by a short Python script.
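A minimal, hypothetical sketch of such a question-answering system: the prompt is built in the Zephyr chat format and handed to a `generate` callable, which stands in for whichever backend you use (an Ollama HTTP call, llama.cpp, or a Transformers pipeline). The function names and the system instruction are illustrative assumptions, and injecting the backend keeps the prompt logic testable without loading the model.

```python
from typing import Callable, Dict, List

EOS = "</s>"  # Zephyr's end-of-sequence token


def format_zephyr(messages: List[Dict[str, str]]) -> str:
    """Render messages in the Zephyr chat format and open an assistant turn."""
    rendered = "".join(f"<|{m['role']}|>\n{m['content']}{EOS}\n" for m in messages)
    return rendered + "<|assistant|>\n"


def answer_question(context: str, question: str, generate: Callable[[str], str]) -> str:
    """Answer `question` from `context`; `generate` maps a prompt string to model text."""
    messages = [
        {"role": "system",
         "content": "Answer using only the provided context. "
                    "If the answer is not in the context, say you don't know."},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]
    # Strip surrounding whitespace the model may emit around its answer.
    return generate(format_zephyr(messages)).strip()
```

Swapping backends only means passing a different `generate` function; the prompt construction stays the same.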