Ollama: Easily run LLMs locally

by Stephen M. Walker II, Co-Founder / CEO

What is Ollama?

Ollama is a streamlined tool for running open-source LLMs locally, including Mistral and Llama 2. Ollama bundles model weights, configurations, and datasets into a unified package managed by a Modelfile.

Ollama supports a variety of LLMs, including Llama 2, uncensored Llama, Code Llama, Falcon, Mistral, Vicuna, WizardCoder, and Wizard uncensored.

Ollama Models

Ollama supports a variety of models, including Llama 2, Code Llama, and others, and it bundles model weights, configuration, and data into a single package, defined by a Modelfile.

The most popular models on Ollama are:

| Model | Description | Pulls | Updated |
|---|---|---|---|
| llama2 | The most popular model for general use. | 220K | 2 weeks ago |
| mistral | The 7B model released by Mistral AI, updated to version 0.2. | 134K | 5 days ago |
| codellama | A large language model that can use text prompts to generate and discuss code. | 98K | 2 months ago |
| dolphin-mixtral | An uncensored, fine-tuned model based on the Mixtral MoE that excels at coding tasks. | 84K | 10 days ago |
| mistral-openorca | Mistral 7b fine-tuned using the OpenOrca dataset. | 57K | 3 months ago |
| llama2-uncensored | Uncensored Llama 2 model by George Sung and Jarrad Hope. | 44K | 2 months ago |

Ollama also supports the creation and use of custom models. You create a model from a Modelfile: Ollama reads the file, creates the model's layers, writes the weights, and prints a success message when it finishes.

Some of the other models available on Ollama include:

- Llama2: Meta's foundational "open source" model.

- Mistral/Mixtral: The 7 billion parameter model released by Mistral AI, and Mixtral, its mixture-of-experts (MoE) sibling.

- Llava: A multimodal model called LLaVA (Large Language and Vision Assistant) which can interpret visual inputs.

- CodeLlama: A model trained on both code and natural language in English.

- DeepSeek Coder: Trained from scratch on a mix of 87% code and 13% natural language in English.

- Meditron: An open-source medical large language model adapted from Llama 2 to the medical domain.

Installation and Setup of Ollama

- Download Ollama from the official website.

- After downloading, the installation process is straightforward and similar to other software installations. For macOS and Linux users, you can install Ollama with one command:

curl https://ollama.ai/install.sh | sh

- Once installed, Ollama creates an API where it serves the model, allowing users to interact with the model directly from their local machine.

Ollama is compatible with macOS and Linux, with Windows support coming soon. It can be easily installed and used to run various open-source models locally. You can select the model you want to run locally from the Ollama library.
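
To make the local API concrete, here is a minimal Python sketch of a request against it. It assumes the server listens on the default address http://localhost:11434 and exposes the /api/generate endpoint; the helper names build_generate_request and generate are hypothetical, chosen only for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the /api/generate endpoint."""
    # "stream": False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Example call, left as a comment because it needs a running Ollama server:
# print(generate("llama2", "Why is the sky blue?"))
```

Building the request separately from sending it makes it easy to inspect the payload before anything touches the network.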

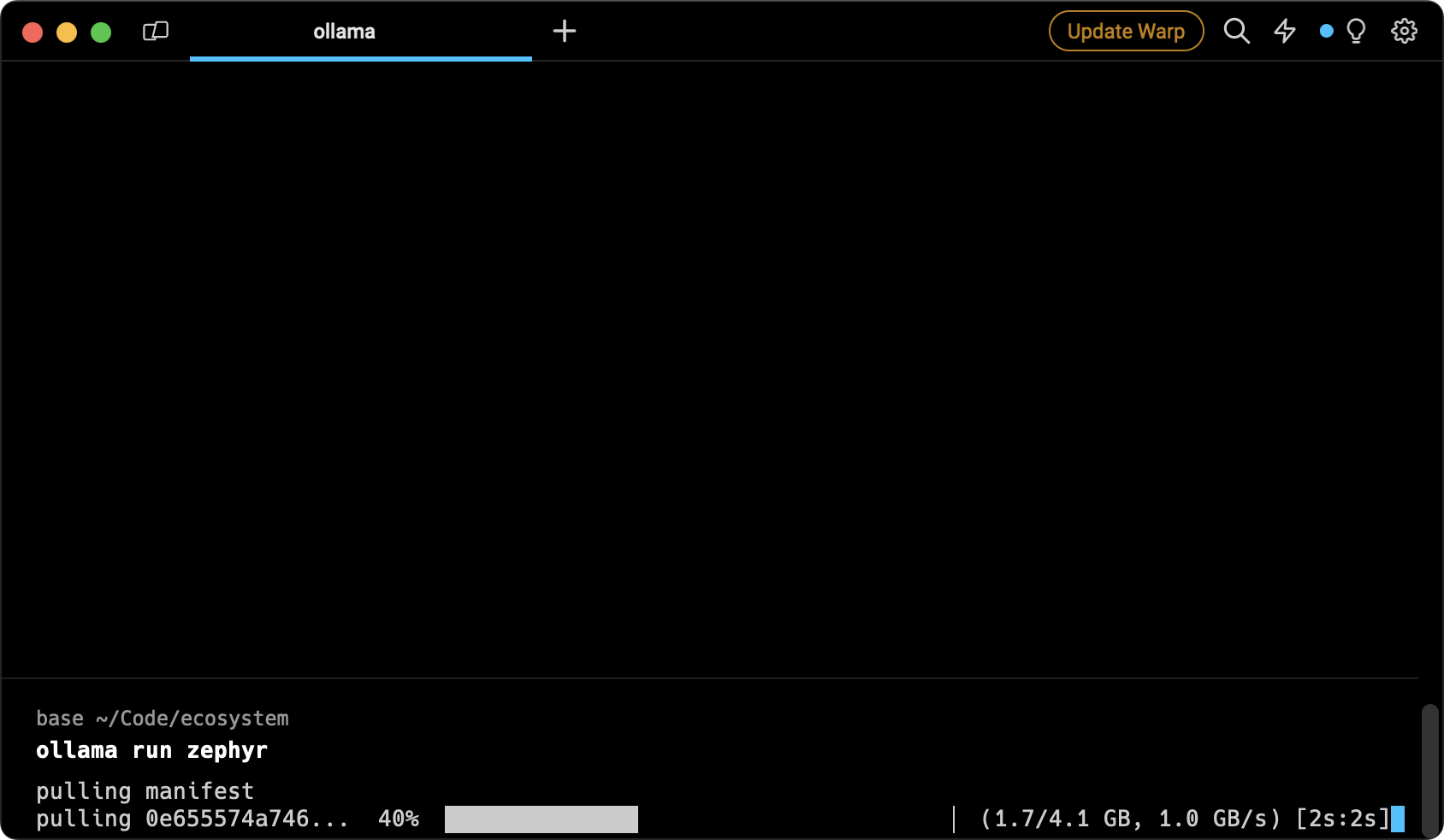

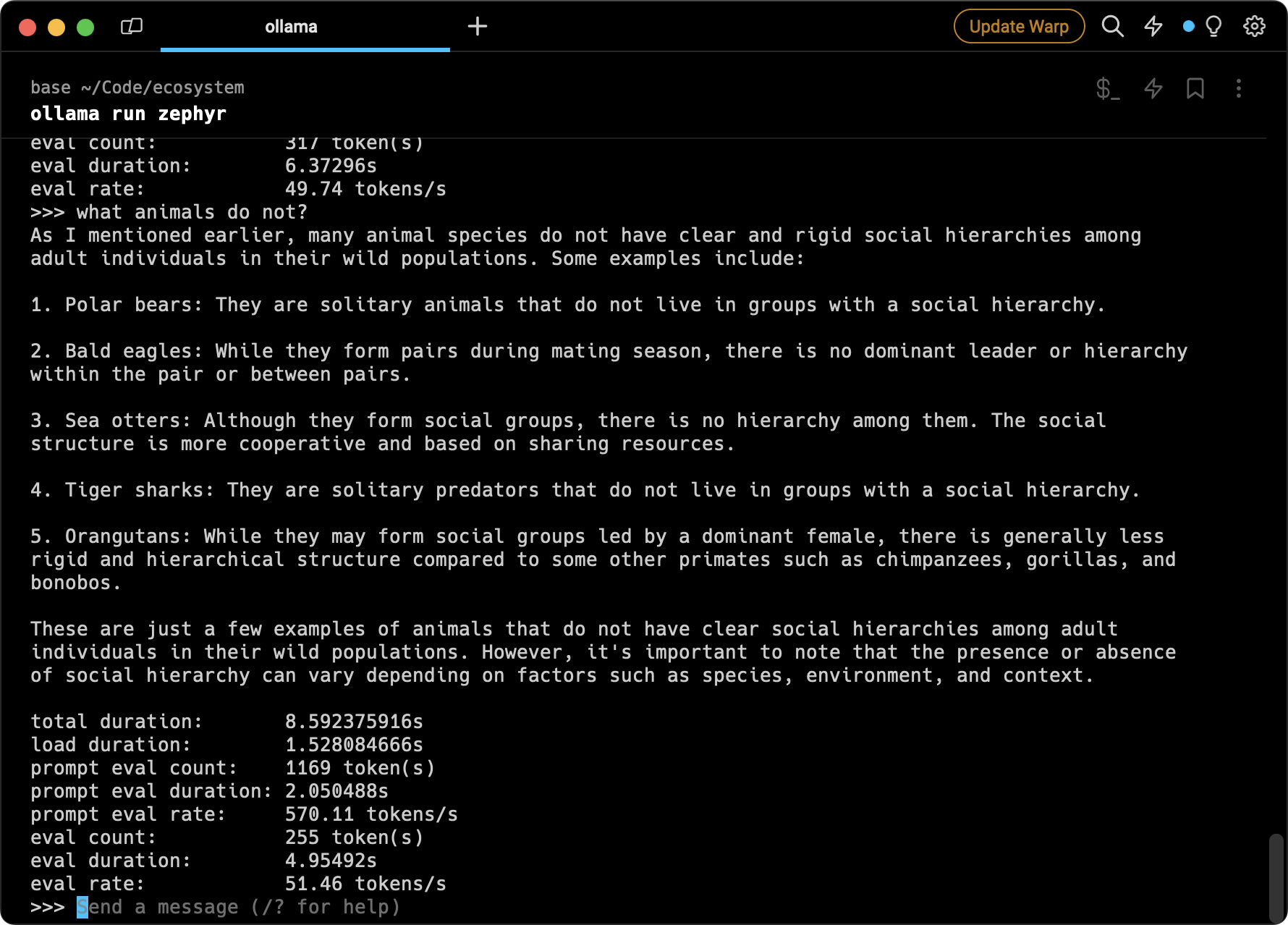

Running Models Using Ollama

Running models using Ollama is a simple process. Users can download and run models using the run command in the terminal. If the model is not installed, Ollama will automatically download it first. For example, to run the Code Llama model, you would use the command ollama run codellama.

| Model | RAM | Download Command |
|---|---|---|
| Llama 2 | 16GB | ollama pull llama2 |
| Llama 2 Uncensored | 16GB | ollama pull llama2-uncensored |
| Llama 2 13B | 32GB | ollama pull llama2:13b |
| Orca Mini | 8GB | ollama pull orca |
| Vicuna | 16GB | ollama pull vicuna |
| Nous-Hermes | 32GB | ollama pull nous-hermes |
| Wizard Vicuna Uncensored | 32GB | ollama pull wizard-vicuna |
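
As a rough illustration of how you might use the table, the following Python sketch filters the listed models by the RAM you have available. The figures are copied from the table; the helper names models_that_fit and pull_command are hypothetical.

```python
# RAM guidance copied from the table above: model tag -> recommended RAM in GB.
RAM_REQUIREMENTS_GB = {
    "llama2": 16,
    "llama2-uncensored": 16,
    "llama2:13b": 32,
    "orca": 8,
    "vicuna": 16,
    "nous-hermes": 32,
    "wizard-vicuna": 32,
}


def models_that_fit(available_ram_gb: int) -> list[str]:
    """Return the tags whose recommended RAM does not exceed what is available."""
    return sorted(
        tag for tag, needed in RAM_REQUIREMENTS_GB.items()
        if needed <= available_ram_gb
    )


def pull_command(tag: str) -> list[str]:
    """Build the download command for a tag, ready to hand to subprocess.run."""
    return ["ollama", "pull", tag]


for tag in models_that_fit(16):
    print(" ".join(pull_command(tag)))
```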

Running Ollama Chat UI

Now that you have Ollama running, you can also use Ollama as a local ChatGPT alternative. Download the Ollama WebUI and follow these steps to be running in minutes.

- Clone the Ollama Web UI repository from GitHub

- Follow the README instructions to set up the Web UI.

git clone https://github.com/ollama-webui/ollama-webui.git

Then run the docker run command.

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v ollama-webui:/app/backend/data --name ollama-webui --restart always ghcr.io/ollama-webui/ollama-webui:main

Or, if you're allergic to Docker, you can use the lite version instead.

git clone https://github.com/ollama-webui/ollama-webui-lite.git

cd ollama-webui-lite

pnpm i && pnpm run dev

And in either case, visit http://localhost:3000 and you're ready for local LLM magic.

Where are Ollama models stored?

Ollama models are stored in the ~/.ollama/models directory on your local machine. This directory contains all the models that you have downloaded or created. The model data itself is stored in a subdirectory named blobs.

When you download a model using the ollama pull command, its manifest is stored at ~/.ollama/models/manifests/registry.ollama.ai/library/<model family>/latest. If you specify a particular version during the pull operation, the manifest is stored at ~/.ollama/models/manifests/registry.ollama.ai/library/<model family>/<version>.
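
The path layout described above can be sketched in a few lines of Python. This assumes the default ~/.ollama/models location and the name:version tag syntax used by ollama pull; manifest_path is a hypothetical helper, not part of Ollama.

```python
from pathlib import Path

# Assumption: models live in the default location under the home directory.
MODELS_DIR = Path.home() / ".ollama" / "models"


def manifest_path(model: str, models_dir: Path = MODELS_DIR) -> Path:
    """Map a tag such as "llama2" or "llama2:13b" to its path under manifests/."""
    family, _, version = model.partition(":")
    return (
        models_dir / "manifests" / "registry.ollama.ai" / "library"
        / family / (version or "latest")  # no explicit version means "latest"
    )


print(manifest_path("llama2"))
print(manifest_path("llama2:13b"))
```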

If you want to import a custom model, you can create a Modelfile with a FROM instruction that specifies the local filepath to the model you want to import. After creating the model in Ollama using the ollama create command, you can run the model using the ollama run command.
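
A minimal sketch of that import flow, driven from Python, might look like the following. It assumes the ollama CLI is on your PATH; the file name ./my-model.gguf, the model name my-model, and the helper names are hypothetical placeholders.

```python
import subprocess
from pathlib import Path


def modelfile_text(weights_path: str) -> str:
    """Return a one-line Modelfile whose FROM instruction points at local weights."""
    return f"FROM {weights_path}\n"


def create_command(name: str, modelfile: str) -> list[str]:
    """Build the `ollama create` invocation for the given Modelfile."""
    return ["ollama", "create", name, "-f", modelfile]


def import_model(name: str, weights_path: str, workdir: str = ".") -> None:
    """Write the Modelfile, then ask Ollama to create the model from it."""
    modelfile = Path(workdir) / "Modelfile"
    modelfile.write_text(modelfile_text(weights_path))
    subprocess.run(create_command(name, str(modelfile)), check=True)


# Example call, left as a comment because it needs Ollama and a real weights file:
# import_model("my-model", "./my-model.gguf")
# Afterwards the model can be started with: ollama run my-model
```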

Please note that these models can take up a significant amount of disk space. For instance, the 13b llama2 model, for which 32GB of RAM is recommended, occupies several gigabytes on disk by itself. Therefore, it's important to manage your storage space effectively, especially if you're working with multiple large models.

Using Ollama with Python

You can also use Ollama with Python. LiteLLM is a Python library that provides a unified interface to interact with various LLMs, including those run by Ollama.

To use Ollama with LiteLLM, you first need to ensure that your Ollama server is running. Then, you can use the litellm.completion function to make requests to the server. Here's an example of how to do this:

from litellm import completion

# The "ollama/" prefix routes the request to the local Ollama server.
response = completion(
    model="ollama/llama2",
    messages=[{"content": "respond in 20 words. who are you?", "role": "user"}],
    api_base="http://localhost:11434",  # address of the Ollama server
)

print(response)

In this example, ollama/llama2 is the model being used, and the messages parameter contains the input for the model. The api_base parameter is the address of the Ollama server.

The use case this unlocks is the ability to run LLMs locally, which can be beneficial for several reasons:

- Development — Quickly iterate locally without needing to deploy model changes.

- Privacy and Security — Running models locally means your data doesn't leave your machine, which can be crucial if you're working with sensitive information.

- Cost — Depending on the volume of your usage, running models locally could be more cost-effective than making API calls to a cloud service.

- Control — You have more control over the model and can tweak it as needed.

Moreover, LiteLLM's unified interface allows you to switch between different LLM providers easily, which can be useful if you want to compare the performance of different models or if you have specific models that you prefer for certain tasks.

In the LiteLLM example above, api_base is the URL where Ollama is serving the model (by default, this is http://localhost:11434), and the part of model after the ollama/ prefix is the name of the model you want to use (in this case, llama2).
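
To illustrate what switching providers could look like, here is a small sketch that builds the arguments for litellm.completion in one place. The helper names completion_kwargs and ask are hypothetical, and the hosted model name in the usage comment is only an example of a non-Ollama provider.

```python
from typing import Optional


def completion_kwargs(model: str, prompt: str, api_base: Optional[str] = None) -> dict:
    """Build keyword arguments for litellm.completion for a single user prompt."""
    kwargs = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    if api_base is not None:
        # Only local or self-hosted servers such as Ollama need an explicit address.
        kwargs["api_base"] = api_base
    return kwargs


def ask(model: str, prompt: str, api_base: Optional[str] = None):
    """Send the prompt through LiteLLM (needs litellm installed and a reachable model)."""
    from litellm import completion  # imported lazily so the helper above works without it

    return completion(**completion_kwargs(model, prompt, api_base))


# Same call shape, different providers (left as comments; both need live backends):
# ask("ollama/llama2", "who are you?", api_base="http://localhost:11434")
# ask("gpt-3.5-turbo", "who are you?")
```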

Additional Features

One of the unique features of Ollama is its support for importing GGUF and GGML file formats in the Modelfile. This means if you have a model that is not in the Ollama library, you can create it, iterate on it, and upload it to the Ollama library to share with others when you are ready.

Available Models

Ollama supports a variety of large language models. Here are some of the models available on Ollama:

- Mistral — The Mistral 7B model released by Mistral AI.

- Llama2 — The most popular model for general use.

- CodeLlama — A large language model that can use text prompts to generate and discuss code.

- Llama2-Uncensored — Uncensored Llama 2 model by George Sung and Jarrad Hope.

- Orca-Mini — A general-purpose model ranging from 3 billion parameters to 70 billion, suitable for entry-level hardware.

- Vicuna — General use chat model based on Llama and Llama 2 with 2K to 16K context sizes.

- Wizard-Vicuna-Uncensored — Wizard Vicuna Uncensored is a 7B, 13B, and 30B parameter model based on Llama 2 uncensored by Eric Hartford.

- Phind-CodeLlama — Code generation model based on CodeLlama.

- Nous-Hermes — General use models based on Llama and Llama 2 from Nous Research.

- Mistral-OpenOrca — Mistral OpenOrca is a 7 billion parameter model, fine-tuned on top of the Mistral 7B model using the OpenOrca dataset.

- WizardCoder — Llama based code generation model focused on Python.

- Wizard-Math — Model focused on math and logic problems.

- MedLlama2 — Fine-tuned Llama 2 model to answer medical questions based on an open source medical dataset.

- Wizard-Vicuna — Wizard Vicuna is a 13B parameter model based on Llama 2 trained by MelodysDreamj.

- Open-Orca-Platypus2 — Merge of the Open Orca OpenChat model and the Garage-bAInd Platypus 2 model. Designed for chat and code generation.

You can find a complete list of available models on the Ollama Model Library page.

Remember to ensure you have adequate RAM for the model you are running. For example, the Code Llama model recommends 8GB of memory for a 7 billion parameter model, 16GB for a 13 billion parameter model, and 32GB for a 34 billion parameter model.

Conclusion

Ollama is a powerful tool for running large language models locally, making it easier for users to leverage the power of LLMs. Whether you're a developer looking to integrate AI into your applications or a researcher exploring the capabilities of LLMs, Ollama provides a user-friendly and flexible platform for running these models on your local machine.

FAQs

Is Ollama open source?

Yes, Ollama is open source. It is a platform that allows you to run large language models, such as Llama 2, locally. Ollama bundles model weights, configuration, and data into a single package, defined by a Modelfile. It optimizes setup and configuration details, including GPU usage.

The source code for Ollama is publicly available on GitHub. In addition to the core platform, there are also open-source projects related to Ollama, such as an open-source chat UI for Ollama.

Ollama supports a list of open-source models available on its library. These models are trained on a wide variety of data and can be downloaded and used with the Ollama platform.

To use Ollama, you can download it from the official website, and it is available for macOS and Linux, with Windows support coming soon. There are also tutorials available online that guide you on how to use Ollama to build open-source versions of various applications.

What does Ollama do?

Ollama is a tool that allows you to run open-source large language models (LLMs) locally on your machine. It supports a variety of models, including Llama 2, Code Llama, and others. It bundles model weights, configuration, and data into a single package, defined by a Modelfile.

Ollama is an extensible platform that enables the creation, import, and use of custom or pre-existing language models for a variety of applications, including chatbots, summarization tools, and creative writing aids. It prioritizes privacy and is free to use, with seamless integration capabilities for macOS and Linux users, and upcoming support for Windows.

The platform streamlines the deployment of language models on local machines, offering users control and ease of use. Ollama's library (ollama.ai/library) provides access to open-source models such as Mistral, Llama 2, and Code Llama, among others.

System requirements for running models vary; a minimum of 8 GB of RAM is needed for 3B parameter models, 16 GB for 7B, and 32 GB for 13B models. Additionally, Ollama can serve models via a REST API for real-time interactions.
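
For real-time interactions, the REST API can stream its answer in pieces. The sketch below assumes the streaming format is newline-delimited JSON, where each chunk carries a "response" text fragment and the final chunk sets "done" to true; collect_stream is a hypothetical helper that joins those fragments.

```python
import json
from typing import Iterable


def collect_stream(lines: Iterable[bytes]) -> str:
    """Join the text fragments from a newline-delimited JSON stream."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk marks the end of the answer
    return "".join(parts)


# Simulated stream, so the sketch runs without a server:
sample = [
    b'{"response": "Hel", "done": false}\n',
    b'{"response": "lo", "done": false}\n',
    b'{"response": "", "done": true}\n',
]
print(collect_stream(sample))
```

With a running server, the same function could be fed the response object returned by urllib.request.urlopen, since iterating over it yields lines as bytes.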

Use cases for Ollama are diverse, ranging from LLM-powered web applications to integration with local note-taking tools like Obsidian. In summary, Ollama offers a versatile and user-friendly environment for leveraging the capabilities of large language models locally for researchers, developers, and AI enthusiasts.