Prompt Engineering Guide: A Comprehensive Examination of Prompt Techniques

by Stephen M. Walker II, Co-Founder / CEO

Top tip

Updated on January 7, 2025 with additional strategies, techniques, and best practices.

Overview

At Klu, we've had the unique opportunity to learn from thousands of AI teams and engineers building generative prompts. This guide distills learnings from conversations with leading teams — from Google DeepMind to OpenAI to Maze to Superhuman — at the forefront of building with AI.

Prompt engineering is the key to unlocking the potential of LLM systems. However, opinions on it vary widely — from those who consider it overrated to those who believe it's only for individuals with advanced degrees.

After surveying the breakout and highly successful generative AI products or features — OpenAI ChatGPT, Github Copilot, Notion AI, Midjourney — one thing is clear: you can still count the number of exceptional generative products on one hand, and all effectively utilize exceptional prompts. Effective prompt engineering is not difficult, but far from mastered by all.

After reviewing numerous prompt engineering guides it became clear that they were not authored by individuals actively developing applications powered by LLMs. While some are good, they often lack real-world learnings across different models.

This guide is designed specifically for those working on Generative AI products — and written by someone with years of prompt engineering experience.

Key aspects of prompt engineering include:

-

Crafting Prompts — Selecting the appropriate words, phrases, sentence structures, and examples to effectively prompt the model.

-

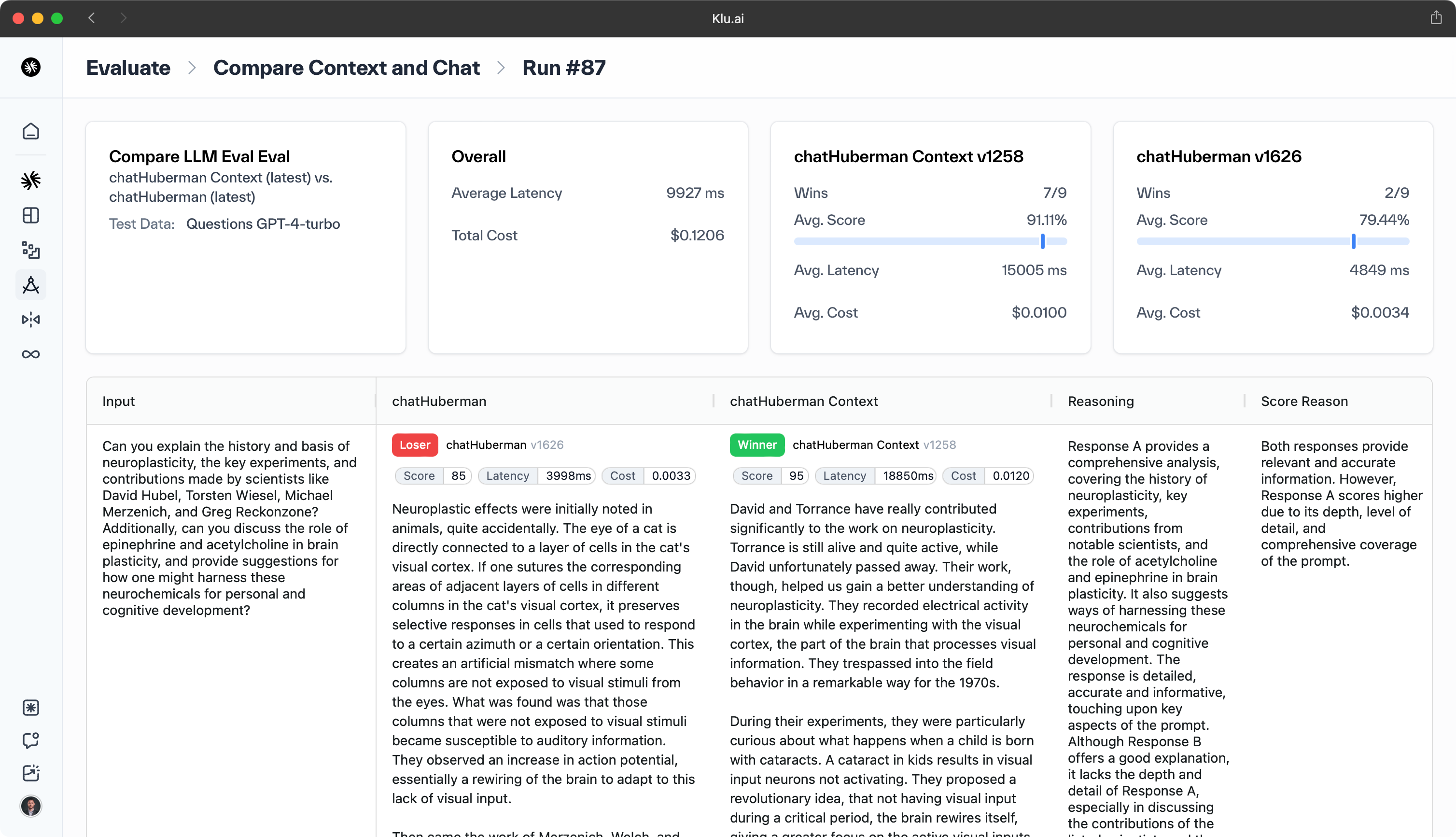

Prompt Evaluation — Evaluating performance of prompts on differerent models or example test datasets with a diverse array of inputs.

-

Iterating and Experimentation — Continuously improving the accuracy and relevance of generated outputs by adjusting the prompts.

-

Interdisciplinary Collaboration — Prompt engineering requires the integration of expertise from linguistics and computer science, emphasizing the need for cross-disciplinary collaboration, particularly with domain task experts.

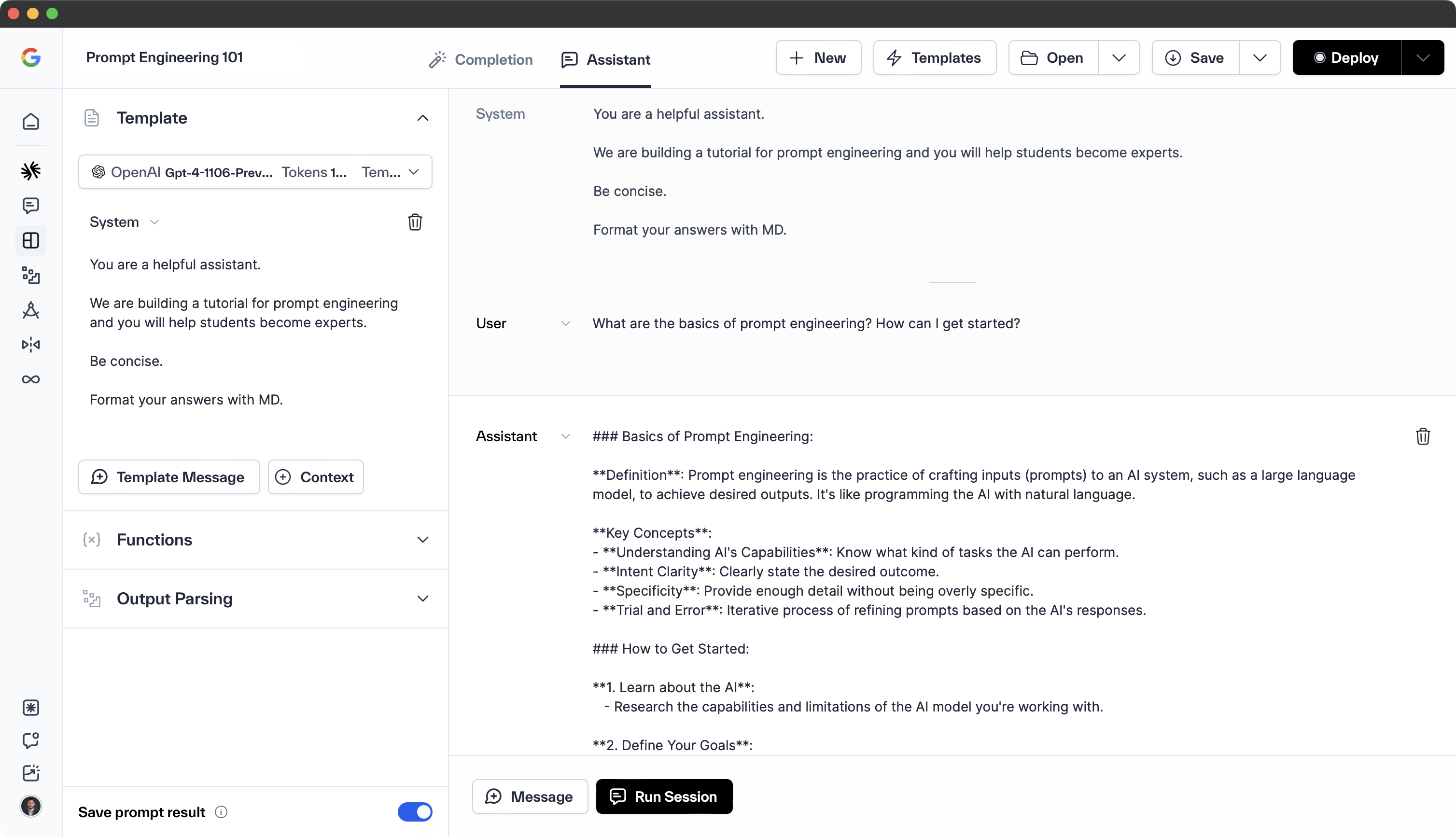

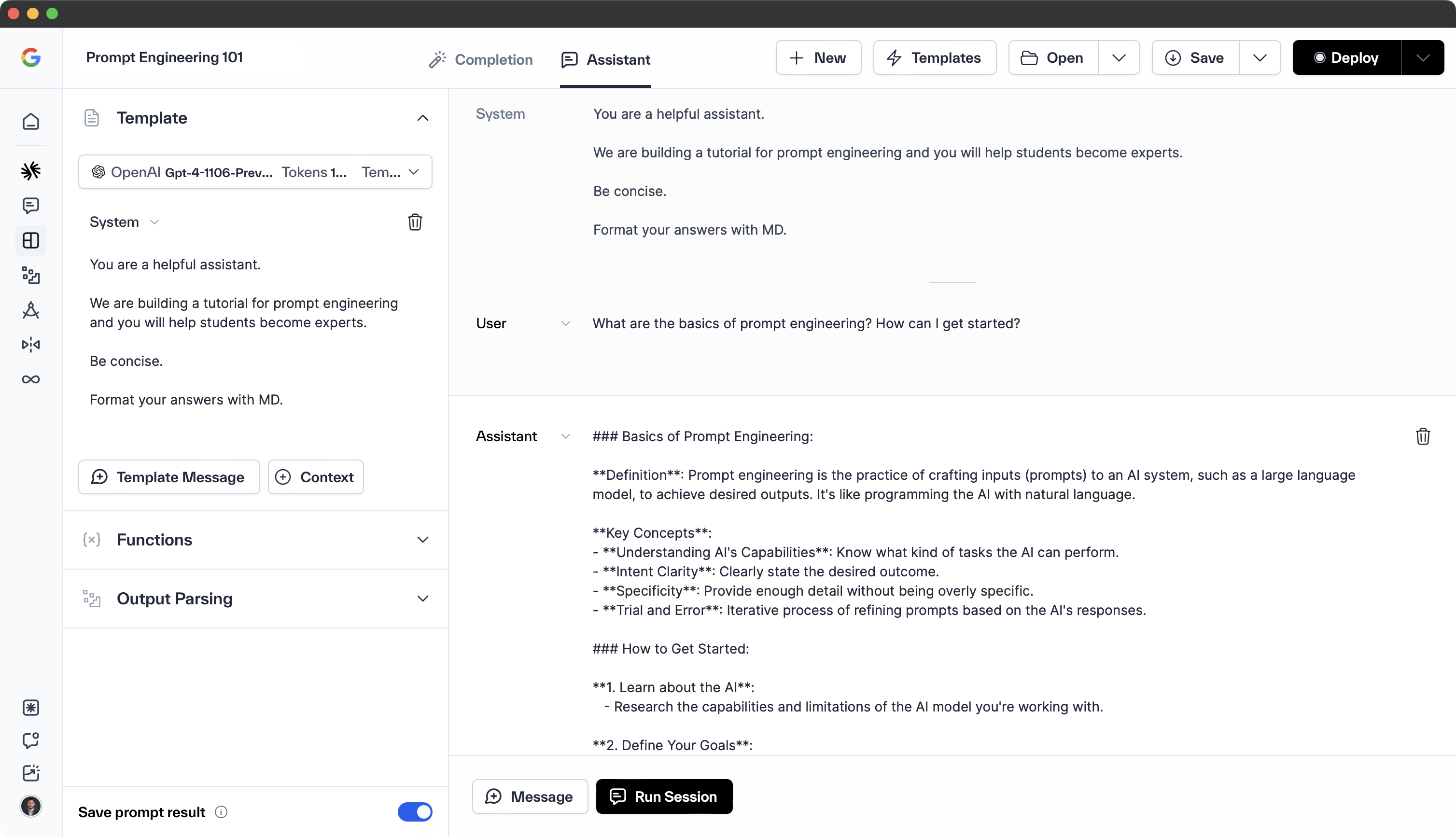

Prompt engineering in Klu Studio

Prompt engineers, while diverse in their backgrounds and skills, share common traits and expertise in their field.

They have strong written and verbal communication skills, an inuition for how foundation models will react to their prompts, knowledge of natural language processing (NLP) principles, and programming skills, such as Python.

Although not a requirement, possessing a degree in computer science or creative writing can significantly enhance your prompt engineering skills, given its blend of both art and science elements.

Mastering prompt design requires understanding key technical elements around instructions, output indicators, and creativity controls. It also necessitates an iterative approach to systematically test prompts based on objective metrics.

Start simple before layering complexity.

This guide primarily focuses on language models and simplifies prompt engineering with practical examples and techniques.

It covers fundamentals such as balancing determinism and creativity in model outputs, understanding the nuances between different frontier models, and exploring advanced prompting methods for complex tasks.

All examples use Klu Studio for prompt management. Additionally, we have prompt engineering tips in our developer documentation.

Let's get started.

Key Takeaways

- Brevity — Start simple with precise wording focused on what to do. Gradually increase complexity incorporating common sense reasoning to handle more advanced tasks. Evaluate feasibility.

- Iterate — Iteratively refine prompts through evaluation, error analysis and testing edge cases. Set clear metrics and systematically improve prompts.

- Experiment — Learn the fundamentals of prompt engineering including key components like instructions, context, input/output indicators that guide AI models. Experiment with these elements to optimize outputs.

- Provide Examples — Show the model exactly what you expect with single-shot, few-shot etc. and select appropriate prompt based performance.

- Compound — Utilize chain-of-thought and chain-of-prompts techniques to build context and enhance outputs over multiple interactions with LLMs.

Prompt Engineering Best Practices

After talking to over a thousand teams building apps powered by LLMs, I began to notice several patterns in their best practices. Regardless of the LLM or use case, here are some of the best practices used by leading teams:

-

Understand the Model's Capabilities — Know what the model does well and its limitations. This knowledge will help you tailor prompts to the model's strengths and weaknesses.

-

Be Specific — Vague prompts will lead to ambiguous or frustrating results. Specific prompts help the model understand the task and generate more accurate and desirable responses.

-

Include Context — Context helps the model understand the scenario better and often provides unique data. Providing relevant background information will improve the quality of the output.

-

Iterate and Refine — Prompt engineering often requires iteration. Most teams get to an acceptable first version after 20-30 minutes of rapid iteration. Use the model's response to guide prompt changes.

-

Avoid Leading Questions — Leading questions can and will bias the model's responses. Ensure prompts are neutral to get unbiased outputs.

-

Employ Role-Play Prompting — Assigning a role to the model will steer its responses in a specific direction and domain, making outputs more relevant.

-

Use Cognitive Verifiers — Incorporate steps that require the model to verify its reasoning or the information it provides, which will enhance the accuracy of the output. Second prompts are often utilized in this scenario.

-

Be Mindful of Length — Overly long prompts can be cumbersome and may not improve the response. In fact, it might make the response worse. Keep prompts concise but informative. Try removing sections from overly long prompts and test the results.

-

Choose Words Carefully — The language used in the prompt can influence the model's tone and style of response. Use language that aligns with the domain.

-

Test and Revise — Don't be afraid to experiment with different prompts and revise based on the outcomes. Prompt engineering is an iterative process.

-

Test with Golden Data Set — Utilize a golden data set to benchmark prompt revisions and measure performance against known outcomes. This approach ensures systematic improvement and consistency in prompt engineering.

-

Use Markers — When providing context or data, clearly indicate where it begins and ends, so the model knows what information to consider.

-

Leverage Existing Templates — Look for prompt templates that have been effective for others and adapt them to your needs. Sometimes you only need to tweak 20% of the prompt to adapt to your use case.

-

Stay Updated — Language models evolve, so keep abreast of the latest developments and adjust your prompt engineering strategies accordingly. Teams using Davinci-003 prompts with GPT-4 are potentially not getting the best from the model.

By following these best practices, you can craft prompts that are more likely to elicit the desired responses from LLMs, making your interactions with these models more effective and efficient.

Check out the Klu prompt engineering documentation for more best practices.

Unlocking LLMs: Why Prompt Engineering Matters

Prompt engineering is essential for leveraging the full capabilities of Large Language Models (LLMs).

By honing the art of prompt engineering, we can guide AI tools to produce more accurate, relevant, and contextually appropriate responses. This skill is pivotal in transforming basic interactions into sophisticated and nuanced exchanges, unlocking new possibilities for a wide array of applications ranging from simple daily tasks to complex problem-solving scenarios.

Here's why prompt engineering matters:

- Guiding Model Behavior — Prompts direct LLMs to generate purposeful and contextually relevant content. Without them, models may produce aimless and unwanted text.

- Enhancing Text Quality and Relevance — Prompts ensure the model outputs meet the required quality and relevance standards, thereby enhancing the caliber and intent-match of the generated content.

- Eliciting Specific Outputs — Prompts can be tailored to extract specific outputs from the AI model, such as text summarization, alternate phrasing suggestions, storyline creation, sentiment analysis, or data extraction.

- Aligning AI and Human Intent — Prompts bridge the gap between AI-generated content and the user's intent, catering to individual preferences and needs.

- Minimizing Inaccuracies and Ambiguity — Clear, concise, and complete prompts prevent the model from making assumptions or providing unclear information, thereby reducing inaccuracies and ambiguities in responses.

Basic Prompting

Large Language Models (LLMs) like OpenAI's GPT-4 can generate human-like text when given a prompt. The prompt is the initial input that the model uses to generate a response.

For example, if the prompt is "Translate the following English text to Spanish:, the model understands that it needs to translate the subsequent text into Spanish.

Translate the following English text to Spanish:

Large Language Models (LLMs) like OpenAI's GPT-4 can generate human-like text when given a prompt. The prompt is the initial input that the model uses to generate a response.

Los Modelos de Lenguaje a Gran Escala (MLGs) como GPT-4 de OpenAI pueden generar texto similar al humano cuando se les da un indicador. El indicador es la entrada inicial que el modelo usa para generar una respuesta.

Similarly, if the prompt is "Write a paragraph about AI," the model will generate a paragraph on that topic. The prompt sets the context and the task for the model. In LLMs, the quality of the output often depends on the clarity and specificity of the prompt.

Write a paragraph about AI

Artificial Intelligence (AI) is a branch of computer science that focuses on creating intelligent machines capable of performing tasks that typically require human intelligence. These tasks include learning, reasoning, problem-solving, perception, and language understanding. Large Language Models (LLMs), a subset of AI, are models trained on a vast amount of text data. They can generate human-like text and are used in a variety of applications, including translation, question answering, and content generation. Examples of LLMs include OpenAI's GPT-4 and Google's Gemini. These AI models are transforming many sectors, such as healthcare, education, and finance, by automating and enhancing various tasks.

Prompt engineering guides AI model behavior, enhances text quality and relevance, elicits specific outputs, aligns AI and human intent, and minimizes inaccuracies and ambiguities.

Harnessing AI Through Prompt Engineering

Prompt engineering, crucial for optimal AI performance, facilitates seamless human-AI communication with large language models like GPT-4. Let's explore AI prompts and learn to craft effective ones for various tasks.

AI Prompts Basics

AI prompts guide language models to desired outputs. Effective prompts consider:

- Instruction — "Generate a summary of this research paper on AI in transportation: {{Document}}" or "Compose an email to Elon on 'The Impact of AI in Autonomous Vehicles'".

Here's the output Mistral 8x7b model for the latter.

Subject: The Impact of AI in Autonomous Vehicles

Dear Elon,

I hope this email finds you well. I wanted to discuss the impact of artificial intelligence (AI) and Large Language Models (LLMs) in the development of autonomous vehicles, particularly in the context of Tesla's Full Self-Driving (FSD) technology.

AI and LLMs have played a significant role in advancing autonomous vehicle technology. Tesla's FSD system, for example, has been trained on billions of frames of video, allowing it to learn from human driving behavior and adapt its algorithms accordingly. This approach has led to impressive capabilities, such as Tesla's AI predicting a driver's destination without being explicitly told.

However, there have been some challenges and controversies surrounding Tesla's FSD technology. For instance, leaked emails have revealed that Tesla's "full self-driving" beta will remain largely unchanged, and the software does not allow for autonomous driving. Additionally, a 2016 video that exaggerated Tesla's self-driving capabilities was found to have been overseen by you, Elon.

Despite these setbacks, Tesla continues to push the boundaries of AI in autonomous vehicles. You have mentioned that you expect Tesla's FSD system to have its own "ChatGPT moment" soon, captivating the public's attention and potentially increasing the value of Tesla vehicles. Furthermore, Tesla is working on developing a humanoid robot prototype, the Tesla Bot, which could further expand the applications of AI in the automotive industry.

In conclusion, AI and LLMs have had a profound impact on the development of autonomous vehicles, particularly in Tesla's FSD technology. While there have been challenges and controversies, the potential benefits and advancements in AI research continue to drive innovation in the field. I look forward to seeing how Tesla's AI and LLM applications evolve and contribute to the future of autonomous vehicles.

Best regards,

[Your Name]

-

Context — "Based on our discussion about AI superalignment, write a blog post outlining the challenges and potential solutions".

-

Input Data — In a coding context, provide a partial code snippet and ask the AI to complete it: "Complete this Python code for a simple neural network: {{Insert Code}}".

-

Output Indicators — "Write a 500-word blog post about AI in cryptography" or "Create a social post for an AI conference". These prompts specify the output format and length, helping the model imitate expected outputs without needing examples.

Experimenting with these elements and tailoring prompts to needs enhances AI interaction.

Precision in Prompts

Clear, concise prompts yield superior results. Focusing on the task and using specific language relevant to the domain or task, guides the LLM to expected responses.

Successful prompt engineering requires iterative refinement. This involves testing prompts with various models and datasets, analyzing outcomes, and adjusting for improvement. Key evaluation considerations include instruction clarity, context relevance, input data adequacy, and output indicator specificity.

Through systematic evaluation and refinement, we can maximize prompt performance and yield desirable results.

Prompt Formats

Prompt formats can vary across different language models. Here's a breakdown of how the prompt formats change across OpenAI, Anthropic, Llama, and generic models:

OpenAI

OpenAI models, such as Davinci-003 and GPT-4, recommend placing instructions at the beginning of the prompt and using ### or """ to separate the instruction and context. With models like GPT-3.5-turbo and GPT-4 supporting Chat Completion messages, we recommend separating the instructions and context as a system message and user message, respectively. The prompts should be specific, descriptive, and detailed about the desired context, outcome, length, format, style, etc. For example:

######

You are an expert summarizer.

Summarize the text below as a bullet point list of the most important points.

######

Text: {text input here}

This format helps the model understand the task better and generate more accurate responses.

Anthropic

Anthropic's Claude model uses a conversational format for prompts. The model was trained using special tokens to mark who is speaking. The \n\nHuman: token is used to ask a question or give instructions, and the \n\nAssistant: token is used for the model's response. For example:

\n\nHuman: {Your question or instruction here}

\n\nAssistant:This format is crucial for Claude as it was trained as a conversational agent using these special tokens. Developers not using this format report unpredictable or worse model performance.

Mistral

Mistral 7b and Mixtral 8x7b use a similar prompt format to Llama, but with some changes. The format is as follows:

When developing a chat application with Llama, mark the start of user input with [INST] and end it with [/INST]. The model's output doesn't require any specific markers. The <s> tag denotes previous conversation pairs. The <SYS> tag specifies the model system message.

<s>[INST] Instruction [/INST] Model answer</s>[INST] Follow-up instruction

[/INST]This format is important as it should match the training procedure. If a different prompt structure is used, the model might not perform as expected, especially after several turns in conversational applications.

Llama 2

Meta's Llama 2 uses a unique format that includes system prompts and user messages. The format is as follows:

When developing a chat application with Llama, mark the start of user input with [INST] and end it with [/INST]. The model's output doesn't require any specific markers. The <s> tag denotes previous conversation pairs. The <SYS> tag specifies the model system message.

<s>[INST] <<SYS>> {{ system_prompt }} <</SYS>> {{ user_message }} [/INST]This format is important as it should match the training procedure. If a different prompt structure is used, the model might not perform as expected, especially after several turns in conversational applications.

Generic

For generic models, the best prompts incorporate relevant details to help the platform understand the specific scenario or context. For example, with a generic prompt like “Write a sales pitch,” you can add specific details like the product, brand, and target audience to get a more tailored response. The more specific, clear, and concise the prompt, the more useful the output.

Translate the following English text to Korean.

Text: {Your text here}

Prompt Engineering Techniques

Effectively building on LLMs hinges on the use of well-crafted prompts that guide the model to generate desired responses. While some use cases work flawlessly out of the box, additional techniques exist to demonstrate how the model should behave, as well as to give the model more space for reasoning or for generating specific tasks.

There are various types of prompts that can be used to communicate with LLMs, including:

- Zero-shot prompting

- One-shot prompting

- Few-shot prompting

- Chain-of-thought prompting

- Chain-of-prompt sequences

Understanding the distinct characteristics and applications of each prompt type allows for the effective selection of the most suitable prompt for a given AI interaction task.

Types of Prompts

Prompt generation techniques range from basic engineering to advanced strategies and collaborative power tips, enabling more effective AI interactions. These techniques can help you create prompts that are precise, context-aware, and optimized for the desired output.

Mastering a variety of prompt generation techniques readies you to handle complex tasks and challenges in the continuously advancing realm of AI.

Zero-shot to Few-shot prompting

Zero-shot prompting provides a language model with a prompt that does not include any examples or prior context. The model is expected to understand the instruction and generate a response based solely on its pre-existing knowledge and training. This approach is used when you want the model to produce an output without giving it any specific examples to follow.

Few-shot prompting, on the other hand, involves providing the model with a small number of examples to guide its response. These examples act as a context for the task at hand, helping the model to better understand what is expected and to generate more accurate and relevant responses. Few-shot prompting is particularly useful for more complex tasks or when dealing with domain-specific content.

The choice between these prompting techniques hinges on the task's complexity, the need for speed versus accuracy, and the availability of quality examples.

Zero-shot prompting

Zero-shot prompting allows a language model to generate responses based solely on its pre-existing knowledge, without any examples. This method is quick but may lack accuracy for complex tasks.

Single-shot prompting

Single-shot prompting provides a single example to guide the model, which can be effective for simple tasks or when a strong example is available.

Few-shot prompting

Few-shot prompting offers multiple examples, usually two to five, giving the model a richer context to produce more precise and relevant responses, making it suitable for complex or nuanced tasks.

Chain-of-thought

Chain-of-thought (CoT) prompting is a technique for enhancing the reasoning capabilities of large language models (LLMs). It involves guiding LLMs through a sequence of intermediate reasoning steps to solve problems, leading to a final answer. This method not only improves LLMs' problem-solving skills but also addresses challenges in complex reasoning tasks.

There are two primary approaches to CoT prompting. The first approach prompts the model with cues like "Let's think step by step," encouraging it to break down the problem. The second approach involves creating manual demonstrations with a question followed by a detailed reasoning process that culminates in an answer. While the latter can yield better results, it is less scalable due to the extensive manual effort required and depends on the skill of the prompt engineer.

To overcome the scalability issue, an automated version of CoT prompting, known as Auto-CoT, has been developed. This method uses LLMs to autonomously generate reasoning chains for a diverse set of questions, thereby constructing demonstrations without manual intervention. Although Auto-CoT may produce errors in reasoning, the variety of generated demonstrations can compensate for these inaccuracies, enhancing the overall effectiveness of the technique.

Chain-of-prompt sequences

Chain-of-prompt sequencing borrows from the principles of Chain-of-thought prompts: give the model more room to think through a task. By breaking down complex workflows into individual actions or tasks, the model is able to focus entirely on one generation at a time. This enables prompt engineers to use few-shot prompt examples to align and shape the generative outputs.

Here is an example of this technique. At Klu, we see leading AI Teams automating the first draft of complex documents using this technique.

Example of Chain-of-Prompt Sequences for Product Strategy Generation Workflow with Contextual Continuity

-

Product Overview Prompt

- "Given the inputs: [product name], [target audience], [key features], generate a detailed overview of the product."

-

Market Analysis Prompt

- "Considering the product overview for [product name], analyze the current market trends in the [industry name] industry and summarize the key factors driving growth."

-

Competitor Analysis Prompt

- "Using the market analysis summary, identify the top three competitors in the [industry name] industry and evaluate their strengths and weaknesses relative to [product name]."

-

Customer Needs Assessment Prompt

- "Based on the product overview and market analysis, describe the primary needs and pain points of customers in the [industry name] industry that [product name] could address."

-

Product Differentiation Prompt

- "Leveraging the insights from the [competitor analysis] and [customer needs assessment], suggest unique selling points for [product name] that would differentiate it in the market."

-

Go-to-Market Strategy Prompt

- "Considering the [product differentiation factors] and the [product strategy overview], propose a go-to-market strategy for [product name] that addresses the identified customer needs and stands out from the competition."

-

Risk Assessment Prompt

- "What potential risks should we consider when launching [product name], based on the [go-to-market strategy], and how can we mitigate them?"

This updated sequence of prompts ensures that each step builds upon the outputs of the previous steps, providing the model with additional context to generate a more coherent and comprehensive product strategy.

Evaluation of Prompts

Evaluating prompts is an essential aspect of prompt engineering, as it helps to determine the effectiveness of the prompts and identify areas for improvement. To optimize AI responses and enhance the overall quality of AI interactions, you can:

- Design metrics to measure the performance of the prompts

- Identify edge cases to ensure the prompts handle a wide range of inputs

- Conduct error analysis and troubleshooting to identify and fix any issues

By following these steps, you can improve the prompts and create a better user experience with AI interactions.

Insights derived from prompt evaluation can be utilized to refine and enhance the design and effectiveness of AI prompts.

Iterative Prompt Development

The process of iterative prompt development involves continuously refining and optimizing prompts through experimentation and evaluation. By starting with simple commands and gradually moving towards complex reasoning, you can develop prompts that are more effective and better suited to a wide range of AI tasks.

The key aspects of this process are:

- Start simple — Begin with clear, straightforward commands and questions to establish a baseline. Build up prompts from there.

- Gradual complexity — Slowly incorporate more nuanced requests, conditional logic, or requirements for reasoning as the AI demonstrates capabilities.

- Experimentation — Try many prompt variations to identify phrasing, structure, or parameters that work best.

- Evaluation — Assess AI responses at each iteration to determine if the prompt edits are improving relevance, accuracy, and insight.

- Optimization — Use what is learned to refine the prompt further. Repeat experiments with the updates.

Progressing through this iterative process offers invaluable insights into the subtleties of prompt engineering, enhancing your capacity to communicate effectively with AI models.

Six OpenAI Prompt Engineering Strategies

In addition to our tips, OpenAI published an exceptional guide to prompt engineering in late 2023. Here are the notes from this guide.

Prompt Engineering for GPT Models

These are effective strategies for eliciting better responses from large language models like GPT-4. While these methods can be combined for enhanced outcomes, we recommend experimenting to discover the most effective approach for your needs.

If a model struggles with a task, trying a more capable model like gpt-4 or gpt-4-turbo may yield better results. Additionally, you can explore example prompts to understand the capabilities of GPT models.

Clear Instructions for Better Results

To improve the quality of model outputs, it's crucial to provide clear and detailed instructions. The model cannot infer your intentions, so specificity is key. If you require concise responses, request them explicitly. For expert-level content, indicate so. If the format is important, demonstrate your preferred structure. The more precise you are, the less the model has to guess, increasing the likelihood of receiving the desired output.

Tactics for Enhanced Responses

- Detail your query for relevance — Include all necessary context to avoid ambiguity and ensure the model's responses are pertinent.

- Persona adoption — Direct the model to assume a specific persona, which can be defined in the system message, to tailor the tone and style of the response.

- Delimiters for clarity — Use delimiters like triple quotes or XML tags to separate different parts of the input, aiding the model in distinguishing between various elements of the prompt.

- Step-by-step instructions — Break down complex tasks into a series of steps, making it easier for the model to process and respond accurately.

- Example provision — When describing a task is challenging, provide examples to demonstrate the desired outcome, especially when a specific style of response is needed.

- Output length specification — Request outputs of a certain length, whether in words, sentences, paragraphs, or bullet points, to control the verbosity of the model's responses.

- Reference text for accuracy — Supplying reference material can guide the model to provide more accurate and less fabricated information.

Complex Task Simplification

Complex tasks often result in higher error rates. By breaking down a task into simpler components or a sequence of subtasks, you can reduce errors and create a more efficient workflow. This modular approach mirrors best practices in software engineering and can be applied to prompt engineering as well.

Reasoning and "Thinking Time"

Just as humans may need time to work through a problem, models benefit from a "chain of thought" approach. Encouraging the model to reason through a problem before providing an answer can lead to more accurate and reliable responses.

Leveraging External Tools

Complement the model's capabilities by integrating the outputs of other tools. For example, use a text retrieval system to inform the model about relevant documents or a code execution engine to perform calculations. This synergy between tools and the model can yield better results.

Systematic Testing for Improvement

To ensure that changes to prompts lead to improvements, it's essential to measure performance systematically. Define a comprehensive test suite and evaluate model outputs against gold-standard answers. This methodical approach allows for confident assessment of whether modifications are truly beneficial.

Prompt Fine-tuning

Fine-tuning and prompt engineering go hand in hand, as both approaches aim to enhance the performance of AI models. Techniques like prompt tuning and prefix tuning can be employed to refine the model's responses, ensuring that they are accurate, relevant, and contextually appropriate.

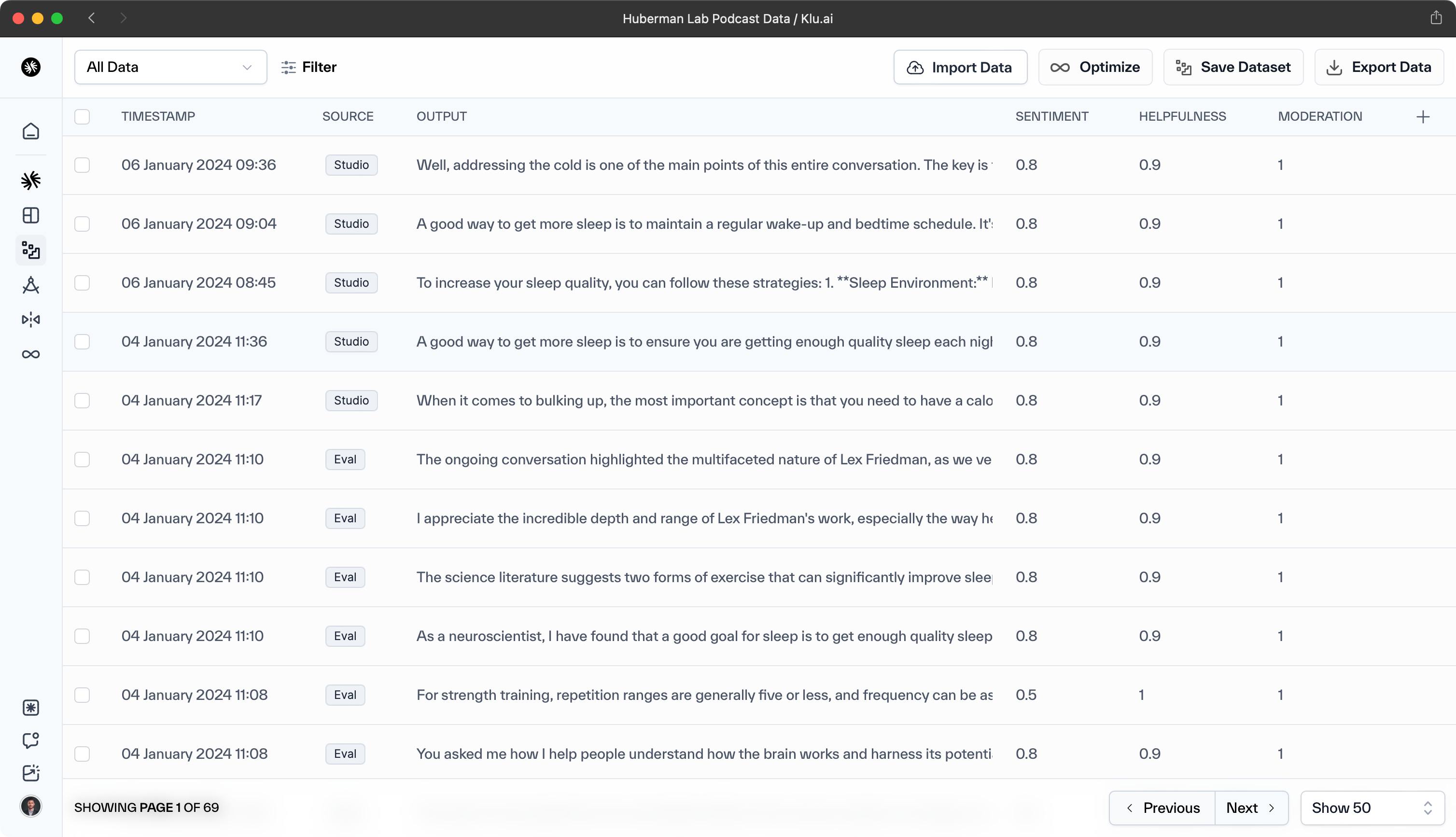

Combining fine-tuning techniques with prompt engineering enables you to fully exploit the potential of AI models, resulting in more engaging and meaningful AI interactions. Teams using Klu are able to rapidly assemble their best data to fine-tune LLMs for their use case.

You can explore a demo fine-tuned model built using Klu at Huberman AI.

Prompt fine-tuning is an approach that enhances LLM performance by combining the principles of prompt engineering with fine-tuning. Unlike traditional fine-tuning, which requires additional training on a task-specific dataset to adjust the model's parameters, prompt fine-tuning refines the model's outputs by using well-crafted prompts as inputs. This method does not necessitate retraining the model or altering its underlying weights, making it a resource-efficient alternative.

This technique is especially useful for organizations with limited datasets, enabling them to customize large-scale models for specific tasks without incurring the high computational costs associated with updating the model's extensive parameters. By strategically crafting prompts, companies can achieve more accurate and relevant AI responses, streamlining the adaptation of AI models for particular applications while conserving computing power and reducing expenses.

What is the difference between prompt tuning and model fine-tuning?

Fine-tuning and prompt tuning optimize large language models (LLMs) differently.

Fine-tuning involves retraining a model on a specific dataset to improve task performance or domain knowledge, requiring substantial computational power and potentially lengthy training times.

In contrast, prompt tuning, or prompt engineering, refines model outputs by crafting detailed prompts without additional model training, utilizing structured data and minimal computing resources.

While fine-tuning adjusts the model's internal parameters, prompt tuning strategically guides the model's existing capabilities, offering a resource-efficient method to tailor AI behavior and responses to specific needs.

The Mechanics Behind Prompt Generation

Understanding the mechanics behind prompt generation is crucial for creating precise and effective prompts. Key mechanics include temperature and top_p control, output length and structure management, and frequency and presence penalties for diverse outputs.

Mastering these mechanics enables you to fine-tune your prompts and reach the desired balance of creativity and determinism in your AI interactions.

Temperature and Top_p: Balancing Creativity and Determinism

Temperature and top_p are two parameters that can be used to control the randomness or determinism of AI-generated prompts. By adjusting these parameters, developers can find the right balance between creativity and determinism in AI-generated prompts, providing a more engaging and valuable AI interaction experience.

For instance, higher temperature values result in more diverse and creative outputs, while lower values encourage more focused and deterministic responses.

Controlling Output Length and Structure

Controlling the output length and structure of AI-generated prompts is essential for ensuring that the generated responses are relevant and coherent. Adjusting the 'max length' parameter allows you to control the length of the output, while specifying stop sequences helps manage the structure of the response.

Adjusting these parameters allows you to shape the AI model's output to fit your desired length and structure, leading to more effective AI interactions.

Frequency and Presence Penalties: Enhancing Diversity

Frequency and presence penalties play a crucial role in enhancing the diversity of AI-generated text. By applying penalties to frequently occurring words or words that have already been used in the text, the model is encouraged to generate more diverse and creative responses. Adjusting these penalties can help strike the right balance between creativity and determinism, making AI-generated text more engaging and valuable for users.

The Iterative Process of Refining AI Prompts

The iterative process of refining AI prompts begins with simple commands and gradually progresses towards complex commonsense reasoning, with experimentation and evaluation being the key steps towards mastery. By continually refining and improving your prompts based on feedback and results, you'll be better equipped to tackle a wide range of AI tasks and challenges.

This process not only improves AI interactions but also aids in the development of more advanced AI systems capable of comprehending and reasoning about the complexities of human language.

From Simple Commands to Complex Commonsense Reasoning

As you progress in your prompt engineering journey, moving from simple commands to complex commonsense reasoning is essential for creating more effective AI interactions. Complex commonsense reasoning involves the AI's ability to understand and reason about subtle aspects of everyday knowledge and situations, ultimately enabling AI systems to take on a wide range of tasks.

By incorporating complex reasoning into AI prompts, you'll enhance the model's understanding and production of natural language interactions, making AI-generated text more engaging and valuable for users.

Experimentation and Evaluation: Key Steps to Mastery

Experimentation and evaluation are crucial steps in the iterative process of refining AI prompts. By setting clear constraints, trying different prompts, incorporating external information, and combining prompts, you can optimize your AI interactions and improve the quality of AI-generated text.

Continual refinement of your prompts based on performance evaluation and identification of improvement areas will help you create more effective and engaging AI interactions, leading to mastery in prompt engineering.

Leveraging Courses and Resources for Prompt Engineering

Succeeding in prompt engineering necessitates learning and experimentation. Leveraging available tools and resources, including guides, online courses, and community collaboration may help accelerate that for you. By tapping into these resources, you can enhance your prompt engineering skills and stay up-to-date with the latest developments in the field.

In addition to this guide, numerous online courses can help you develop the skills needed to excel in prompt engineering. Here are some notable options:

-

Prompt Engineering (OpenAI) — This guide shares strategies and tactics for getting better results from large language models like GPT-4.

-

Prompt Design (Anthropic) — This guide shares strategies and tactics for getting better results from large language models like Claude 2.

-

Prompt Engineering (Coursera) — This course introduces students to the patterns and approaches for writing effective LLM prompts. It covers how to apply prompt engineering to work with LLMs like ChatGPT and provides access to hands-on projects and job-ready certificate programs.

-

dair-ai/Prompt-Engineering-Guide (GitHub) — This GitHub repository contains resources and guides for prompt engineering, including examples and techniques for optimizing prompts to efficiently use language models.

For a more extensive list of prompt engineering courses and certifications, you can explore resources on websites like Class Central, edX, and DeepLearning.AI.

Summary

Prompt engineering is a critical skill for optimizing Large Language Models (LLMs) such as GPT-4, enabling precise and relevant outputs that align with human intent. It involves a cycle of designing effective prompts, evaluating their performance, and refining them through systematic iteration and experimentation. Utilizing tools like Klu Studio can streamline this process.

Prompt engineering involves understanding the model's capabilities and limitations to provide clear, context-rich instructions that avoid bias and assign specific roles to the model. It's about choosing the right words and being both concise and informative to elicit the desired response.

Techniques range from basic to advanced, preparing prompts for complex tasks and ensuring they are optimized for precision and context awareness.

The evaluation of prompts is systematic, involving the design of metrics and test cases to conduct error analysis and enhance relevance and accuracy.

Starting with simple prompts, the development process gradually introduces complexity through extensive experimentation, continual testing, and optimization.

Prompt engineering techniques vary from zero-shot to few-shot, and chain-of-thought, with techniques evolving from basic to advanced. Continuous evaluation and refinement are key to optimizing prompt effectiveness.

In addition to this guide, you can access more specific prompt engineering examples in the Klu Documentation.

Prompt engineering encompasses various techniques, tools, and methodologies, such as:

- Basic Prompts and Prompt Formatting — Crafting precise and context-specific instructions or queries to ensure consistency and coherence.

- N-shot Prompting — Include example outputs in the prompt itself to increase the alignment of generated responses.

- Self-Consistency — Ensuring that the model consistently generates the same output for the same input.

- Domain-Specific Knowledge — Integrating specialized domain knowledge in the prompt or through retrieval to enhance the performance of LLMs.

Prompt engineering is a multidimensional field that requires a blend of technical skills and the ability to translate business objectives into AI-compatible instructions.

A wealth of online courses and community forums are available to support the education of prompt engineers, ensuring skills remain current with industry advancements.

As LLMs continues to evolve, prompt engineering will likely become more nuanced for each model, with new techniques and tools emerging to further enhance and maximize performance.

Mastering the art of prompt engineering allows professionals to tap into the vast capabilities of AI and LLMs. It enables generating customized data and code, performing various analytical tasks, creating engaging content, and building context-aware assistants.

Start exploring, versioning, and evaluating prompts in Klu Studio →

Frequently Asked Questions

What does prompt engineering do?

Prompt engineering is a crucial aspect of optimizing the use of Large Language Models (LLMs) like OpenAI's GPT-4. It involves the process of designing, creating, and refining prompts to effectively instruct the model to produce the desired output. This can significantly enhance the model's performance in specific tasks by guiding it towards more accurate results.

Prompt engineering plays a vital role in leveraging the power of artificial intelligence (AI) in natural language understanding and generation, making it an essential tool in AI application development. Prompt engineering can include techniques such as specifying the format of the answer, explicitly asking the model to think step-by-step or debate pros and cons before settling on an answer, or providing examples of the desired output.

How much can a Prompt Engineer make?

On average, in the United States, a Prompt Engineer can make anywhere from $112,806 to $160,000 annually according to data from Glassdoor and Indeed. However, those with extensive experience and expertise in prompt engineering, especially in the context of LLMs like OpenAI's GPT-4, can command higher salaries, however a salaries will vary based on experience, location, and the complexity of the projects.

There are reports of AI Prompt Engineers in the San Francisco Bay Area earning salaries over $300,000, and the range for a Prompt Engineer's annual salary can be between $175,000 to $335,000. At Anthropic, that salary can go up to $375,000 a year.

It's also worth noting that this is a rapidly evolving field, and the demand for professionals with skills in AI and LLMs is on the rise, which may impact future salary trends.

Is Prompt Engineer an actual role?

Yes and no — depending on the company. At comapmnies using Klu, prompt engineering responsibilities are distributed across various roles, from founders to product managers to AI Engineers. The process is highly collaborative, with domain experts leading the creation of specialized prompts.

For instance, non-technical team members handle prompts related to HR or legal matters, while technical team members manage prompts for code generation. However, companies ranging from Anthropic to McKinsey hire dedicated prompt engineers to spearhead their projects.

What is ChatGPT prompt engineering?

ChatGPT prompt engineering refers to the process of refining and optimizing the prompts or questions submitted to the ChatGPT AI model to generate the most accurate, relevant, and comprehensive responses. This involves careful selection of the input language, phrasing, context, and format to effectively guide the AI's response.

ChatGPT, developed by OpenAI, is a Large Language Model (LLM) trained using Reinforcement Learning from Human Feedback (RLHF). It uses patterns in the data it was trained on to predict and produce textual responses. The aim of prompt engineering is to make these responses as useful and contextually accurate as possible. This process is crucial in leveraging the capabilities of LLMs like ChatGPT to the fullest extent, whether in customer service, content generation, or other applications.

How do I start learning prompt engineering?

To start learning prompt engineering, first, familiarize yourself with AI and Large Language Models (LLMs) like OpenAI's GPT-4. Understand the basics of natural language processing and machine learning to familiarize yourself with the concepts and vocabulary. Next, learn how to structure prompts effectively. This includes understanding the desired outcome, inputting explicit instructions, and setting the context. Experiment with different types of prompts such as questions or statements. Finally, analyze the response of the model and iterate the prompts accordingly. Regular practice and experimentation are key to mastering prompt engineering.

What are some types of prompts used in AI interactions?

Prompts used in AI interactions range from zero-shot, one-shot, few-shot, to chain-of-thought prompting, each offering unique characteristics and applications.

Style

- Question Prompts — These prompts involve asking a specific question, prompting the AI model to generate a related response.

- Instruction Prompts — These prompts provide explicit instructions for the AI model to follow, specifying the desired outcome. often lack detail or structure.

- Direct Prompting (Zero-shot) — This is the simplest type of prompt, providing no examples to the model, just the instruction.

- Prompting with Examples (One-, Few-, and Multi-shot) — These prompts involve providing one or more examples of the desired output to guide the AI model.

- Roleplay Prompts — These prompts involve establishing enough context for success, allowing the AI to fine-tune an outcome-aligned prompt simply by adding a name.

- Creative Prompts — These prompts involve providing specific descriptions, shapes, colors, textures, patterns, and artistic styles for AI-generated art.

Structure

- Zero-Shot Prompts — These prompts are simple questions or requests that do not provide extra context or guidance to the AI. While they can yield basic answers, these prompts often lack detail or structure.

- Single-Shot Prompting — This method involves giving the AI a single example of the desired output, usually in the form of a question-answer pair. By showing the AI model this pertinent background data, you assign a character to the AI that matches the response you want.

- Chain-of-Thought Prompting — These prompts involve breaking down complex tasks into a sequence of simpler prompts, instructing the model to evaluate or check its own responses before producing them.

Using these prompts, you can guide AI models to generate more informative and detailed responses, improving the overall quality of AI-generated content.

How can engaging with the community enhance my prompt engineering skills?

For prompt engineers, active participation in communities and collaboration with peers is crucial for skill development and staying updated with the latest advancements. Engaging with online communities such as Reddit provides opportunities to connect with fellow engineers, exchange experiences, and learn from collective successes and challenges. Such collaborations empower prompt engineers to navigate the complexities of their field and craft more effective AI interactions.

Is there a way to automate prompt engineering?

Yes, however, performance improvements are minor, and take excessive time and tokens. A leading automation technique is APE: Automated Prompt Engineering.

Automated Prompt Engineering (APE) is a framework for generating and selecting effective instructions for large language models (LLMs). It aims to optimize the quality of the prompt used to steer the model, as task performance depends significantly on the quality of the prompt. APE works by treating the instruction as a "program" and optimizing it through a search over a pool of instructions.

Key aspects of APE include:

-

Inputs — APE generates optimized prompts based on three inputs: the expected input data, the desired output, and a prompt template.

-

Process — APE performs its task in two steps. First, a LLM is used to generate a set of candidate prompts. Second, a prompt evaluation function considers the quality of each candidate prompt and returns the prompt with the highest evaluation score.

-

Applications — APE-engineered prompts can be applied to improve few-shot learning performance and steer models toward truthfulness and/or informativeness.

Experiments on 24 NLP tasks show that APE-generated instructions outperform previous methods. APE provides a systematic way to generate effective text prompts for LLMs, making it a valuable tool for researchers and developers working with these models.

What are some challenges that prompt engineering faces, and how can they be addressed?

Prompt engineering, like any evolving field, has its own set of challenges. Two significant ones include mitigating security risks like prompt injection and ensuring the ethical use of AI models. To address these challenges, it's essential to employ countermeasures such as input filtering, output filtering, and learning from human feedback to safeguard against prompt injection attacks.

By incorporating ethical considerations like fairness, accountability, transparency, and privacy into the design and development of AI prompts, and utilizing techniques like Tree of Thoughts (ToT) prompting, we can promote the responsible use of AI models and develop consistent explanation trees enhancing the quality and coherence of AI-generated text.

What skills are necessary for a successful career as a prompt engineer?

A career in prompt engineering requires a combination of technical and non-technical skills, including expertise in AI, machine learning, and natural language processing, strong communication, problem-solving, and analytical abilities, proficiency in programming languages, and a willingness to learn and adapt to new technologies.

Technical Skills: Understanding of AI, machine learning, and natural language processing is fundamental, as it allows for the creation of effective prompts and comprehension of model responses. Proficiency in programming, particularly in languages like Python, and the ability to analyze data enhance the ability to optimize prompts and outputs.

Linguistic and Creative Skills: A strong grasp of language structure and the ability to craft engaging prompts are essential. These skills ensure clarity and contextual relevance, tailoring interactions to diverse applications and audiences.

Problem-Solving and Iterative Development: The role demands the ability to dissect complex issues and iteratively refine prompts to achieve accurate and relevant AI responses. This involves a cycle of testing, analysis, and adjustments.

Soft Skills: Effective communication and collaboration with interdisciplinary teams are crucial. Ethical awareness is also paramount, as AI's societal impact necessitates consideration of biases and ethical implications in content generation.

Learning and Adaptability: With the rapid evolution of AI, continuous learning is vital to stay abreast of new developments and techniques. Formal education in fields like computer science or linguistics can be beneficial, but practical experience, whether through internships, entry-level roles, or personal projects, is equally valuable.

As the landscape of prompt engineering careers continues to evolve with advancing AI, staying current with the latest developments is essential for success in this exciting field.

Organizations like Anthropic, Aldrin, Boston Children's Hospital, and CloudWalk are actively seeking professionals skilled in AI prompt engineering, making it an exciting time to pursue a career in this field.

prompt engineering guide

prompt engineering

large language models

language models

prompt engineering skills

complex tasks

generative ai tools

directional stimulus prompting

common and complex tasks

prompt engineer

ai model

generative ai

human level prompt engineers

prompt engineers

generated knowledge prompting

new prompt engineering guide

engineering skills

advanced prompting techniques

prompt engineering techniques

input data

prompt injection attacks

large language model

language model

natural language processing

tools related

all the latest papers

relatively new discipline

following prompt

create prompts

desired output

prompt engineering important

effective prompting techniques

inconsistent explanation trees

text to image models

writing prompts

same prompt

domain knowledge

prompting techniques

complex commonsense reasoning

least to most prompting

expected response

wall street journal

in context learning

image prompting

large language models llms

ai tools

final answer

detailed instructions

generative ai model

optimizing prompts

most commonly reached conclusion

complexity based prompting

natural language text

other tools

design robust

design robust

desired response

commonly reached conclusion

research topics

existing code

chain of thought rollouts

stable diffusion

desired keywords

learning guides

arithmetic reasoning

maieutic prompting

specific task

high demand

high interest

engineering guide

latest papers

same way

word order

first prompt

directional stimulus

multiple steps

question answering

relevant facts