Mistral Large

by Stephen M. Walker II, Co-Founder / CEO

What is Mistral Large?

Mistral Large is a highly optimized large language model developed by Mistral AI. It is part of a suite of models that includes Mistral Small, which is optimized for latency and cost. Mistral Large incorporates innovations such as RAG-enablement and function calling, similar to Mistral Small. These models are part of Mistral AI's simplified endpoint offering, which aims to provide a range of solutions from lightweight to flagship models.

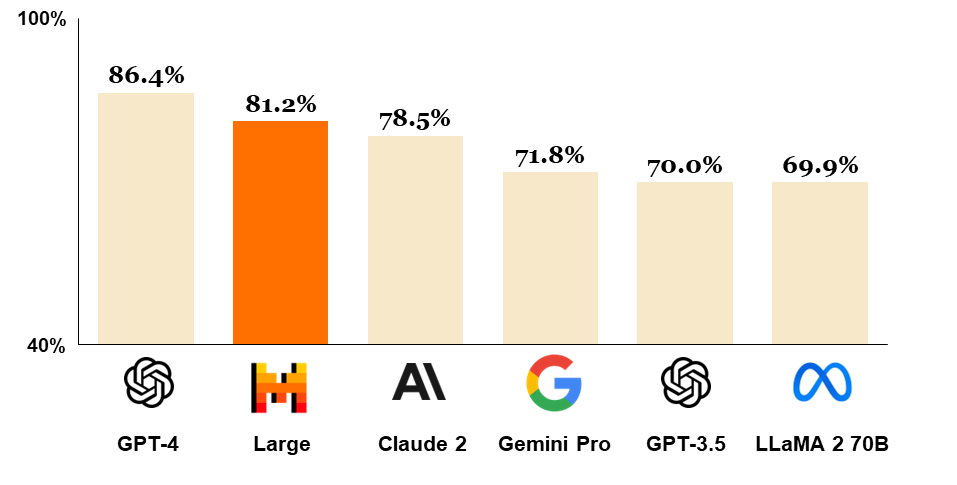

Mistral Large is a text generation model that performs strongly on complex multilingual reasoning tasks, including text understanding, transformation, and code generation. According to the benchmarks Mistral AI published at launch, it ranked as the world's second-best model generally available through an API, behind GPT-4.

Key features of Mistral Large include:

- Multilingual fluency in English, French, Spanish, German, and Italian, offering a deep understanding of grammar and cultural nuances.

- A 32K token context window for accurate information retrieval from extensive documents.

- Advanced instruction-following capabilities that allow for custom moderation policy design, demonstrated in the system-level moderation of le Chat.

- Native function calling ability, combined with a constrained output mode on la Plateforme, facilitating large-scale application development and technological stack modernization.
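To make the 32K-token context window concrete, the sketch below splits a long document into chunks that fit a token budget. It assumes a window of 32,768 tokens and a rough heuristic of four characters per token. Both values, and the helper names, are illustrative assumptions. A real application should count tokens with the model's own tokenizer.

```python
# Rough sketch: split text into chunks that fit a token budget.
# ASSUMPTIONS: 32,768-token window and ~4 characters per token (heuristic only).
CONTEXT_WINDOW_TOKENS = 32_768
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def chunk_for_context(text: str, reserved_tokens: int = 2_048) -> list[str]:
    """Split text on paragraph boundaries so each chunk fits the budget.

    reserved_tokens leaves room for the instructions and the model's reply.
    A single paragraph longer than the budget is hard-split by characters.
    """
    budget_chars = (CONTEXT_WINDOW_TOKENS - reserved_tokens) * CHARS_PER_TOKEN
    chunks: list[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        # Hard-split any paragraph that cannot fit in a single chunk.
        while len(paragraph) > budget_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(paragraph[:budget_chars])
            paragraph = paragraph[budget_chars:]
        candidate = paragraph if not current else current + "\n\n" + paragraph
        if len(candidate) <= budget_chars:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks
```

Splitting on paragraph boundaries keeps related sentences together, which tends to matter more for retrieval quality than filling every chunk to the limit.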

Mistral Large Capabilities

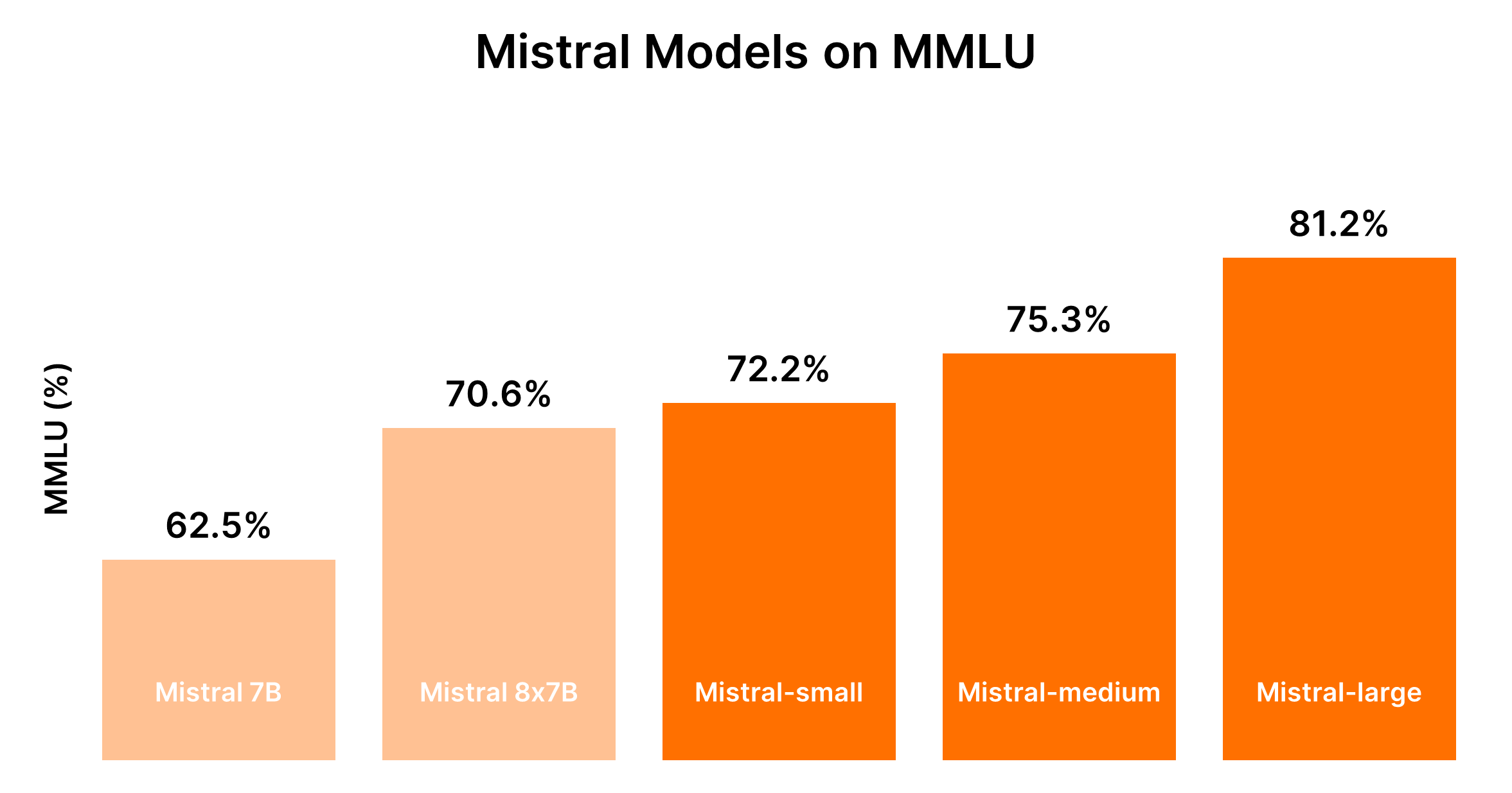

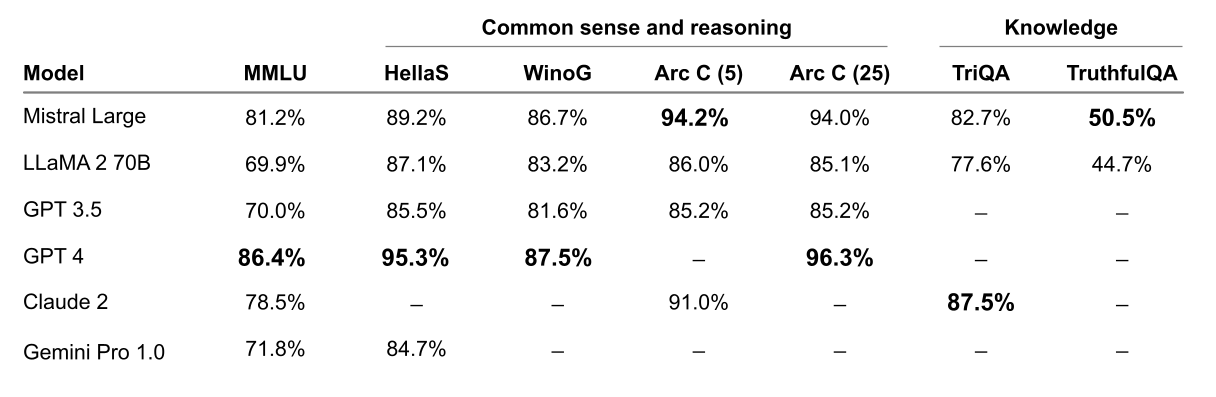

Mistral Large's capabilities have been rigorously evaluated against the industry's leading Large Language Models (LLMs) across a variety of benchmarks to determine its performance in reasoning, knowledge, multilingual tasks, math, and coding.

In reasoning and knowledge, Mistral Large has proven its exceptional ability to understand and process complex concepts, significantly outperforming other pre-trained models in benchmarks such as MMLU, HellaSwag, WinoGrande, ARC Challenge, TriviaQA, and TruthfulQA.

When it comes to multilingual capabilities, Mistral Large demonstrates unparalleled proficiency. It significantly exceeds the performance of LLaMA 2 70B in benchmarks conducted in French, German, Spanish, and Italian, showcasing its versatility and adaptability in understanding and generating content in multiple languages (Figure 3).

In the domain of math and coding, Mistral Large stands out for its superior performance. It leads in a suite of popular benchmarks designed to test coding and math skills, including HumanEval, MBPP, and GSM8K, highlighting its effectiveness in technical tasks.

These findings collectively underscore Mistral Large's leading position in the LLM landscape, showcasing its broad and versatile capabilities across a wide range of tasks and languages.

Function calling and JSON format

Function calling allows developers to use Mistral endpoints alongside their own tools for advanced interactions with internal code, APIs, or databases. Detailed information is available in Mistral AI's function calling guide.
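As a rough illustration of the round trip, the sketch below describes one tool to the model and then executes whatever tool calls come back. It assumes the OpenAI-style `tools` schema and `tool_calls` response shape that Mistral's chat completions API is understood to use. The `get_order_status` tool and the `mistral-large-latest` model name are hypothetical or assumed, so check field names against the current API reference before relying on them.

```python
import json


# Hypothetical local tool the model may ask the application to run.
def get_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}  # stand-in for a DB lookup


LOCAL_TOOLS = {"get_order_status": get_order_status}

# JSON Schema description of the tool, sent along with the request.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]


def build_request(user_text: str) -> dict:
    """Request body for a chat completion that is allowed to call tools."""
    return {
        "model": "mistral-large-latest",  # assumed model identifier
        "messages": [{"role": "user", "content": user_text}],
        "tools": TOOL_SPECS,
        "tool_choice": "auto",
    }


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each requested tool and return 'tool' role messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        # Arguments arrive as a JSON-encoded string, not a dict.
        args = json.loads(call["function"]["arguments"])
        output = LOCAL_TOOLS[name](**args)
        results.append({
            "role": "tool",
            "name": name,
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results
```

The messages returned by `run_tool_calls` would be appended to the conversation and sent back so the model can phrase a final answer from the tool output.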

The JSON format mode ensures language model outputs are in valid JSON, facilitating easier integration and manipulation of data within developers' pipelines.

These features are currently exclusive to the mistral-small and mistral-large models. Mistral AI plans to extend them to all endpoints soon and to add more detailed format options.
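A minimal sketch of using the JSON format mode follows. It assumes the mode is switched on with a `response_format` field set to `{"type": "json_object"}` and that the model is addressed as `mistral-large-latest`. The `sentiment` and `summary` keys are hypothetical. JSON mode targets syntactically valid output, so the caller still has to check that the expected keys are present.

```python
import json


def build_json_mode_request(text: str) -> dict:
    """Request body asking for a JSON object describing the text."""
    return {
        "model": "mistral-large-latest",  # assumed model identifier
        "response_format": {"type": "json_object"},  # assumed JSON-mode switch
        "messages": [
            {
                "role": "user",
                "content": (
                    "Return a JSON object with keys 'sentiment' and 'summary' "
                    "for the following text:\n" + text
                ),
            }
        ],
    }


def parse_reply(content: str, required_keys=("sentiment", "summary")) -> dict:
    """Parse the model's reply and verify that the expected keys exist."""
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data
```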

Partnership with Microsoft

Microsoft has formed a multi-year partnership with Mistral AI, a French AI startup, to enhance its AI portfolio on the Azure cloud platform.

This move, expanding beyond its investment in OpenAI, includes integrating Mistral AI's large language models (LLMs) into Azure and acquiring a minority stake in Mistral AI. These models, akin to OpenAI's, excel in processing and generating human-like text.

The first model to be available on Azure will be Mistral's proprietary model, Mistral Large, with plans to expand to other cloud platforms.

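Mistral AI, founded by former Meta and Google DeepMind researchers, will use Microsoft's investment to support its commercial growth, market expansion, and the development of AI models for Europe's public sector. Press reports put the investment at about €15 million; the roughly $2.1 billion figure often quoted alongside the deal reflects Mistral AI's reported valuation, not the size of Microsoft's stake. Additionally, Mistral AI plans to introduce a ChatGPT-like chatbot, "Le Chat."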

This partnership emerges as Microsoft faces regulatory scrutiny in Europe and the U.S. over its OpenAI investments, underscoring its commitment to diversifying its AI offerings and supporting open-source AI initiatives.

How to Access Mistral Large?

There are three ways to access Mistral offerings:

- La Plateforme — Hosted on Mistral's secure European infrastructure, this platform allows developers to build applications using Mistral's model library.

- Azure Integration — Access Mistral Large via Azure AI Studio and Azure Machine Learning. This option has already proven successful with beta testers.

- Self-deployment — For highly sensitive applications, deploy Mistral models in your own environment. This option includes access to the model weights.
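For the first option, a request to la Plateforme might look like the sketch below, which uses only the Python standard library. The endpoint URL, the `mistral-large-latest` model name, and the `MISTRAL_API_KEY` environment variable are assumptions that are not stated in this article, so confirm them against Mistral's API documentation.

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"  # assumed endpoint; check the docs


def make_request(prompt: str, api_key: str,
                 model: str = "mistral-large-latest") -> urllib.request.Request:
    """Build (but do not send) an authenticated chat completion request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first choice's text (needs network access)."""
    request = make_request(prompt, os.environ["MISTRAL_API_KEY"])
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes the payload easy to inspect or log before any network call is made.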

Mistral Large Use Cases

Mistral Large excels in a wide range of NLP tasks, including:

- Code Debugging — Mistral Large supports developers with precise code suggestions and error identification. It does this by reasoning about the code it is shown, not by executing it or giving real-time feedback.

- Translation & Summarization — Provides robust support for translating multiple languages and efficiently condenses text to highlight key points.

- Text Generation & Classification — Capable of generating coherent text from prompts and accurately categorizing content into specific groups.

- Customer Service Excellence — Mistral Large drives superior customer service by offering immediate, precise, and tailored responses via automated chatbots, boosting customer satisfaction and engagement through quick and effective query resolution.

- Content Creation & Education — Enhances marketing content creation and automates educational processes, including grading and learning enhancements.

These applications underscore Mistral Large's capability to handle diverse tasks involving text understanding, processing, generation, and user interaction across multiple domains.
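As one concrete case, text classification can be sketched as a system prompt that fixes the label set plus a small post-processing step. The labels and helper names below are hypothetical, and the messages are meant to be sent through whichever access route is in use.

```python
LABELS = ("billing", "technical", "other")  # hypothetical categories


def build_classification_messages(ticket: str) -> list[dict]:
    """Chat messages instructing the model to answer with one label only."""
    system = (
        "Classify the user's message into exactly one of: "
        + ", ".join(LABELS)
        + ". Reply with the label only."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": ticket},
    ]


def normalize_label(reply: str) -> str:
    """Map a free-text reply onto a known label, defaulting to 'other'."""
    cleaned = reply.strip().strip(".\"'").lower()
    return cleaned if cleaned in LABELS else "other"
```

Falling back to a default label keeps downstream code from breaking when the model replies with something outside the expected set.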