OpenAI GPT-5

by Stephen M. Walker II, Co-Founder / CEO

OpenAI GPT-5 Model Card (Forecast)

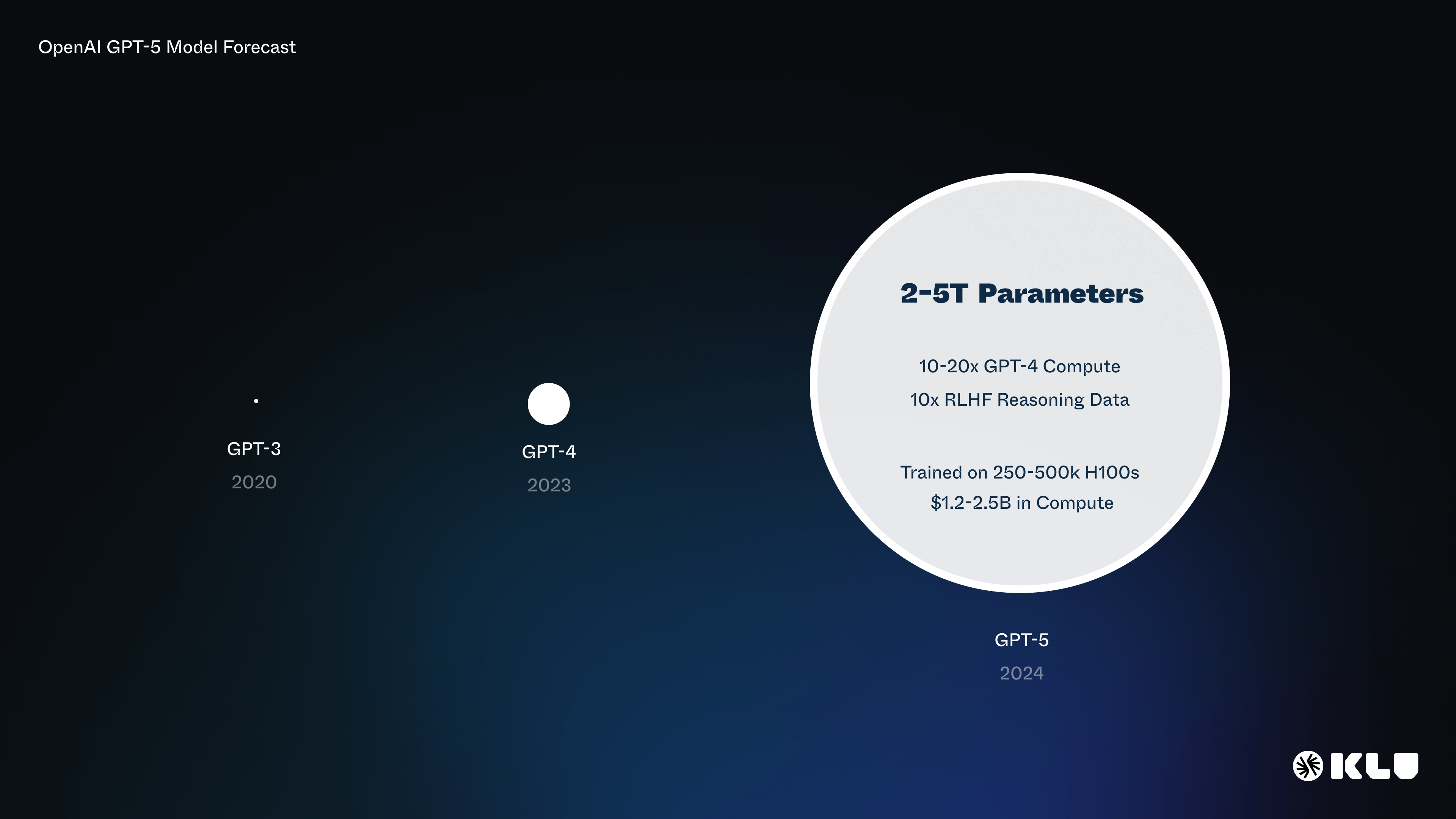

| Attribute | Details |
|---|---|
| Organization | OpenAI |
| Model name | GPT-5 |
| Model type | Frontier Multimodal Reasoning Model |
| Codename | Q* (Strawberry) / Orion / Gobi / Arrakis (GPT-4.5) |
| Scaling Principle | 10-20x GPT-4 Compute, 10x RLHF/AIF Reasoning Data |
| Parameter count | 2T-5T (2,000B-5,000B) |
| Training Hardware | 250-500k H100s |
| Dataset size (tokens) | 40T-100T (80TB-200TB) |
| Training data end date | Dec/2023 |
| Training start date | Jan25/2024 |
| Total training time | 3 Months |
| Training cost | 1.25-2.5 Billion USD |
| Release date (public) | Nov/2024 |
| Paper | TBA |
| Playground | TBA |
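The dataset row pairs a token count with an on-disk size, and the two ranges imply a fixed bytes-per-token ratio. The sketch below makes that ratio explicit. It assumes 1 TB = 10^12 bytes, and the function name `bytes_per_token` is purely illustrative; the inputs are the forecast figures from the table, not measured values.

```python
# Sanity check on the forecast table: token count vs. storage size.
# All inputs are the article's forecast figures, not official numbers.

TB = 1e12  # assumption: decimal terabytes (1 TB = 10^12 bytes)

TOKENS_LOW, TOKENS_HIGH = 40e12, 100e12   # 40T-100T tokens
BYTES_LOW, BYTES_HIGH = 80 * TB, 200 * TB  # 80TB-200TB


def bytes_per_token(total_bytes: float, total_tokens: float) -> float:
    """Average storage per token implied by a (size, token count) pair."""
    return total_bytes / total_tokens


ratio_low = bytes_per_token(BYTES_LOW, TOKENS_LOW)
ratio_high = bytes_per_token(BYTES_HIGH, TOKENS_HIGH)

print(f"Low end:  {ratio_low:.1f} bytes per token")
print(f"High end: {ratio_high:.1f} bytes per token")
```

Both ends of the range work out to the same ratio, so the size column appears to be derived from the token column by a flat multiplier rather than estimated independently.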

What is OpenAI GPT-5?

OpenAI GPT-5 is the anticipated successor to the GPT-4 language model developed by OpenAI. As of now, there are no official details about GPT-5's capabilities, but it's expected to be a significant upgrade from its predecessor, potentially redefining AI and approaching Artificial General Intelligence (AGI).

Speculation about GPT-5 suggests that it will be more powerful and capable than GPT-4, with improved abilities to generate realistic and coherent text and perform more complex tasks. It is also expected to have enhanced multilingual capabilities and to be a multimodal model that can handle text, audio, images, video, and in-depth data analysis.

OpenAI's focus has evolved to emphasize the development of AGI (Artificial General Intelligence), suggesting a broader transformation in the company's approach. The tech community is eagerly awaiting further details about GPT-5, and there is a strong interest in how evolving technologies will be balanced with ethical considerations.

Strawberry

OpenAI's latest endeavor, code-named Strawberry, represents a significant leap in AI reasoning capabilities, aiming to enhance the performance of its chatbot business. Strawberry, previously known as Q*, is designed to tackle complex problems, including those in mathematics and programming, which current AI models struggle with. This new model is not only expected to improve the reasoning abilities of the upcoming Orion large language model but also to generate high-quality synthetic data for training purposes. By doing so, Strawberry could help reduce the hallucinations often seen in AI responses, leading to more accurate and reliable outputs.

The development of Strawberry is part of OpenAI's strategy to maintain its competitive edge in the rapidly evolving field of conversational AI. With its ability to solve intricate problems and provide insights into subjective topics like marketing strategies, Strawberry is poised to open new revenue streams and applications for AI. As OpenAI continues to grow its business, the successful launch of Strawberry could play a crucial role in solidifying its position as a leader in AI technology, especially as it races against well-funded rivals in the industry.

Orion

Orion, OpenAI's next flagship large language model, is being developed with the aid of Strawberry, a significant technical breakthrough. Strawberry's advanced reasoning capabilities are crucial for generating high-quality training data, which is expected to reduce the hallucinations or errors that AI models typically produce. By providing more accurate examples of complex reasoning, Strawberry enhances the learning process for Orion, potentially leading to a more reliable and efficient AI model.

Moreover, OpenAI's strategic demonstration of Strawberry to American national security officials underscores the importance of transparency and collaboration with policymakers. This move not only aims to secure the technology against foreign adversaries but also sets a precedent for AI developers in addressing national security concerns. As OpenAI continues to refine Strawberry through distillation, it may soon be integrated into chat-based products, offering improved reasoning capabilities for applications that do not require immediate responses, such as noncritical coding error fixes in GitHub.

GPT-5 Training Timeline

OpenAI's CEO, Sam Altman, confirmed in April 2023 that the company was not training GPT-5 at that time. However, by November 2023, Altman confirmed that the company had started working on GPT-5. Despite this, as of January 2024, there is no official release date for GPT-5.

Greg Brockman tweeted on January 25, 2024:

Building at OpenAI is an exercise in maximally harnessing each available computing resource, scientifically predicting and understanding the resulting systems, searching for new ideas or old ones that are now ready to work, and scaling beyond precedent.

If this tweet hints that training is beginning now, a three-month training run would likely finish in April. Model testing and red teaming would then be expected to begin and continue throughout the summer.

The development of GPT-5 is speculated to be in its early stages, with OpenAI focusing on setting up the training approach, coordinating annotators, and curating a dataset. OpenAI is also using a web crawler named GPTBot to collect a robust dataset from publicly available information online, which will likely enhance the quality and diversity of the training data for GPT-5.

Based on these signals and OpenAI's past performance, we believe the following timeline is viable:

- Scaling Principle: 10-20x GPT-4 scale (GPT-4 baseline: 25k A100 80GB GPUs)

- GPT-5 Training Run: December 2023 to February 2024 (±2 Months)

- GPT-5 RLHF Run: February 2024 to April 2024 (±2 Months)

- GPT-5 Red Teaming: April 2024 to October 2024 (±2 Months)

- GPT-5 Training Hardware: 250-500k Nvidia H100s

- GPT-5 Training Cost: $1.25-2.5 Billion USD

- GPT-5 Announcement: OpenAI DevDay 2 (November 2024)

These estimates are corroborated by leaked source information shared by Martin Shkreli.
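The hardware, duration, and cost figures in this forecast can be cross-checked against each other by computing the GPU-hour price they imply. The sketch below is a rough consistency check, not a cost model. It assumes that "3 months" means 90 days, that every GPU runs around the clock, and that the whole training cost is GPU time. None of these assumptions comes from OpenAI, and the function names are illustrative.

```python
# Back-of-the-envelope check: what hourly price per H100 do the
# forecast's hardware, duration, and cost figures imply?
# Assumptions (not from OpenAI): 90-day run, 24/7 utilization,
# and the entire cost attributed to GPU time.

HOURS_PER_DAY = 24
TRAINING_DAYS = 90  # assumption: "3 months" taken as 90 days


def gpu_hours(num_gpus: int, days: int = TRAINING_DAYS) -> int:
    """Total GPU-hours consumed if every GPU runs for the full period."""
    return num_gpus * days * HOURS_PER_DAY


def implied_rate(cost_usd: float, num_gpus: int, days: int = TRAINING_DAYS) -> float:
    """USD per GPU-hour implied by a total cost and a cluster size."""
    return cost_usd / gpu_hours(num_gpus, days)


# Forecast ranges: 250k-500k H100s, 1.25-2.5 billion USD.
low_rate = implied_rate(1.25e9, 250_000)
high_rate = implied_rate(2.5e9, 500_000)

print(f"GPU-hours (low end):  {gpu_hours(250_000):,}")
print(f"GPU-hours (high end): {gpu_hours(500_000):,}")
print(f"Implied rate (low end):  ${low_rate:.2f} per GPU-hour")
print(f"Implied rate (high end): ${high_rate:.2f} per GPU-hour")
```

Under these assumptions both ends of the range imply the same rate, roughly $2.31 per GPU-hour. This suggests the cost range scales linearly with the GPU count rather than being an independent estimate.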

January 17 2024: Sam Altman GPT-5 Davos WEF Comments

During the World Economic Forum in Davos, OpenAI CEO Sam Altman discussed the future of AI and the GPT-5 model. He highlighted the potential of AI to expedite scientific breakthroughs and transform knowledge work, including tasks like email management. Altman emphasized that launching GPT-5 is his main focus, though he remained non-committal on whether it would exclusively use licensed and attributed content.

"In envisioning the future of AI, I see a necessity for products to support extensive individual customization. This level of personalization, while potentially unsettling for some, is crucial as AI will tailor responses based on the unique values and preferences of each user, and possibly their geographical location. There are clear ethical boundaries we won't cross, such as any directives that harm individuals based on identity. However, there may be cultural nuances that challenge our personal beliefs but are considered acceptable elsewhere. As creators, we must navigate these complexities, understanding that the application of AI will vary significantly among users with different values. The distinction between countries per se is less critical than aligning with the diverse values of individuals worldwide," - Sam Altman, Davos 2024

He confirmed OpenAI's decision to permit military applications of its AI models to support the U.S. government, acknowledging the need for a cautious approach in some areas. Altman pointed out the necessity for significant energy innovations to meet the growing demands of AI technologies.

Furthermore, Altman announced a collaboration with Common Sense Media to develop AI usage guidelines and educational resources aimed at creating "family-friendly" AI models. At the event, he also thanked his team and shareholders for their support following his brief dismissal from OpenAI in November 2023.

January 2024: Altman Discussion With Gates

In a recent episode of the Unconfuse Me podcast, OpenAI CEO Sam Altman, who was speaking with Microsoft co-founder Bill Gates, confirmed that video capabilities are being considered for ChatGPT. This development follows the successful integration of image and audio features, which were well-received by users.

Altman emphasized that the current priority is enhancing the reasoning abilities of GPT-4, as it currently has limited reasoning capacity. Additionally, efforts are underway to improve ChatGPT's reliability in providing accurate responses, addressing concerns over a perceived decline in the chatbot's performance and its impact on user satisfaction.

"Right now, GPT-4 can reason in only extremely limited ways. Also, reliability. If you ask GPT-4 most questions 10,000 times, one of those 10,000 is probably pretty good, but it doesn't always know which one, and you'd like to get the best response of 10,000 each time, and so that increase in reliability will be important. Customizability and personalization will also be very important. People want very different things out of GPT-4: different styles, different sets of assumptions. We'll make all that possible, and then also the ability to have it use your own data. The ability to know about you, your email, your calendar, how you like appointments booked, connected to other outside data sources, all of that. Those will be some of the most important areas of improvement." - Sam Altman

In the same episode, Altman discussed the evolution of ChatGPT and the forthcoming GPT-5. He indicated that GPT-5 will be a multimodal model capable of processing speech, images, code, and video, significantly broadening the scope of generative AI applications.

The integration of video capabilities into ChatGPT, which Altman said is under consideration, would mark a substantial advance over the current model's image and audio features.

What is the current GPT-5 training timeline and news?

OpenAI CEO Sam Altman has confirmed that GPT-5 is in the early stages of development, suggesting that the model's training has not yet begun and is likely in the planning phase. The initial stages of GPT-5 development include setting up the training approach, coordinating annotators, and curating a dataset, indicating a focus on preparing the infrastructure and processes needed for model training. OpenAI is also using a web crawler named GPTBot to collect a robust dataset from publicly available information online, which will likely enhance the quality and diversity of the training data for GPT-5.

OpenAI has made a strategic decision to proceed with the development of GPT-5, which includes trademarking the term "GPT-5" in August 2023. This decision reflects the company's commitment to advancing AI capabilities and contrasts with Sam Altman's earlier hesitation and his statements suggesting a pause in progression beyond GPT-4. The change may be due to new insights or shifts in strategy.

There is an emphasis on predicting capabilities from a safety perspective, implying that rigorous testing and safety considerations will be integral to GPT-5's development. However, the specific timeline for the release of GPT-5 and its capabilities have not been disclosed, leaving much to speculation. There is anticipation about GPT-5's potential impact across various industries, but also concerns regarding bias, misinformation, and malicious use.

What are the Conflicting Reports?

There have been conflicting reports about the timeline for GPT-5. Elon Musk, a co-founder of OpenAI, suggested in an interview that GPT-5 could be released by the end of 2023. This claim contrasts with Altman's statement that OpenAI had not yet begun work on GPT-5.

The development and launch timeline of GPT-5 is heavily influenced by two critical factors: the data required for training and the financial resources available. OpenAI has been affected by the high demand for NVIDIA's H100 chips, which are essential for building the data centers needed to train AI models. However, the situation is expected to improve next year, with players like AMD and Microsoft developing their own hardware to compete with NVIDIA.

Given these factors, it's difficult to predict exactly when GPT-5 training will begin. However, it's clear that OpenAI is actively preparing for the development of GPT-5, and more information is expected to emerge in the coming months.

In a recent discussion, Sam Altman emphasized the significant improvement expected from the next-generation large language model, which aims to outperform humans in reasoning capabilities and to offer a wider range of responses with greater accuracy. The goal is to consistently provide the best response out of a multitude of possibilities, something the current GPT-4 model cannot yet do reliably. The anticipated GPT Store is expected to be a hub for these advancements, marking a milestone in the journey towards more sophisticated artificial intelligence systems.

Just days ago, Bill Gates emphasized the importance of AI safety, noting that advancements in AI should be carefully considered to ensure they align with ethical standards and public well-being.