LLM App Frameworks

by Stephen M. Walker II, Co-Founder / CEO


LLM app frameworks are libraries and tools that help developers integrate and manage large language models (LLMs) in their software. They provide the infrastructure to deploy, monitor, and scale LLMs across platforms and applications.

These frameworks are core tools for AI engineers, typically covering data and prompt management, API connectors, and model deployment.

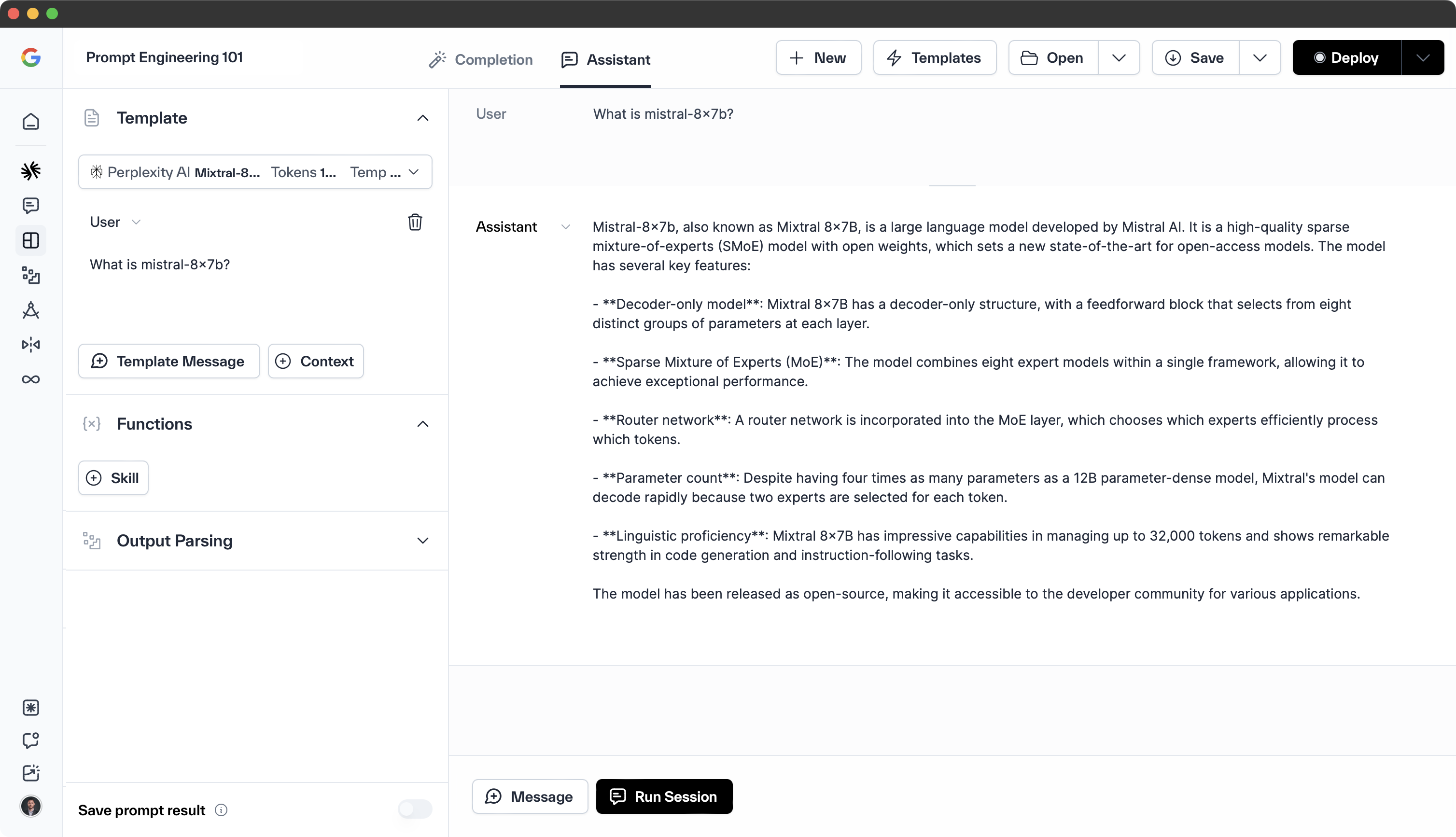

Frameworks like LangChain offer prompt engineering and templating tools along with conversation memory management, streamlining the development of LLM-based applications. LlamaIndex complements these capabilities with tools for indexing and retrieving your own data.
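Conversation memory management usually comes down to deciding which past turns to resend with each request, because a model's context window is finite. The sketch below shows one common strategy: a sliding window that always keeps the system prompt, then the newest turns that fit a token budget. It is not LangChain's memory API. `WindowMemory` and `approx_tokens` are hypothetical names, and counting words as tokens is a rough assumption (real tokenizers count differently).

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


def approx_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word (real tokenizers differ).
    return len(text.split())


@dataclass
class WindowMemory:
    """Hypothetical sliding-window memory: system prompt plus the newest turns that fit."""
    system_prompt: str
    max_tokens: int
    turns: list[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.turns.append(Message(role, content))

    def messages(self) -> list[Message]:
        budget = self.max_tokens - approx_tokens(self.system_prompt)
        kept: list[Message] = []
        # Walk from newest to oldest and stop at the first turn that no longer fits.
        for msg in reversed(self.turns):
            cost = approx_tokens(msg.content)
            if cost > budget:
                break
            kept.append(msg)
            budget -= cost
        # Restore chronological order after the system prompt.
        return [Message("system", self.system_prompt)] + kept[::-1]


memory = WindowMemory("You are a helpful assistant.", max_tokens=12)
memory.add("user", "What is LlamaIndex?")
memory.add("assistant", "A library for indexing and retrieving data.")
memory.add("user", "And LangChain?")
context = [m.content for m in memory.messages()]
```

Here the older exchange is dropped because it would exceed the 12-token budget. Summarizing dropped turns instead of discarding them is a common alternative.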

Connectors for APIs such as OpenAI, Anthropic, Together, and Perplexity Labs make it straightforward to add natural language processing features to an application, improving its intelligence and user interaction.
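Part of a connector's job is to translate one application-level request into each provider's wire format. The sketch below only builds request bodies, without sending them, to show why a shared interface helps. OpenAI's Chat Completions API takes the system prompt as the first entry in `messages`, while Anthropic's Messages API takes it as a top-level `system` field and requires `max_tokens`. The `Connector` protocol, the class names, and the model names are hypothetical placeholders.

```python
from typing import Protocol

Message = dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


class Connector(Protocol):
    def build_request(self, system: str, messages: list[Message]) -> dict: ...


class OpenAIStyleConnector:
    """Chat Completions style: the system prompt is the first message."""

    def __init__(self, model: str):
        self.model = model

    def build_request(self, system: str, messages: list[Message]) -> dict:
        return {
            "model": self.model,
            "messages": [{"role": "system", "content": system}, *messages],
        }


class AnthropicStyleConnector:
    """Messages API style: top-level system field, max_tokens required."""

    def __init__(self, model: str, max_tokens: int = 1024):
        self.model = model
        self.max_tokens = max_tokens

    def build_request(self, system: str, messages: list[Message]) -> dict:
        return {
            "model": self.model,
            "max_tokens": self.max_tokens,
            "system": system,
            "messages": list(messages),
        }


def build_all(connectors: list[Connector], system: str, messages: list[Message]) -> list[dict]:
    # Application code depends only on the shared interface, not on any provider.
    return [c.build_request(system, messages) for c in connectors]


chat = [{"role": "user", "content": "Hello"}]
payloads = build_all(
    [OpenAIStyleConnector("example-gpt-model"), AnthropicStyleConnector("example-claude-model")],
    "Be concise.",
    chat,
)
```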

LLM App Frameworks

Here are the leading LLM app frameworks. Each framework offers unique strengths and is suited to different use cases, so the optimal choice will vary based on the specific needs of your application.

Best all-in-one for AI Teams

Klu.ai is an all-in-one LLM app platform that enables AI teams to experiment with, version, and fine-tune GPT-4 apps. It supports collaborative prompt engineering, allowing teams to explore, save, and collaborate on their projects.

Klu also offers insights into usage and system performance across features and teams, helping users understand user preferences and prompt performance and label their data. It also provides a high-performance, private platform for building custom AI systems, simplifying model deployment and reducing overhead.

If you're not going to use Klu, here are our favorite alternatives.

Best performance for chained prompts

- Dust.ai is an AI platform that lets non-developers build AI apps quickly. It offers chained LLM apps, multiple inputs, model choice, and semantic search, along with an intuitive interface and pre-trained models. Deployment is also simple, so users can ship their models to their favorite platforms with little effort.
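Chaining means feeding the output of one prompt into the next. Below is a minimal, library-independent sketch of a sequential chain; it is not Dust's API. `fake_model` is a hypothetical stand-in that echoes its prompt so the example runs offline, and the `{input}` placeholder convention is an assumption of this sketch.

```python
from typing import Callable

# Any function from prompt text to completion text can serve as the model.
Model = Callable[[str], str]


def fake_model(prompt: str) -> str:
    # Hypothetical offline stand-in for a real LLM call.
    return f"<answer to: {prompt}>"


def run_chain(model: Model, templates: list[str], user_input: str) -> list[str]:
    """Run templates in order; each step's {input} is the previous step's output."""
    outputs: list[str] = []
    current = user_input
    for template in templates:
        prompt = template.format(input=current)
        current = model(prompt)
        outputs.append(current)
    return outputs


steps = ["Summarize: {input}", "Translate to French: {input}"]
outputs = run_chain(fake_model, steps, "LLM frameworks")
```

Returning every intermediate output, not just the last one, makes it easier to see which step of a chain went wrong.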

Best TypeScript framework

- Axflow is a TypeScript-first set of open-source modules covering the full lifecycle of AI applications, including LLM utilities, dataset management, continuous evaluation, fine-tuning, and model serving. Its modular libraries can be adopted incrementally and together form an end-to-end, opinionated framework for LLM development.

Best Python RAG framework

- LlamaIndex is a library for building search and retrieval applications, with hierarchical indexing and flexible, configurable retrieval. It is tailored to indexing and retrieving data, which makes it a good fit for semantic search and context-aware querying.
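At its core, retrieval for RAG ranks stored text by similarity to a query and places the best matches in the prompt. The sketch below uses term-frequency vectors and cosine similarity in place of the learned embeddings a library like LlamaIndex would normally use, and it omits hierarchical indexing. `KeywordIndex` and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class KeywordIndex:
    """Toy in-memory index storing one term-frequency vector per document."""

    def __init__(self, docs: list[str]):
        self.docs = docs
        self.vectors = [Counter(tokenize(d)) for d in docs]

    def retrieve(self, query: str, top_k: int = 2) -> list[str]:
        q = Counter(tokenize(query))
        ranked = sorted(range(len(self.docs)), key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.docs[i] for i in ranked[:top_k]]


def build_prompt(index: KeywordIndex, question: str) -> str:
    # Put the best-matching document into the prompt as grounding context.
    context = "\n".join(index.retrieve(question, top_k=1))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


index = KeywordIndex([
    "vLLM serves models with high throughput.",
    "LlamaIndex indexes documents for retrieval.",
    "Mycroft is a voice assistant.",
])
prompt = build_prompt(index, "How does LlamaIndex index documents?")
```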

Best for ReAct agents

- LangChain is an open-source framework and developer toolkit that helps developers get started with LLMs. It offers extensive control and adaptability across use cases, making it a broader, more general-purpose framework than LlamaIndex.

- Flowise is a drag-and-drop UI for building customized LLM flows with LangChain.js.

- Dify is an open-source alternative to the OpenAI Assistants API and GPTs. Dify.AI is an LLM application development platform that combines Backend-as-a-Service and LLMOps concepts, covering the core tech stack for building generative AI-native applications, including a built-in RAG engine.
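ReAct agents alternate between a reasoning step (Thought), a tool call (Action), and the tool's result (Observation) until they can give a final answer. The loop below sketches that pattern without any framework; it is not LangChain's agent API. The `Action: tool[input]` text format, the `lookup` tool, and `scripted_model` (which replays fixed responses in place of a real LLM) are all assumptions made for illustration.

```python
import re
from typing import Callable

# Hypothetical knowledge base behind the single tool this agent can use.
FACTS = {"vllm": "an open-source LLM inference and serving library"}


def lookup(term: str) -> str:
    return FACTS.get(term.strip().lower(), "no entry found")


TOOLS: dict[str, Callable[[str], str]] = {"lookup": lookup}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")
FINAL_RE = re.compile(r"Final Answer: (.*)")


def react_loop(model: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)  # expected: a Thought plus an Action or a Final Answer
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1)
        action = ACTION_RE.search(step)
        if action:
            name, arg = action.groups()
            tool = TOOLS.get(name)
            observation = tool(arg) if tool else f"unknown tool {name}"
            transcript += f"Observation: {observation}\n"
    return "no answer within step limit"


def scripted_model(transcript: str) -> str:
    # Stand-in for an LLM: look something up first, then answer from the observation.
    if "Observation:" not in transcript:
        return "Thought: I should look this up.\nAction: lookup[vLLM]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have what I need.\nFinal Answer: vLLM is {observation}."


answer = react_loop(scripted_model, "What is vLLM?")
```

The `max_steps` cap matters in practice: without it, a model that never emits a final answer would loop forever.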

Best alternative to LangChain

- Griptape is an open-source Python framework and a managed cloud platform for building and deploying enterprise-class AI applications. It allows developers to create simple LLM-powered agents, compose sequential event-driven pipelines, or orchestrate complex DAG-based workflows.
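A DAG-based workflow runs each task only after the tasks it depends on have finished, passing their outputs along. The sketch below uses Python's standard `graphlib.TopologicalSorter` to order the tasks. It is not Griptape's API, and the task names and lambdas stand in for hypothetical LLM calls.

```python
from graphlib import TopologicalSorter
from typing import Callable

# A task receives its parents' outputs (keyed by task name) and returns a string.
Task = Callable[[dict[str, str]], str]


def run_dag(tasks: dict[str, Task], deps: dict[str, set[str]]) -> dict[str, str]:
    """Run every task in dependency order; deps maps a task to the tasks it needs."""
    graph = {name: deps.get(name, set()) for name in tasks}
    results: dict[str, str] = {}
    for name in TopologicalSorter(graph).static_order():
        parents = {p: results[p] for p in graph[name]}
        results[name] = tasks[name](parents)
    return results


tasks: dict[str, Task] = {
    "fetch": lambda _: "raw docs",
    "summarize": lambda inp: f"summary of {inp['fetch']}",
    "keywords": lambda inp: f"keywords of {inp['fetch']}",
    "report": lambda inp: f"{inp['summarize']} + {inp['keywords']}",
}
deps = {"summarize": {"fetch"}, "keywords": {"fetch"}, "report": {"summarize", "keywords"}}
results = run_dag(tasks, deps)
```

`static_order()` raises `graphlib.CycleError` if the dependencies contain a cycle. Independent tasks such as `summarize` and `keywords` could instead run in parallel using the sorter's `get_ready()` and `done()` methods.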

Best RAG framework for data-intensive projects

- Haystack is an open-source LLM framework for building production-ready applications. It provides all tooling in one place, including preprocessing, pipelines, agents & tools, prompts, evaluation, and fine-tuning. It also allows developers to choose their favorite database and scale to millions of documents.
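Preprocessing for RAG typically includes splitting long documents into overlapping chunks before they are embedded and stored, so that text near a chunk boundary keeps some of its surrounding context. Haystack ships its own preprocessing components; the function below is a library-independent sketch, and its name and word-based splitting are assumptions.

```python
def split_words(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into chunks of chunk_size words; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


chunks = split_words("a b c d e f g", chunk_size=3, overlap=1)
```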

Best connector framework for platforms using multiple models

- LiteLLM is a relatively new library that eases migration between AI APIs by letting you call many providers through one OpenAI-style interface. It also manages timeouts, cooldowns, and retries, which helps keep an app responsive and reliable when a provider is slow or failing.
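Retry, cooldown, and fallback behavior of this kind can be sketched as follows. This is not LiteLLM's Router API: `Deployment`, `complete`, and the parameter defaults are hypothetical, and timeouts are assumed to be enforced by each client function and surfaced as exceptions.

```python
import time
from typing import Callable, Optional


class Deployment:
    """One model endpoint; `call` is a hypothetical client function."""

    def __init__(self, name: str, call: Callable[[str], str]):
        self.name = name
        self.call = call
        self.cooldown_until = float("-inf")  # no cooldown yet


def complete(deployments: list[Deployment], prompt: str, retries: int = 2,
             base_delay: float = 0.01, cooldown: float = 30.0) -> str:
    """Try deployments in order, retrying with backoff and cooling down failures."""
    last_error: Optional[Exception] = None
    for dep in deployments:
        if time.monotonic() < dep.cooldown_until:
            continue  # failed recently; skip until its cooldown expires
        for attempt in range(retries + 1):
            try:
                return dep.call(prompt)
            except Exception as err:  # e.g. a timeout or rate-limit error
                last_error = err
                if attempt < retries:
                    time.sleep(base_delay * 2 ** attempt)  # exponential backoff
        dep.cooldown_until = time.monotonic() + cooldown
    raise RuntimeError("all deployments failed or are cooling down") from last_error


def flaky(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def backup(prompt: str) -> str:
    return f"backup answered: {prompt}"


primary = Deployment("primary", flaky)
secondary = Deployment("secondary", backup)
first = complete([primary, secondary], "Hello")
second = complete([primary, secondary], "Hi again")  # primary is skipped while cooling down
```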

LLM Serving Frameworks

LLM serving frameworks are specialized tools that help developers deploy and manage language models. They make it easier to host these models in a private cloud or on other infrastructure you control, and to run them efficiently.

- vLLM: an open-source library for high-throughput LLM inference and serving.

- Ray Serve: the model-serving library built on Ray, a unified framework for scaling AI and Python applications. Ray consists of a core distributed runtime and a set of AI libraries for accelerating ML workloads, and it is used by OpenAI for internal projects.

- MLC LLM: a universal deployment solution that lets LLMs run efficiently on consumer devices by using native hardware acceleration.

- DeepSpeed-MII: a good choice if you already have experience with the DeepSpeed library and want to keep using it to deploy LLMs.

- OpenLLM: a framework that lets you attach multiple adapters to a single deployed LLM. It supports PyTorch, TensorFlow, and Flax implementations.

- FlexGen: a tool for running large language models on a single GPU in throughput-oriented scenarios.

- lanarky: a framework built on FastAPI for building production-grade LLM applications.

- Xinference: a framework for running inference with open-source language models, speech recognition models, and multimodal models, so you can pick whichever model fits your needs.

- Giskard: a testing framework for ML models, from tabular models to LLMs.
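Testing LLM output often starts with simple behavioral checks run against a fixed set of prompts. The harness below is a minimal sketch of that idea and is not Giskard's API; `Case`, `run_suite`, and `canned_model` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Case:
    prompt: str
    must_contain: list[str]  # substrings a correct answer should include
    must_not_contain: list[str] = field(default_factory=list)  # red-flag substrings


def run_suite(model: Callable[[str], str], cases: list[Case]) -> list[str]:
    """Return failure messages; an empty list means every case passed."""
    failures: list[str] = []
    for case in cases:
        answer = model(case.prompt).lower()  # case-insensitive matching
        for needle in case.must_contain:
            if needle.lower() not in answer:
                failures.append(f"{case.prompt!r}: missing {needle!r}")
        for needle in case.must_not_contain:
            if needle.lower() in answer:
                failures.append(f"{case.prompt!r}: contains {needle!r}")
    return failures


def canned_model(prompt: str) -> str:
    # Hypothetical stand-in for the model under test.
    return "Paris is the capital of France."


cases = [
    Case("Capital of France?", ["paris"], ["london"]),
    Case("Capital of Spain?", ["madrid"]),
]
failures = run_suite(canned_model, cases)
```

Substring checks are brittle for free-form text; they work best for factual or format constraints and are often supplemented with model-graded evaluations.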

As with app frameworks, the right serving framework depends on the specific requirements of your application.

Top LLM Providers

As of 2024, the landscape of Large Language Model (LLM) providers is diverse, with several key players offering a range of services from API access to open-source models. Here are the top LLM vendors to consider:

- OpenAI: the creator of the GPT series, including GPT-3.5 and GPT-4. OpenAI is widely seen as the leader in the field and popularized LLMs with ChatGPT.

- TogetherAI: offers a range of fast, cost-efficient generative AI models and tools, including the Mixtral-8x7B LLM.

- Anthropic: known for its work on AI safety and interpretability, Anthropic is a significant contributor to the LLM space.

- Mistral: known for its Mistral 7B and Mixtral models. Its recently launched platform also offers Mistral Medium, reportedly outperforming GPT-3.5, Claude, and Gemini Pro on some benchmarks.

- Cohere: offers a platform for building AI applications with natural language understanding capabilities.

- Google: a major player with models such as LaMDA and PaLM, and the recently unveiled Gemini LLM.

- AI21 Labs: offers Jurassic-2, a model family that improves on its predecessor, Jurassic-1, in quality and capabilities.

These companies are at the forefront of LLM development, each contributing to the advancement of natural language processing and generation technologies. They provide tools that enable a wide range of applications, from text generation to sentiment analysis, information retrieval, and even code generation.

For developers and businesses looking to integrate LLMs into their operations, these providers offer a mix of commercial and open-source options to suit various needs. Whether you're looking to enhance customer interactions, expedite development cycles, or leverage AI for creative endeavors, these LLM vendors are key players to consider in 2024.

Popular AI Assistants

- ChatGPT: a conversational assistant developed by OpenAI and built on its GPT models. The underlying models are trained on a massive dataset of text and code, which lets them learn the patterns of human conversation and generate natural, engaging responses.

- Bard: an experimental conversational AI chat service developed by Google, designed to simulate human-like conversation and provide information drawn from the web. Bard also includes a Google Search button so users can fact-check answers or explore topics further.

- Claude: a family of LLMs and the name of the assistant developed by Anthropic. Claude's main differentiators are its creativity, large context window, and strict safety alignment, which can make it more restrictive than other assistants but valuable to organizations with a low risk appetite.

Open Source AI Assistants

The top open-source AI assistants include:

- Ollama: an open-source project for running large language models locally. It provides a simple command-line interface and a local REST API for interacting with and customizing models.

- Flowise: an open-source, low-code LLM app builder that provides a visual interface for building customized LLM orchestration flows and AI agents.

- Chatbot UI: an open-source AI chat interface that anyone can use, with a simple, user-friendly front end for chatbots.

- Mycroft: a privacy-focused, open-source voice assistant. It is customizable and runs on many platforms, including desktops and smart speakers.

- Leon: an open-source personal assistant that can live on your server. It is built with Node.js and Python and draws on artificial intelligence concepts.

These open-source AI assistants cater to different interaction modes: Ollama and Chatbot UI are tailored for text-based communication, while Mycroft and Leon are voice-oriented. Flowise uniquely enables the construction of customized LLM orchestration flows.