Data Annotation for LLMs

by Stephen M. Walker II, Co-Founder / CEO

What is Data Annotation for LLMs?

Data Annotation for Large Language Models (LLMs) refers to the process of labeling, categorizing, and tagging data so that machine learning algorithms can learn from it and generate accurate predictions.

This process is crucial for training LLMs, which are a type of artificial intelligence (AI) program that can recognize and generate text, among other tasks.

Data annotation involves labeling raw data with specific information such as metadata, labels, semantic information, etc., that a machine learning algorithm can use to learn from.

For instance, in natural language processing, text data may be tagged with entities, sentiment, or topic information.
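As a rough illustration of what such a tagged text record might look like, here is a minimal sketch. The class names (`EntitySpan`, `AnnotatedText`), the field layout, and the example sentence are assumptions made for this example, not a standard schema or any particular tool's format.

```python
from dataclasses import dataclass, field


@dataclass
class EntitySpan:
    start: int   # character offset where the entity begins (inclusive)
    end: int     # character offset where the entity ends (exclusive)
    label: str   # entity type, e.g. "ORG" or "LOC"


@dataclass
class AnnotatedText:
    text: str
    sentiment: str   # e.g. "positive", "negative", "neutral"
    topic: str
    entities: list[EntitySpan] = field(default_factory=list)

    def entity_texts(self) -> list[tuple[str, str]]:
        """Return (surface text, label) pairs for every entity span."""
        return [(self.text[e.start:e.end], e.label) for e in self.entities]


# Hypothetical example record with sentiment, topic, and two entities.
record = AnnotatedText(
    text="Acme opened a new office in Berlin.",
    sentiment="neutral",
    topic="business",
    entities=[EntitySpan(0, 4, "ORG"), EntitySpan(28, 34, "LOC")],
)
print(record.entity_texts())  # [('Acme', 'ORG'), ('Berlin', 'LOC')]
```

Storing character offsets rather than copied strings keeps each label tied to an exact position in the raw text, which makes later validation easier.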

The process of data annotation is essential for several reasons:

- Specialized Tasks — LLMs by themselves cannot perform specialized or business-specific tasks. Data annotation allows the customization of LLMs to understand and generate accurate predictions in specific domains or industries.

- Bias Mitigation — LLMs are susceptible to biases present in the data they are trained on, which can impact the accuracy and fairness of their responses. Through data annotation, biases can be identified and mitigated.

- Quality Control — Data annotation enables quality control by ensuring that the data used to train the LLMs is accurate and reliable.

- Compliance and Regulation — Data annotation allows for the inclusion of compliance measures and regulations specific to an industry. By annotating data with legal, ethical, or regulatory considerations, LLMs can be trained to provide responses that adhere to industry standards and guidelines.

In the context of LLMs, data annotation can also be used to fine-tune these models to understand and generate accurate predictions in domains or industries with specific requirements. This helps improve the reliability and trustworthiness of the LLMs in practical applications.

Moreover, some LLMs are capable of zero-shot or few-shot learning, which enables the model to make predictions without any fine-tuning. In data labeling, this capability allows LLMs to take on labeling tasks that would otherwise be done manually, without lengthy preparation.

However, data annotation can be challenging because of subjectivity and bias among annotators, as well as the size and complexity of the data. It is therefore crucial to establish clear guidelines for annotators to follow so that annotations stay accurate and consistent.
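One common way to check whether guidelines are producing consistent annotations is to have two annotators label the same items and measure their agreement. The sketch below computes Cohen's kappa, which corrects raw agreement for agreement expected by chance. The function name and the sample labels are illustrative assumptions.

```python
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Fraction of items on which both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on four items.
annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohen_kappa(annotator_1, annotator_2))  # 0.5
```

A low score on a pilot batch is usually a signal to revisit the guidelines before annotating the full dataset.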

What are some common techniques used for data annotation in LLMs?

Data annotation for Large Language Models (LLMs) involves a variety of techniques, each with its own strengths and applications. Here are some of the most common methods:

-

Manual Annotation — This involves human annotators reviewing and assigning data labels or annotations. It ensures the creation of high-quality labeled datasets, but can be time-consuming and less scalable.

-

Automatic Annotation — LLMs can convert raw data into labeled data by leveraging their natural language processing capabilities. This method is faster than manual annotation and can handle large datasets effectively.

-

Semi-automatic Annotation — This is a hybrid approach that combines human assistance with LLMs for the most desirable labeling result. It can balance the speed of automatic annotation with the accuracy of manual annotation.

-

Instruction Tuning — This involves fine-tuning publicly available LLMs through the curation of additional labeled data. For example, OpenAI uses human labelers to collect data for fine-tuning its GPT models.

-

Zero-shot Learning — Through zero-shot learning, labels can be obtained on unlabeled data using only the output of the LLM, rather than having to ask a human to obtain the labels. This can significantly lower the cost of obtaining labels and makes the process far more scalable.

-

Prompting — This involves giving the LLM a description of the task, some examples, and a new example to generate a label. The data generated from this is generally going to be noisy and of lower quality than human labels.

-

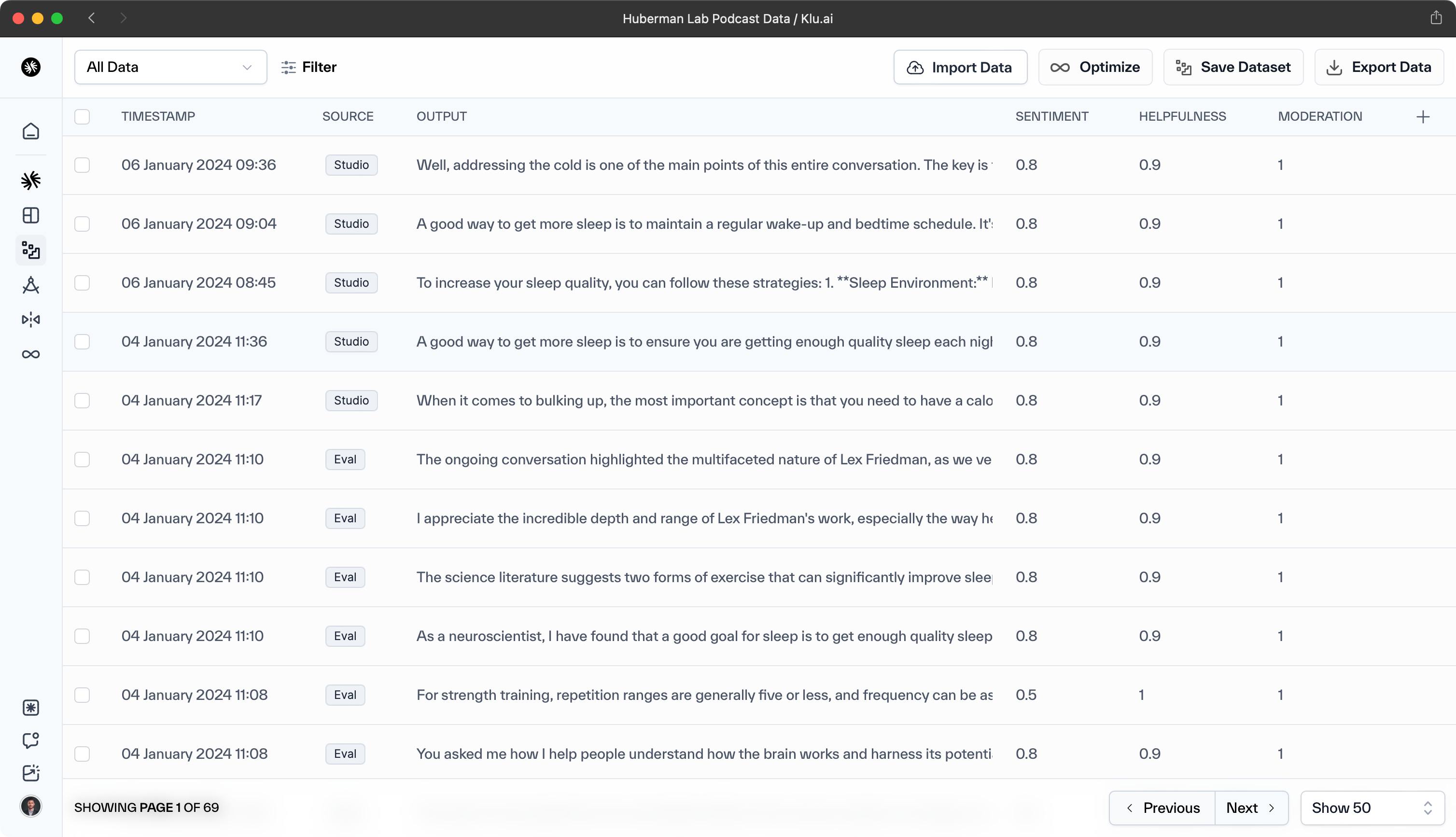

Programmatic Labeling — With tools like Klu.ai Data, organizations can generate labeled data programmatically. This method accelerates the process of generating labeled data.

-

In-house, Outsourced, Crowdsourced, and AI-driven Annotation — These are different approaches to who performs the annotation. In-house annotation involves using your own team of dedicated annotators, while outsourced and crowdsourced annotation involve external parties. AI-driven annotation leverages machine learning models to automate the process.

The choice of annotation technique depends on the specific requirements of the LLM, the nature of the data, and the context in which it is being used.
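The prompting technique described above (a task description, some examples, and a new item to label) can be sketched as follows. Everything here is an assumption for illustration: the label set, the prompt layout, and `fake_complete`, which stands in for a call to whatever model API is actually used. Passing an empty example list gives the zero-shot variant.

```python
from typing import Callable, Optional

LABELS = ("positive", "negative", "neutral")
TASK = "Classify the sentiment of the text as positive, negative, or neutral."
EXAMPLES = [
    ("I love this phone.", "positive"),
    ("The battery died after a day.", "negative"),
]


def build_prompt(task: str, examples: list[tuple[str, str]], text: str) -> str:
    """Assemble task description, labeled examples, and the new item."""
    lines = [task, ""]
    for example_text, label in examples:
        lines += [f"Text: {example_text}", f"Label: {label}", ""]
    lines += [f"Text: {text}", "Label:"]
    return "\n".join(lines)


def parse_label(raw: str) -> Optional[str]:
    """Normalize model output; return None if it is not a known label."""
    cleaned = raw.strip().lower().rstrip(".")
    return cleaned if cleaned in LABELS else None


def label_with_llm(complete: Callable[[str], str], text: str) -> Optional[str]:
    """Label one text using any prompt-in, completion-out callable."""
    return parse_label(complete(build_prompt(TASK, EXAMPLES, text)))


def fake_complete(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; always answers "Positive."
    return " Positive.\n"


print(label_with_llm(fake_complete, "Great product, works as advertised."))
```

Rejecting any output that is not in the label set is one simple guard against the noisy labels this technique tends to produce.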

What is Data Annotation?

Data annotation is the process of labeling or tagging data to make it understandable and usable for machine learning (ML) and artificial intelligence (AI) models. This data can be in various forms such as text, images, audio, or video. The process involves adding informative labels to raw data, providing context that a machine learning model can learn from.

For instance, in an image, objects like traffic lights, pedestrians, vehicles, and buildings can be labeled to train the model to recognize these elements.

There are several types of data annotation, including image and video annotation, text categorization, semantic annotation, and content categorization. These annotations help machines identify various entities in the data and understand the context. For example, in text annotation, additional information, labels, and definitions are attached to texts. In image annotation, objects and entities in an image are marked and outlined, making the image readable for machines.

Data annotation is a crucial part of supervised learning, a type of machine learning where the model learns from labeled training data. The quality of the annotated data directly impacts the performance and accuracy of the ML models.

High-quality data annotation can lead to more reliable ML models, thereby improving the efficiency and effectiveness of the project. Conversely, poor data quality can significantly impact the accuracy and effectiveness of ML models.

Data annotation can be done manually by human annotators or automatically using advanced machine learning techniques. However, manual annotation can be time-consuming and labor-intensive, making it one of the top limitations of AI implementation for organizations.

Despite these challenges, the importance of data annotation in the field of AI and ML cannot be overstated. It is the backbone of these technologies, enabling models to understand and interpret data, recognize patterns, and make accurate predictions.

What are the different types of data annotation?

Data annotation is a critical process in machine learning and artificial intelligence, and it comes in various types depending on the nature of the data and the specific requirements of the project. Here are the different types of data annotation:

- Text Annotation — This involves attaching additional information, labels, and definitions to texts. It's essential for Natural Language Processing (NLP) tasks and can include sentiment annotation, text classification, and entity annotation.

- Image Annotation — This is the task of marking and outlining objects and entities in an image, making it readable for machines. It's crucial for computer vision applications such as object recognition and scene understanding. Techniques used in image annotation include bounding boxes, where an object in the image is enclosed in a rectangular box defined by its x and y coordinates.

- Video Annotation — Similar to image annotation, but applied to video data. It involves marking and outlining objects and entities in a video over a sequence of frames.

- Audio Annotation — This involves identifying and tagging parameters within audio data, such as language, speaker demographics, mood, intention, and emotion.

- Semantic Annotation — This involves adding metadata to the data that provides more context and meaning. It's often used in text and image data to help machines understand the context and relationships between different entities.

- Content Categorization — This involves classifying data into predefined categories. It can be applied to various types of data, including text, images, and videos.

- Key-point Annotation — This involves marking key points or landmarks in an image or video. It's often used in applications such as pose estimation and facial recognition.

The effectiveness of machine learning models is closely tied to the chosen data annotation method, which should align with the data's nature and the machine learning task's specific needs.
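To make the bounding-box and key-point annotation types above more concrete, here is a minimal sketch of how such labels might be stored. The class names, the corner-based coordinate layout, and the sample values are assumptions for illustration; real annotation tools each define their own formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box around one object, in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def __post_init__(self) -> None:
        if self.x_min >= self.x_max or self.y_min >= self.y_max:
            raise ValueError("box must have positive width and height")

    def to_xywh(self) -> tuple[float, float, float, float]:
        """Convert corner format to (x, y, width, height)."""
        return (self.x_min, self.y_min,
                self.x_max - self.x_min, self.y_max - self.y_min)

    def contains(self, x: float, y: float) -> bool:
        """Check whether a point lies inside the box (edges included)."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


@dataclass(frozen=True)
class KeyPoint:
    """A named landmark, e.g. a joint used for pose estimation."""
    name: str
    x: float
    y: float


# Hypothetical annotations for one image.
person = BoundingBox("person", 10, 20, 110, 80)
nose = KeyPoint("nose", 60, 35)
print(person.to_xywh())                 # (10, 20, 100, 60)
print(person.contains(nose.x, nose.y))  # True
```

Validating each box when it is created catches a frequent annotation error (swapped or degenerate corners) before it reaches training.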

What are some common applications for Data Annotation for LLMs?

Data Annotation is primarily used in the training and fine-tuning of Large Language Models (LLMs). It is a critical step in the development of these models, as it provides the labeled data that the models need to learn.

In the field of natural language processing (NLP), data annotation is used to label text data for tasks such as sentiment analysis, named entity recognition, and machine translation.

For instance, annotators might label sentences with their sentiment (positive, negative, neutral) or identify entities in the text (such as people, places, and organizations).

In computer vision, data annotation is used to label image or video data for tasks such as object detection, image segmentation, and image classification. For example, annotators might draw bounding boxes around objects in an image or label each pixel in an image with its corresponding class (such as "car", "person", "tree").

Data Annotation for LLMs is also used in other domains. In healthcare, it is used to label medical images or electronic health records for tasks such as disease detection or patient risk prediction. In autonomous driving, it is used to label sensor data for tasks such as object detection, lane detection, and traffic sign recognition.

Despite its wide range of applications, Data Annotation for LLMs does have some challenges. It can be time-consuming and expensive, especially for large datasets or complex tasks. It also requires a high level of expertise to ensure the accuracy and consistency of the labels.

How does Data Annotation for LLMs work?

Data Annotation for LLMs involves labeling data with the correct labels that the model needs to learn. This process can be done manually by human annotators, semi-automatically with the help of machine learning algorithms, or automatically with machine learning algorithms.

Manual annotation involves human annotators labeling the data. This is typically the most accurate method, but it can be time-consuming and expensive, especially for large datasets.

Semi-automatic annotation involves using machine learning algorithms to pre-label the data, and then human annotators review and correct the labels. This method can be faster and cheaper than manual annotation, but it still requires human involvement to ensure the accuracy of the labels.

Automatic annotation involves using machine learning algorithms to label the data without human involvement. This method can be the fastest and cheapest, but it may not be as accurate as the other methods, especially for complex tasks or low-quality data.

The choice of annotation method depends on the specific requirements of the task, the quality and quantity of the data, and the resources available.
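The semi-automatic workflow can be sketched as a routing step: a model pre-labels each item, and items go either straight into the dataset or into a human review queue. Note that this sketch uses a variation in which only low-confidence pre-labels are reviewed. The threshold value, the function names, and the keyword-based `toy_prelabel` model are all assumptions for illustration. Setting the threshold above 1.0 sends every item to review, which matches the full-review workflow described above.

```python
from typing import Callable, Iterable

PreLabel = tuple[str, float]  # (predicted label, confidence in [0, 1])
Routed = tuple[str, str, float]  # (item, predicted label, confidence)


def route_prelabels(
    items: Iterable[str],
    prelabel: Callable[[str], PreLabel],
    threshold: float = 0.9,
) -> tuple[list[Routed], list[Routed]]:
    """Split pre-labeled items into auto-accepted and needs-human-review."""
    accepted: list[Routed] = []
    needs_review: list[Routed] = []
    for item in items:
        label, confidence = prelabel(item)
        target = accepted if confidence >= threshold else needs_review
        target.append((item, label, confidence))
    return accepted, needs_review


def toy_prelabel(text: str) -> PreLabel:
    # Hypothetical stand-in for a trained model or an LLM call.
    if "refund" in text.lower():
        return "billing", 0.95
    return "other", 0.55


accepted, needs_review = route_prelabels(
    ["I want a refund", "Hello there"], toy_prelabel
)
print(len(accepted), len(needs_review))  # 1 1
```

Keeping the predicted label alongside each queued item lets reviewers correct a suggestion instead of labeling from scratch, which is where most of the time savings come from.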

What are some challenges associated with Data Annotation for LLMs?

While Data Annotation for LLMs is critical to building reliable models, it also comes with several challenges:

- Quality Control — Ensuring the quality and consistency of the labels can be challenging, especially for large datasets or complex tasks. This requires a high level of expertise and careful quality control processes.

- Time and Cost — Data annotation can be time-consuming and expensive, especially for manual annotation. This can be a barrier to the development of LLMs, especially for small organizations or researchers with limited resources.

- Privacy and Ethics — Data annotation often involves handling sensitive data, such as personal information or medical records. This raises privacy and ethical issues that need to be carefully managed.

- Scalability — Scaling up data annotation to handle large datasets or complex tasks can be challenging. This requires efficient processes, effective use of technology, and careful management of resources.

Despite these challenges, researchers and practitioners are developing various methods and tools to improve the efficiency, quality, and scalability of data annotation for LLMs.

What are some current state-of-the-art methods for Data Annotation for LLMs?

There are many different methods and tools available for data annotation, each with its own advantages and disadvantages. Some of the most popular methods include the following:

- Manual Annotation — This involves human annotators labeling the data. While this method can be time-consuming and expensive, it is often the most accurate.

- Semi-Automatic Annotation — This involves using machine learning algorithms to pre-label the data, and then human annotators review and correct the labels. This method can be faster and cheaper than manual annotation, but it still requires human involvement to ensure the accuracy of the labels.

- Automatic Annotation — This involves using machine learning algorithms to label the data without human involvement. This method can be the fastest and cheapest, but it may not be as accurate as the other methods, especially for complex tasks or low-quality data.

- Crowdsourcing — This involves using a large crowd of people, often through an online platform, to annotate the data. This method can be a cost-effective way to annotate large datasets, but it requires careful quality control to ensure the accuracy and consistency of the labels.

- Active Learning — This involves using machine learning algorithms to identify the most informative examples for annotation. This method can be an efficient way to use limited annotation resources, but it requires a good initial model to start the active learning process.
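One simple form of active learning is uncertainty sampling: the current model scores the unlabeled pool, and the items it is least sure about are sent to annotators first. The sketch below ranks items by the entropy of their predicted class probabilities. The function names, the item IDs, and the probability values are assumptions for illustration; other selection criteria exist.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy of a probability distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(
    predictions: dict[str, list[float]], budget: int
) -> list[str]:
    """Return the IDs of the `budget` most uncertain items."""
    ranked = sorted(
        predictions, key=lambda item_id: entropy(predictions[item_id]), reverse=True
    )
    return ranked[:budget]


# Hypothetical class probabilities from an initial model.
pool = {
    "doc_a": [0.5, 0.5],    # model is undecided
    "doc_b": [0.99, 0.01],  # model is confident
    "doc_c": [0.7, 0.3],
}
print(select_for_annotation(pool, budget=2))  # ['doc_a', 'doc_c']
```

The confident item is skipped because a human label for it would likely tell the model little it does not already predict.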

While these data annotation methods are foundational to AI applications, it's crucial to manage them effectively to maintain label quality and consistency. Not all methods are appropriate for every task or dataset, so careful consideration is needed when choosing the right approach.
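For crowdsourced labels, a basic quality-control step is to collect several votes per item and keep the majority label only when enough annotators agree. The following is a minimal sketch under that assumption. The function name, the agreement threshold, and the sample votes are illustrative, and production systems often weight annotators by reliability instead.

```python
from collections import Counter
from typing import Optional


def majority_vote(
    votes: list[str], min_agreement: float = 0.5
) -> tuple[Optional[str], float]:
    """Return (winning label or None, share of votes for the top label).

    The label is accepted only if its vote share is strictly greater
    than `min_agreement`; otherwise the item should be escalated.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return (label if agreement > min_agreement else None), agreement


print(majority_vote(["spam", "spam", "ham"])[0])  # spam
print(majority_vote(["spam", "ham"]))             # (None, 0.5)
```

Items that come back as `None` are natural candidates for expert review or for an extra round of votes.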

How do LLMs compare to traditional annotation pipelines?

Large Language Models (LLMs) have introduced a paradigm shift in data annotation pipelines compared to traditional methods. Here's how they compare:

Efficiency and Scalability

LLMs, particularly those capable of zero-shot or few-shot learning, can take over labeling tasks that were previously manual without extensive preparation, which accelerates the annotation process. They can handle large datasets more efficiently than human-only annotation workflows, for example in medical information extraction, where time-efficiency gains have been quantified.

Quality of Annotations

While LLMs can generate annotations quickly, the quality of these annotations may not always match expert human level, especially in specialized domains. Traditional pipelines often rely on human expertise to ensure high-quality annotations, but LLMs can assist in pre-annotation tasks to improve efficiency without fully replacing human judgment.

Cost-Effectiveness

The economic comparison between LLM-assisted pipelines and traditional methods shows that LLMs can be more cost-effective, especially when they are used to annotate a small portion of data which is then used to train other models like Graph Neural Networks (GNNs). However, the cost savings are not always straightforward, as the quality and specific requirements of the task can influence the overall cost.
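The trade-off can be made explicit with some simple arithmetic. The sketch below compares a human-only workflow with a hybrid one in which an LLM labels everything and humans review a fraction of the output. The function name is hypothetical and all prices and fractions are made-up placeholders, not measured figures.

```python
def annotation_costs(
    n_items: int,
    human_cost_per_item: float,
    llm_cost_per_item: float,
    review_fraction: float,
) -> tuple[float, float]:
    """Return (human-only cost, hybrid cost) for a labeling job.

    Hybrid cost = LLM labels every item + humans review a fraction of them.
    """
    if not 0.0 <= review_fraction <= 1.0:
        raise ValueError("review_fraction must be between 0 and 1")
    human_only = n_items * human_cost_per_item
    hybrid = n_items * llm_cost_per_item + n_items * review_fraction * human_cost_per_item
    return human_only, hybrid


# Placeholder numbers: 10,000 items, 0.10 per human label,
# 0.002 per LLM label, 20% of LLM labels reviewed by a human.
human_only, hybrid = annotation_costs(10_000, 0.10, 0.002, 0.2)
print(f"human-only: {human_only:.2f}, hybrid: {hybrid:.2f}")
```

If quality requirements push the review fraction toward 1.0, the hybrid cost approaches or exceeds the human-only cost, which is one reason the savings are task-dependent.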

Customization and Specialization

Traditional annotation pipelines are often tailored to specific tasks with data annotated accordingly for high accuracy. LLMs, on the other hand, are pre-trained on diverse datasets and may require fine-tuning to perform specialized tasks effectively.

Integration with Human Expertise

LLMs can be integrated with human annotators to create a hybrid approach, leveraging the strengths of both. This can lead to an optimal mix of speed and accuracy, where LLMs pre-annotate data and humans refine the output.

Use in Real-World Applications

Comparisons of LLM performance on real-world projects are necessary to get a clear picture of their effectiveness. Testing multiple LLMs on actual data labeling projects has shown that they may not always meet quality expectations set by traditional human-only annotation methods.
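A straightforward way to run such a comparison is to score each LLM's labels against a human-labeled gold set from the actual project. The sketch below reports accuracy and counts which gold labels were confused with which predictions. The function name and the sample labels are assumptions for illustration.

```python
from collections import Counter


def evaluate_labels(
    gold: list[str], predicted: list[str]
) -> tuple[float, Counter]:
    """Return accuracy and a count of (gold, predicted) mismatches."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(gold, predicted))
    accuracy = sum(g == p for g, p in pairs) / len(pairs)
    confusions = Counter((g, p) for g, p in pairs if g != p)
    return accuracy, confusions


# Hypothetical gold labels from human experts vs. labels from an LLM.
gold = ["pos", "neg", "neu", "neg"]
llm = ["pos", "neg", "neg", "neg"]
accuracy, confusions = evaluate_labels(gold, llm)
print(accuracy)          # 0.75
print(dict(confusions))  # {('neu', 'neg'): 1}
```

The confusion counts often matter more than the headline accuracy, since they show which classes an LLM systematically mislabels.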

Annotation for Specific Domains

In domains where high-quality annotations are required but resources are limited, integrating LLMs into data labeling workflows can be particularly beneficial. For instance, in medical NLP, LLMs have shown promise in accelerating the annotation process while maintaining quality.

While LLMs enhance data annotation with their efficiency and scalability, they are not a complete solution. They work best when combined with human expertise to maintain annotation quality, particularly in specialized fields. The decision to use LLM-assisted annotation or traditional methods should be based on the task's specific needs, the required annotation quality, and resource availability.