LLM Playground

by Stephen M. Walker II, Co-Founder / CEO

What is an LLM Playground?

An LLM (Large Language Model) playground is a platform where developers can experiment with, test, and deploy prompts for large language models.

These playgrounds are designed to facilitate the process of writing, code generation, troubleshooting, and brainstorming.

They allow for rapid iteration, testing, refining, and re-testing in quick succession, and enable the comparison of the performance of different models side-by-side. Some key features of LLM playgrounds include:

- User-friendly interface — LLM playgrounds provide an intuitive and accessible environment for developers to interact with large language models.

- Model comparison — Users can compare the performance of different models by running the same prompt across multiple models or running multiple prompts simultaneously.

- Data curation and governance — LLM playgrounds often offer features for data curation, data governance, and security, allowing developers to manage, version, and debug their data.

- Customizability — LLM playgrounds enable high customizability, giving developers the freedom to create an LLM that meets their specific needs.

Some popular LLM playgrounds include Vercel AI Playground, Perplexity Labs, OpenAI's Codex Playground, and Klu's Studio. These playgrounds offer access to top-of-the-line models, such as Llama 3.1, Claude 3.5 Sonnet, GPT-4 Turbo, and more, allowing developers to experiment and evaluate various LLMs and prompts.
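The model comparison feature described above, where the same prompt runs across several models or several prompts run at once, can be sketched roughly as follows. This is a minimal illustration and not how any particular playground is implemented: `run_grid`, `echo_model`, and `shout_model` are hypothetical names, and the two stub functions stand in for real model clients.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Callable, Dict, List, Tuple

# A "model" here is any callable that maps a prompt string to a response string.
ModelFn = Callable[[str], str]


def echo_model(prompt: str) -> str:
    """Hypothetical stub standing in for a real model client."""
    return f"echo: {prompt}"


def shout_model(prompt: str) -> str:
    """Second hypothetical stub, so there is something to compare against."""
    return prompt.upper()


def run_grid(prompts: List[str], models: Dict[str, ModelFn]) -> Dict[Tuple[str, str], str]:
    """Run every prompt against every model concurrently.

    Results are keyed by (model_name, prompt) so they can be laid out side by side.
    """
    pairs = list(product(models, prompts))
    with ThreadPoolExecutor() as pool:
        # pool.map preserves input order, so outputs line up with pairs.
        outputs = list(pool.map(lambda pair: models[pair[0]](pair[1]), pairs))
    return dict(zip(pairs, outputs))


if __name__ == "__main__":
    grid = run_grid(
        ["Define an LLM playground.", "List two playground features."],
        {"echo": echo_model, "shout": shout_model},
    )
    for (model_name, prompt), output in grid.items():
        print(f"{model_name:>5} | {prompt} -> {output}")
```

Swapping the stubs for real API clients keeps the same shape: one row per model, one column per prompt.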

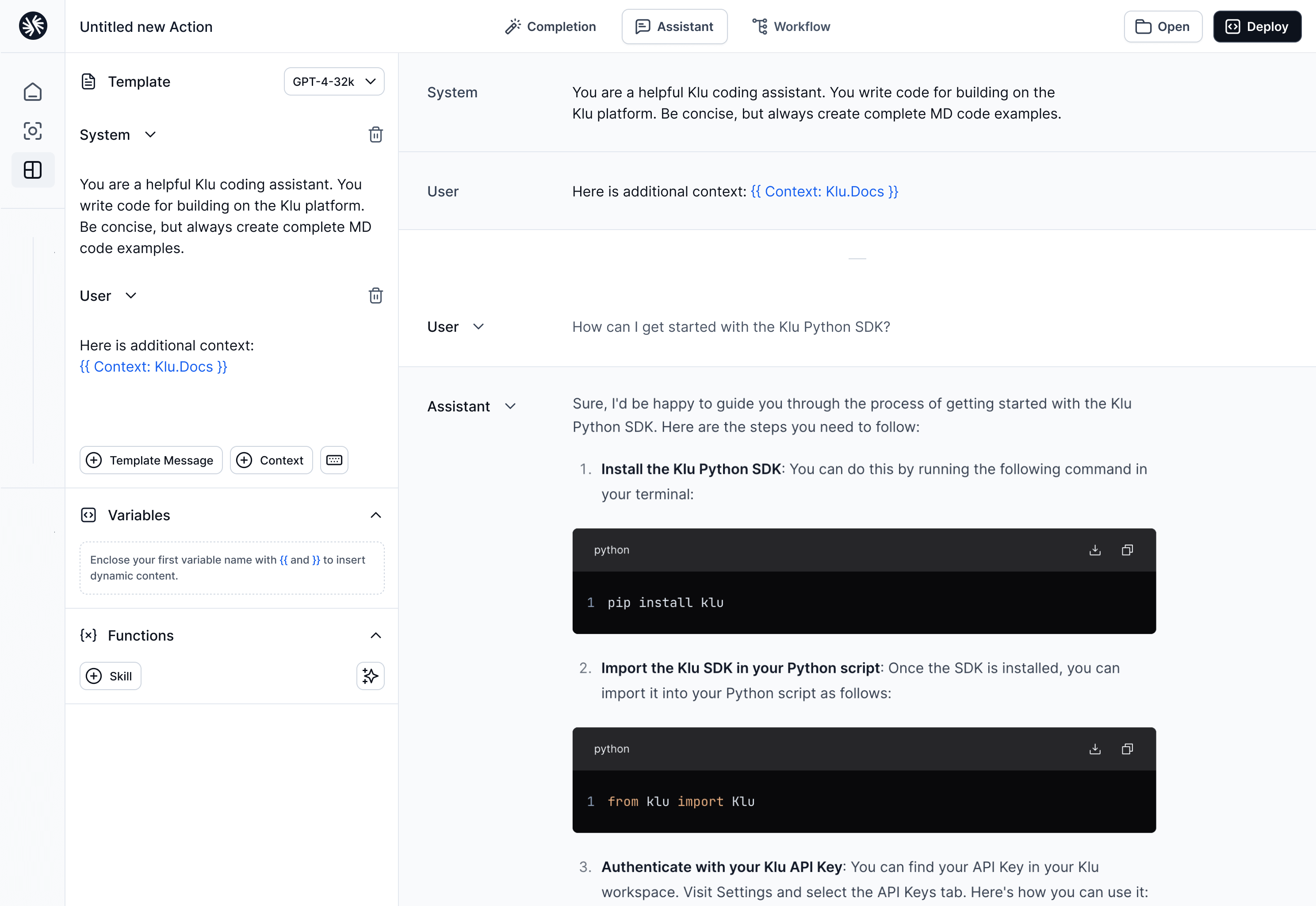

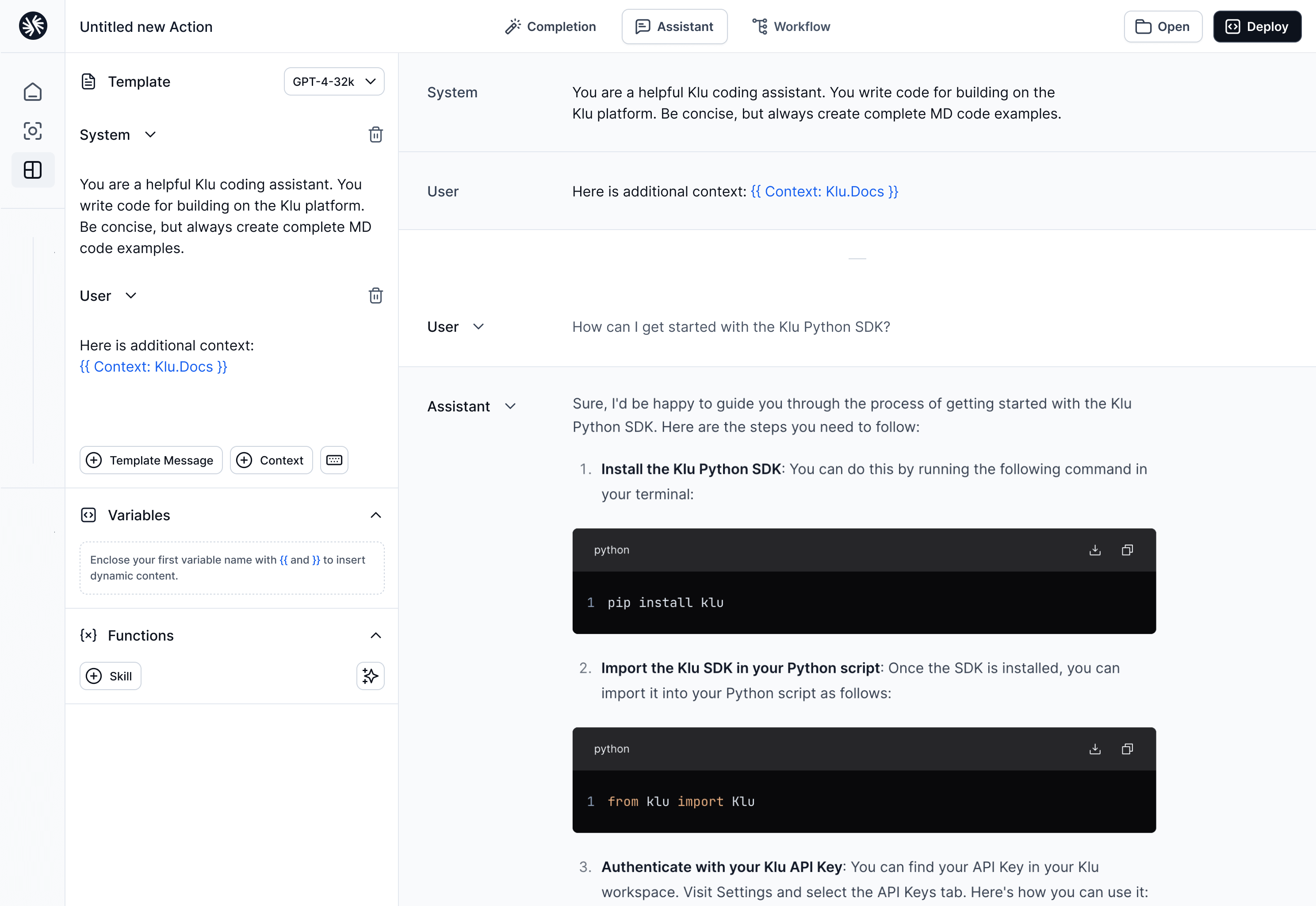

Klu Studio

Klu.ai Studio serves as a shared workspace for AI Teams, offering the ability to save, version, and test prompts with any LLM. Klu Studio is an interactive editor environment for domain experts, PMs, and AI engineers to collaborate on prompts, retrieval pipelines, and evaluations. It supports side-by-side comparison of model providers and evaluation of function calling. It also enables prompt deployment via an endpoint or a no-code UI. It features three primary modes: assistant, completion, and document.
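To make the idea of saving and versioning prompts concrete, here is a small in-memory sketch. It is not Klu's API or data model; `PromptStore` and its methods are hypothetical names chosen for illustration, and a real workspace would persist versions and track authorship.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PromptStore:
    """Hypothetical in-memory store: every save appends a new version."""

    _versions: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        """Store a new version of the named prompt and return its version number."""
        history = self._versions.setdefault(name, [])
        history.append(template)
        return len(history)  # version numbers start at 1

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return a specific version, or the latest one when version is None."""
        history = self._versions[name]
        return history[-1] if version is None else history[version - 1]


if __name__ == "__main__":
    store = PromptStore()
    store.save("summarize", "Summarize this text: {text}")
    store.save("summarize", "Summarize this text in three bullets: {text}")
    print(store.get("summarize", version=1))
    print(store.get("summarize"))
```

Keeping earlier versions retrievable is what makes it possible to test a revised prompt against the one it replaces.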

OpenAI Playground

This playground provides access to OpenAI's API and allows users to interact with models like GPT-4o and GPT-4 Turbo. It offers controls like model selection, prompt structure, function calling, and temperature adjustment. In November 2023, OpenAI added Assistants mode to the playground.

Google AI Studio

Google AI Studio (formerly MakerSuite) is a comprehensive platform that provides access to Google's advanced AI models, including Gemini 1.5 and Bard. It offers a range of controls for model selection, prompt customization, and real-time feedback. The studio emphasizes user-friendly interfaces and robust security features, allowing developers to experiment with AI capabilities while ensuring data privacy. As of November 2023, Google AI Studio introduced a new collaborative mode for enhanced team interactions.

Anthropic Playground

The Anthropic Playground is a platform designed for experimenting with Anthropic's advanced language models. It lets users interact with models like Claude 3 and offers features such as model selection, prompt customization, and real-time feedback. The playground emphasizes safety and alignment, allowing developers to explore AI capabilities while ensuring ethical use. As of July 2024, the Anthropic Playground includes enhanced tools for prompt engineering and model evaluation, making it a valuable resource for AI researchers and developers.

Perplexity Labs

Perplexity Labs offers a platform for users to test and experiment with large language models (LLMs) for free. It initially offered two online models, pplx-7b-online and pplx-70b-online, which are focused on delivering helpful, up-to-date, and factual responses. As of September 2024, the playground supports:

- llama-3.1-sonar-large-128k-online

- llama-3.1-sonar-small-128k-online

- llama-3.1-sonar-large-128k-chat

- llama-3.1-sonar-small-128k-chat

- llama-3.1-8b-instruct

- llama-3.1-70b-instruct

Vercel AI Playground

This playground provides access to state-of-the-art models like Google Gemini 1.5 Flash, Meta Llama 3.1, Anthropic Claude 3, Cohere Command Nightly, OpenAI GPT-4o, and many more. Users can compare these models' performance side-by-side or chat with them like any other chatbot. It also offers API and SDK code for building apps.

Examples of LLM Playgrounds

One example of an LLM playground comes from Klu, an American company whose platform lets developers test various LLM prompts and deploy the best ones into an application with full DevOps-style measurement and monitoring.

Another example is openplayground, a Python package that allows you to experiment with various LLMs on your laptop. It provides a user-friendly interface where you can play around with parameters, make model comparisons, and trace the log history.

There are also other LLM playgrounds available, such as the PromptTools Playground by Hegel AI, which allows developers to experiment with multiple prompts and models simultaneously, and the Vercel AI Playground, which provides access to top-of-the-line models like Llama 2, Claude 2, and GPT-4.

LLM Playgrounds Workflow

LLM playgrounds are interactive platforms for developers to experiment with, test, and evaluate LLMs. These environments support prompt writing, code generation, troubleshooting, and collaborative brainstorming.

Developers use these playgrounds to prototype LLM applications. The browser-based interface lets them interact with models, get a feel for their randomness, and recognize their limitations.
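Much of the randomness a developer sees in a playground comes from sampling settings such as temperature. The sketch below shows the usual idea of temperature scaling: logits are divided by the temperature before a softmax, so low values concentrate probability on the top token and high values flatten the distribution. The logits and function names are made up for illustration, and real models typically combine this with other sampling controls.

```python
import math
import random
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Convert raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {token: value / temperature for token, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {token: math.exp(value - peak) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def sample_token(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    # Hypothetical next-token scores, not taken from any real model.
    toy_logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0}
    rng = random.Random(0)
    for temp in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(toy_logits, temp)
        rounded = {token: round(p, 3) for token, p in probs.items()}
        print(f"temperature={temp}: {rounded}, sample={sample_token(toy_logits, temp, rng)}")
```

Running the same prompt several times at different temperatures in a playground is the practical counterpart of this calculation.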

Rapid iteration is a key feature, enabling quick cycles of testing, refinement, and retesting. Developers can also compare different models' performances directly.

Beyond model testing, LLM playgrounds provide tools for data management, governance, and security, along with extensive customization options to tailor LLMs to specific requirements.

To use an LLM playground, developers typically start by setting the LLM blueprint configuration, including the base LLM and, optionally, a system prompt and vector database. They can then interact with the LLM by sending prompts and receiving responses, fine-tuning the system prompt and settings until they are satisfied. Once multiple LLM blueprints are saved, developers can use the playground's Comparison tab to compare them side-by-side.
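The blueprint workflow above can be sketched as a small data structure plus a comparison helper. Everything here is an assumption made for illustration: `LLMBlueprint`, `build_messages`, `compare_blueprints`, and `fake_run` are hypothetical names, the model identifiers are placeholders, and the vector database is recorded as configuration only, with retrieval left out.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


@dataclass(frozen=True)
class LLMBlueprint:
    """Hypothetical blueprint: a base LLM plus optional system prompt and vector database."""

    name: str
    base_llm: str
    system_prompt: Optional[str] = None
    vector_database: Optional[str] = None  # stored only; retrieval is not sketched here


def build_messages(blueprint: LLMBlueprint, user_prompt: str) -> List[Message]:
    """Assemble the chat messages that would be sent for one user prompt."""
    messages: List[Message] = []
    if blueprint.system_prompt:
        messages.append({"role": "system", "content": blueprint.system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def compare_blueprints(
    blueprints: List[LLMBlueprint],
    user_prompt: str,
    run: Callable[[LLMBlueprint, List[Message]], str],
) -> Dict[str, str]:
    """Send the same prompt through each saved blueprint and collect responses by name."""
    return {bp.name: run(bp, build_messages(bp, user_prompt)) for bp in blueprints}


def fake_run(blueprint: LLMBlueprint, messages: List[Message]) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"{blueprint.base_llm} saw {len(messages)} message(s)"


if __name__ == "__main__":
    saved = [
        LLMBlueprint("terse", "model-a", system_prompt="Answer briefly."),
        LLMBlueprint("plain", "model-b"),
    ]
    for name, response in compare_blueprints(saved, "What is a playground?", fake_run).items():
        print(f"{name}: {response}")
```

Treating a blueprint as immutable once saved keeps comparisons meaningful, since a changed system prompt becomes a new blueprint and not a silent edit.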

There are several LLM playgrounds available, including Vercel AI Playground, Klu.ai, SuperAnnotate's LLM toolbox, and DataRobot's playground. These platforms provide user-friendly interfaces and a range of features to assist developers in working with LLMs.

Popular Open LLM Playgrounds

Here are some popular LLM (Large Language Model) playgrounds where developers can experiment with, test, and deploy prompts for large language models:

- Vercel AI Playground — This platform allows access to top-of-the-line models like Llama 2, Claude 2, Command Nightly, GPT-4, and even open-source models from HuggingFace. You can compare these models' performance side-by-side or just chat with them like any other chatbot.

- Chatbot Arena — This platform lets you experience a wide variety of models like Vicuna, Koala, RWKV-4-Raven, Alpaca, ChatGLM, LLaMA, Dolly, StableLM, and FastChat-T5. You can compare the model performance, and according to the leaderboard, Vicuna 13B leads with an Elo rating of 1169.

- Open Playground — This Python package allows you to use all of your favorite LLMs on your laptop. It offers models from OpenAI, Anthropic, Cohere, Forefront, HuggingFace, Aleph Alpha, and llama.cpp.

- LiteLLM — This is a Python tool that allows you to create a playground to evaluate multiple LLM providers in less than 10 minutes.

These platforms provide a controlled environment for developers to experiment with and test large language models, facilitating the development and deployment of AI applications.
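For readers unfamiliar with the Elo ratings mentioned for Chatbot Arena, the classic Elo update after a single head-to-head comparison looks like the sketch below. This is the textbook formula and not a description of how Chatbot Arena computes its leaderboard; the K-factor of 32 is an assumed value, and the function names are hypothetical.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> Tuple[float, float]:
    """Return new ratings after one match.

    score_a is 1.0 if A wins, 0.5 for a tie, and 0.0 if B wins.
    k (assumed to be 32 here) controls how far a single result moves the ratings.
    """
    expected_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - expected_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - expected_a))
    return new_a, new_b


if __name__ == "__main__":
    # Two equally rated models; a human voter prefers model A's answer.
    print(update_elo(1000.0, 1000.0, score_a=1.0))  # (1016.0, 984.0)
```

Because the winner gains exactly what the loser gives up, the total rating across the two models stays constant after each comparison.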

How does Klu Studio help AI Teams?

Klu.ai: An LLM App Platform for building, versioning, and fine-tuning GPT-4 applications.

- Collaboration: Provides tools for team-based prompt engineering, prototyping, and workflow development.

- Deployment: Enables the launch of LLM apps with A/B testing, performance evaluations, and model fine-tuning capabilities.

- Data Management: Features tracking for system performance, user preferences, and data curation for model training.

- Security: Offers SOC2-compliant self-hosting and private cloud options for enhanced data protection.

- Pricing: Includes a free tier for personal projects and scalable plans for enterprise needs, with complimentary GPT-4 Turbo trials.

- Search Integration: Provides an AI-powered search engine to integrate and query across multiple work applications.

The benefits of using Klu.ai over other comparable products are centered around its comprehensive suite of capabilities tailored for rapid development, deployment, and optimization of AI systems. Here are some key advantages:

- Rapid Prototyping — Klu.ai enables teams to gather insights and iterate on LLM apps in under 10 minutes, promoting a faster development cycle.

- Collaboration and Versioning — Team collaboration features and change versioning, which can be crucial for managing complex AI projects.

- Deployment Environments — Deploy environments that help manage the different stages of app development, from testing to production.

- Optimization Tools — Platform includes A/B experiments, evaluations, and fine-tuning capabilities to optimize AI applications.

- Security and Compliance — Private cloud option and SOC2 compliance, which is important for enterprise-scale applications that require high security and adherence to regulatory standards.

- Scalability — With plans that support from 1k to 100k+ monthly runs and unlimited documents, Klu.ai is designed to scale with the needs of the project or organization.

- Free Tier — Klu.ai has a free tier suitable for hobby projects, which is a great starting point for individuals or small teams to experiment with AI apps without initial investment.