What is Data Labeling in Machine Learning?

by Stephen M. Walker II, Co-Founder / CEO

Data Labeling

Data labeling is the process of assigning labels to raw data, transforming it into a structured format for training machine learning models. This step is essential for models to classify data, recognize patterns, and make predictions. It involves annotating data types like images, text, audio, or video with relevant information, which is critical for supervised learning tasks such as classification and object detection.

The process is often laborious, requiring extensive human effort, particularly for complex data types. The quality of labeling directly affects model performance, making clear guidelines, skilled annotators, and stringent quality control imperative. Innovations like active learning and crowdsourcing, along with sophisticated data labeling tools, have streamlined the process, enhancing label quality and efficiency while reducing costs.

Why is data labeling important?

Data labeling is a critical step in the development of machine learning (ML) models, particularly for supervised learning. It involves identifying raw data (like images, text, audio, etc.) and adding informative labels to provide context, which helps the ML model make accurate predictions. The importance of data labeling lies in its direct impact on the quality and performance of ML models.

Enhances Accuracy

Accurate labels give the model a reliable training signal, so it learns the intended relationship between inputs and outputs and yields the expected output. Mislabeled examples teach the model the wrong associations and lower its accuracy.

Facilitates Better Predictions

More accurate labels generally improve model predictions, so despite the high cost of labeling, the value it provides is usually worth the investment.

Enables Real-World Understanding

Data labeling allows AI and ML algorithms to build an accurate understanding of real-world environments and conditions.

Improves Quality of Training Data

With accurately labeled training data, AI and ML systems typically take less time to train and produce more useful output.

Captures Edge Cases

Manual data labeling can capture edge cases that may be easily overlooked by automated systems.

However, data labeling is not without its challenges. It can be time-consuming, expensive, and prone to human error. Both automated and manual data labeling methods have their strengths and weaknesses. Automated data labeling can handle large datasets efficiently but may struggle with complex tasks. Manual data labeling, while more accurate and flexible, can be time-intensive and vulnerable to inconsistencies.

To optimize data labeling, best practices include collecting diverse and representative data, defining a clear annotation process, and putting a quality assurance process in place. For large-scale projects or cases where accuracy is of utmost importance, using a combination of both manual and automated methods can be beneficial.

What is Data Labeling in Machine Learning?

Data labeling is a critical step in the development of machine learning algorithms. It involves tagging or annotating data, which can be in various forms like text, images, or videos, with correct and informative labels. These labels range from simple tags like 'cat' or 'dog' for an image recognition algorithm to more complex metadata for more advanced machine learning systems, and they serve as ground truth data.

This information enables the machine learning model to learn from these pre-defined inputs and outputs, improve its predictive accuracy, and perform tasks such as pattern recognition, image classification, language translation, and more with greater precision.

Data labeling, essentially, is the human intervention in the machine learning data preparation process that plays a fundamental role in teaching the algorithms and improving AI performance.

What are the different types of data labeling techniques?

Data labeling techniques can be categorized based on whether humans or computers are performing the labeling, and they can take various forms:

Human-Based Labeling

- Internal Labeling
  - Performed in-house by expert data scientists within a company.
  - Offers higher security and accuracy due to direct control over the process.
- Crowdsourcing
  - Outsourcing tasks to a large group of people, often through online platforms.
  - Can be cost-effective and scalable but may require quality control measures.
- Professional Annotation Services
  - Specialized companies provide data labeling services with experienced labelers.
  - This approach can ensure high-quality labels but may be more expensive.

Computer-Assisted Labeling

- Semi-Automated Labeling
  - Combines human oversight with machine learning models to label data.
  - Can improve efficiency and reduce the cognitive load on human labelers.
- Active Learning
  - A machine learning model is trained on a small amount of labeled data and then used to score unlabeled data, selecting the examples it is least certain about.
  - Human labelers label or correct those examples, and the model is retrained, iteratively improving it.
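One common way to decide which examples humans should look at in an active learning loop is uncertainty sampling. The sketch below is illustrative only: the example ids and probabilities are made up, and `select_for_labeling` is a hypothetical helper, not part of any particular labeling tool. It ranks unlabeled examples by the entropy of the model's predicted class probabilities and returns the most uncertain ones.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, k):
    """Return the ids of the k examples the model is least sure about.

    predictions maps an example id to a list of class probabilities.
    """
    ranked = sorted(
        predictions,
        key=lambda example_id: entropy(predictions[example_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical model outputs for four unlabeled images (two classes).
predictions = {
    "img_001": [0.98, 0.02],
    "img_002": [0.55, 0.45],
    "img_003": [0.80, 0.20],
    "img_004": [0.50, 0.50],
}

to_review = select_for_labeling(predictions, k=2)
print(to_review)  # ['img_004', 'img_002']
```

The two examples closest to a 50/50 split are sent to human labelers first, since a correct label there is likely to teach the model the most.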

Quality Control Techniques

- Labeler Consensus
  - Multiple labelers annotate the same data, and their results are compared to counteract individual error or bias.
- Label Auditing
  - Regular checks on the accuracy of labels with updates as necessary to maintain quality.
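Labeler consensus can be sketched as a simple majority vote. The code below is a minimal illustration under stated assumptions: the annotation data is invented, `majority_label` is a hypothetical helper, and the 0.6 agreement threshold is an arbitrary choice for the example.

```python
from collections import Counter


def majority_label(votes):
    """Return (label, agreement) for one item; label is None on a tie."""
    counts = Counter(votes).most_common()
    top_label, top_count = counts[0]
    agreement = top_count / len(votes)
    if len(counts) > 1 and counts[1][1] == top_count:
        return None, agreement
    return top_label, agreement


# Hypothetical annotations: each item was labeled by two or three people.
annotations = {
    "email_1": ["spam", "spam", "not spam"],
    "email_2": ["not spam", "not spam", "not spam"],
    "email_3": ["spam", "not spam"],
}

consensus = {item: majority_label(votes) for item, votes in annotations.items()}

# Items with a tie or low agreement go back for expert review.
needs_review = [
    item
    for item, (label, agreement) in consensus.items()
    if label is None or agreement < 0.6
]
print(needs_review)  # ['email_3']
```

Items that fail the agreement check are natural candidates for the label audits described above.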

Specialized Techniques

- Synthetic Data Generation
  - Creating artificial data with known labels to train models, especially useful when real labeled data is scarce or expensive to obtain.
- Transfer Learning
  - Leveraging models pre-trained on similar tasks to reduce the amount of required labeled data.
- Categorization, Segmentation, Sequencing, and Mapping
  - Specific types of annotations that are used depending on the nature of the data and the problem being addressed.

A blended approach using both automated and human-based labeling is often recommended to balance efficiency, accuracy, and cost. The choice of technique depends on factors such as the complexity of the task, data volume, team size, and available resources.

What is the importance of Data Labeling in Machine Learning?

Data labeling plays a crucial role in supervised learning tasks as it provides the model with the necessary information to learn from the data. It allows the model to understand the relationship between the input data and the output data, which is essential for the model to make accurate predictions. Data labeling is critically important in machine learning for several reasons:

- Supervised Learning Requires Labeled Data: Many supervised learning methods, from classical classifiers and regressors to neural networks, require large amounts of labeled data to learn from. Data labeling provides the labels that allow supervised machine learning models to train and generalize. Without quality labeled data, performance suffers.
- Enables Deep Learning: Deep learning techniques have achieved state-of-the-art results across many domains including computer vision, NLP, and speech recognition. Their success relies on large, high-quality labeled training datasets such as ImageNet (millions of labeled images) and MS-COCO (hundreds of thousands of annotated images) in computer vision.
- Reduces Bias and Noise: Proper data labeling can help reduce inadvertent biases, incorrect assumptions, labeling errors and noise in training data that negatively impact model performance and fairness. High-quality labeling helps keep noisy, biased and unrepresentative data out of training.
- Increases Model Accuracy: More high-quality labeled training data generally translates to machine learning models that are more accurate and better at generalizing to real-world use cases.
- Saves Time and Resources: Labeling raw data is laborious, but investing in it upfront avoids rework later. A well-labeled dataset can be reused across iterative experiments with different models and algorithms.

Quality labeled data unlocks the potential of machine learning. As models and datasets grow ever larger, scaling data labeling becomes critical to AI advancement and adoption.

How is Data Labeling performed in Machine Learning?

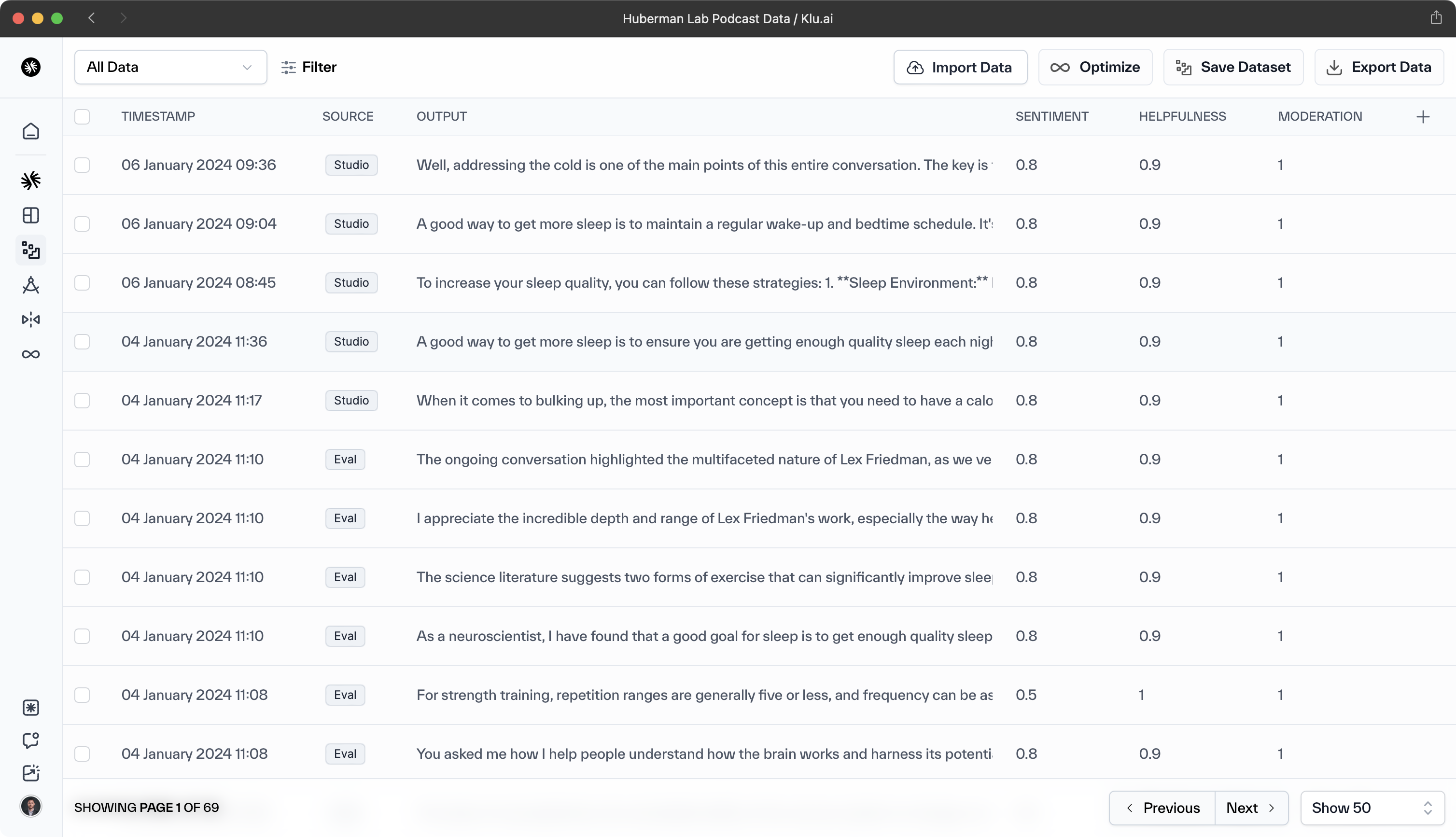

Data labeling, a critical process in machine learning, is often performed manually by human annotators following specific guidelines. However, the advent of automated data labeling techniques, such as those offered by Klu.ai using advanced models like GPT-4, has made the process more efficient. These techniques leverage machine learning algorithms to automate the labeling process.

The process of data labeling for machine learning typically involves several key steps. Initially, the machine learning task is defined, and the required labels, such as classification labels, bounding boxes, segmentation masks, transcripts, sentiment scores, etc., are determined. Detailed guidelines are then created to ensure labeling consistency across large datasets. These guidelines cover aspects like valid label values, handling of edge cases, subjective decisions, and quality assurance.
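Guidelines about valid label values can be partly enforced in code. The following is a minimal sketch, assuming a hypothetical three-class sentiment task; `ALLOWED_LABELS`, the record fields, and `validate_record` are illustrative names rather than any tool's actual API.

```python
# Hypothetical label schema for a sentiment classification task.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def validate_record(record):
    """Return a list of guideline violations for one labeled record."""
    problems = []
    if not record.get("text", "").strip():
        problems.append("empty text")
    if record.get("label") not in ALLOWED_LABELS:
        problems.append(f"invalid label: {record.get('label')!r}")
    return problems


records = [
    {"text": "Great product", "label": "positive"},
    {"text": "Arrived late", "label": "angry"},
    {"text": "   ", "label": "neutral"},
]

# Map each record index to the problems found (an empty list means it passed).
report = {index: validate_record(record) for index, record in enumerate(records)}
for index, problems in report.items():
    print(index, problems)
```

Checks like these catch mechanical errors early, leaving human reviewers free to focus on edge cases and subjective decisions.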

Following the creation of guidelines, human annotators manually assign labels to raw data, such as images, texts, videos, and audio recordings, using various interfaces and data labeling tools. The quality and throughput of this process heavily depend on the tooling used. Once the labeling is complete, the labels are verified and validated through spot checks, algorithms, and review by expert labelers. Any labels identified as incorrect are flagged and corrected to ensure high quality.
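A spot check can be as simple as drawing a random sample of labeled items for expert review and measuring how many labels the reviewer rejects. The sketch below assumes a 5% audit fraction and invented reviewer verdicts; both are illustrative choices, not recommendations from the text.

```python
import random


def draw_audit_sample(item_ids, fraction, seed=0):
    """Pick a reproducible random subset of labeled items for expert review."""
    rng = random.Random(seed)
    sample_size = max(1, round(len(item_ids) * fraction))
    return rng.sample(item_ids, sample_size)


def observed_error_rate(audit_results):
    """audit_results maps item id -> True if the reviewer found the label wrong."""
    return sum(audit_results.values()) / len(audit_results)


item_ids = [f"item_{i}" for i in range(200)]
audit_ids = draw_audit_sample(item_ids, fraction=0.05)

# Hypothetical reviewer verdicts: only the first audited item was mislabeled.
audit_results = {item_id: (index == 0) for index, item_id in enumerate(audit_ids)}

rate = observed_error_rate(audit_results)
print(len(audit_ids), rate)  # 10 0.1
```

Fixing the random seed makes the audit reproducible, so the same sample can be re-examined after labels are corrected.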

The final step involves preparing and exporting the labeled dataset, complete with metadata like label distributions, for use by machine learning teams. The process doesn't end here, though. It's crucial to continuously test model performance to identify weak points in the labeled training data and use techniques like active learning to add missing labels over time. This iterative improvement of the data labeling process is key to achieving higher data quality, which in turn enables the development of better machine learning models.
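As a rough illustration of the export step, the snippet below bundles labeled records with a label distribution summary and serializes them as JSON. The field names (`num_examples`, `label_distribution`, `records`) are assumptions made for this example, not a standard format.

```python
import json
from collections import Counter


def build_export(records):
    """Bundle labeled records with summary metadata for downstream teams."""
    counts = Counter(record["label"] for record in records)
    total = len(records)
    return {
        "num_examples": total,
        "label_distribution": {
            label: count / total for label, count in sorted(counts.items())
        },
        "records": records,
    }


# Hypothetical labeled image records.
records = [
    {"id": "img_001", "label": "cat"},
    {"id": "img_002", "label": "dog"},
    {"id": "img_003", "label": "cat"},
    {"id": "img_004", "label": "cat"},
]

export = build_export(records)
payload = json.dumps(export, indent=2)
print(export["label_distribution"])  # {'cat': 0.75, 'dog': 0.25}
```

A skewed distribution like this one is an early signal that additional examples of the rarer class may need to be collected and labeled.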

What are some of the challenges associated with Data Labeling in Machine Learning?

Data labeling, a critical component of machine learning, faces several challenges. The process can be time-consuming and costly, especially for large datasets. The accuracy of labels is crucial as errors can significantly affect model performance. Furthermore, obtaining necessary labels can be challenging due to privacy concerns or other restrictions.

The main challenges include:

- Time and Cost: Manual data labeling is a slow and labor-intensive process. The creation of large labeled datasets can require thousands of human hours, making it an expensive task.
- Label Consistency: Ensuring consistent labels across large teams of human labelers working on massive datasets is a significant challenge.
- Label Accuracy: It's difficult to ensure all examples are labeled correctly according to the guidelines. Even a small label error rate can significantly impact model accuracy.
- Bias and Subjectivity: Labels can be influenced by unconscious human biases and subjectivity, leading to unfair skews in the training data.
- Data Diversity: Labeled datasets often fail to generalize due to a lack of diversity and coverage of niche edge cases.
- Changing Data and Labels: Labeling guidelines and real-world data distributions change over time, necessitating updates to existing labels.
- Sensitive Data Labeling: Special care is required when labeling and storing sensitive personal data, such as medical images or conversations.
- Scalability: As model and dataset sizes grow exponentially, scaling up data labeling becomes a fundamental challenge and bottleneck.

The machine learning community is continuously innovating better data labeling workflows, interfaces, active learning algorithms, and training paradigms that rely less on large-scale human labeling to address these challenges.

How can Data Labeling be used to improve the performance of Machine Learning models?

Data labeling plays a critical role in enabling and enhancing machine learning models. Here's a look at some of the key ways that data labeling helps in building more accurate and robust models:

Provides Training Data

Most machine learning models require large volumes of quality, labeled data in order to learn effectively. Data labeling provides the essential input that allows models to identify patterns and make predictions based on examples. The more extensive the labeling, the more adept models typically become at generalizing to new data.

Enables Supervised Learning

Many popular machine learning approaches like classification and regression rely on supervised learning. This means that models train on examples that contain the target variable, the thing you want the model to predict. Data labeling provides these targets, such as categories or numeric values, enabling the models to learn the mapping from inputs to outputs. Without labels, only approaches that do not need them, such as unsupervised or self-supervised learning, are available.

Allows Evaluating Model Accuracy

Labels give a ground truth benchmark that model predictions can be compared against. Performance metrics like precision, recall and F1 score need the true labels for test data in order to calculate how accurate the model's predictions are. Labeling enables this model performance measurement.
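To make the role of ground truth labels concrete, here is a small sketch that computes precision, recall, and F1 for one class by comparing predictions against true labels. The label lists are invented for illustration, and `precision_recall_f1` is a hypothetical helper written with the standard definitions of these metrics.

```python
def precision_recall_f1(true_labels, predicted_labels, positive="spam"):
    """Compute precision, recall, and F1 for the chosen positive class."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Hypothetical ground truth labels and model predictions for five emails.
true_labels = ["spam", "spam", "not spam", "not spam", "spam"]
predicted_labels = ["spam", "not spam", "not spam", "spam", "spam"]

precision, recall, f1 = precision_recall_f1(true_labels, predicted_labels)
print(round(precision, 3), round(recall, 3), round(f1, 3))  # 0.667 0.667 0.667
```

None of these numbers can be computed without the true labels, which is why a held-out labeled test set is needed even when training data is plentiful.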

Identifies Biases

Analyzing where a model's predictions are mistaken can highlight weaknesses, biases or oddly distributed data. Additional labeling can generate training data to improve performance for these types of examples.

Supports Incremental Refinement

As models improve over time, new labeled data can continue expanding what a model can handle. New classes and scenarios can be incrementally added to the training data to further teach the models.

Higher quality and larger volumes of labeled data translate to better machine learning models that can adeptly handle complexity and generalize accurately. Investing in labeling pays dividends in enhanced model capabilities.

What are some of the potential applications of Data Labeling in Machine Learning?

Data labeling is a crucial process in training machine learning models, enabling them to understand and interpret the world. It has a wide range of applications, including:

Computer Vision — Labeled image data is essential for tasks such as image classification, object detection, and semantic segmentation. By manually drawing bounding boxes around objects or classifying images, we provide vital training data for computer vision models. These models are used in various applications, from self-driving vehicles to medical image analysis.

Natural Language Processing (NLP) — Manual annotation of text data is required for many NLP tasks, including sentiment analysis, named entity recognition, topic labeling, and intent identification. These human-labeled examples are used to train models for applications like chatbots, search engines, and text summarization, equipping language models with real-world understanding.

Speech Recognition — Manual transcription of audio clips allows us to train machine learning models for speech recognition applications. These models map acoustic signals to text, enabling real-time speech transcription and voice assistants. The performance of these applications heavily depends on labeled samples of human speech.
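For the computer vision case, bounding box labels are often compared using intersection over union (IoU), for example to check whether two annotators drew roughly the same box. The sketch below assumes boxes stored as `(x, y, width, height)` with `(x, y)` as the top-left corner; the coordinates are made up.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, width, height) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union else 0.0


# Hypothetical boxes drawn by two annotators around the same object.
annotator_1 = (10, 10, 100, 100)
annotator_2 = (60, 10, 100, 100)

overlap = iou(annotator_1, annotator_2)
print(round(overlap, 3))  # 0.333
```

A low IoU between annotators suggests the guidelines for where a box should start and end need tightening.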

These are just a few examples of how data labeling can unlock transformative machine learning capabilities. As models require more supervision to improve, scaling data labeling efforts will lead to more opportunities in areas like personalized recommendations and predictive analytics.

FAQs

How can I automatically label data with Klu.ai?

Klu.ai provides tools for automating the data labeling process using LLMs. Enterprise workspaces have automatic labeling of all generative data. By pre-training models on similar datasets, Klu.ai can predict labels for new, unlabeled data with a certain level of accuracy. This can significantly speed up the labeling process, although human verification is recommended to ensure the highest quality of labels.

What is the advantage of the data label option?

The advantage of the data label option is that it provides a structured way to organize and interpret raw data. This is essential for training machine learning models, as it allows them to learn from examples and improve their accuracy in making predictions or classifications.

What is labeled data used for?

Labeled data is used as training material for supervised machine learning models. It serves as a reference point that models use to learn and make predictions. It's crucial for tasks such as image recognition, natural language processing, and any other application where the model needs to understand and categorize input data.

Why is labeling important in AI?

Labeling is important in AI because it provides the ground truth that supervised learning algorithms need to function. Without labels, a model cannot accurately learn to predict or classify new data. Labeling is also essential for evaluating the performance of AI models, as it allows for comparison between the model's predictions and the actual labels.

What is a data label?

A data label is a descriptor that provides information about the nature of the data. It can be a simple tag, such as 'spam' or 'not spam' for emails, or more complex annotations, such as the emotions conveyed in a sentence or the objects contained in an image. Data labels are used to train and evaluate machine learning models.

What are labels in machine learning?

In machine learning, labels are the annotations or tags that describe each example in a dataset. They indicate the output you want the machine learning model to predict. For example, in a dataset of emails, labels might indicate which emails are 'spam' or 'not spam.' Labels are essential for supervised learning, as they enable the model to learn from the data and make accurate predictions.