What is connectionism?

by Stephen M. Walker II, Co-Founder / CEO

What is connectionism?

Connectionism is an approach within cognitive science that models mental or behavioral phenomena as the emergent processes of interconnected networks of simple units. These units are often likened to neurons, and the connections (which can vary in strength) are akin to synapses in the brain.

The core idea is that cognitive functions arise from the collective interactions of a large number of processing units, not from single neurons acting in isolation.

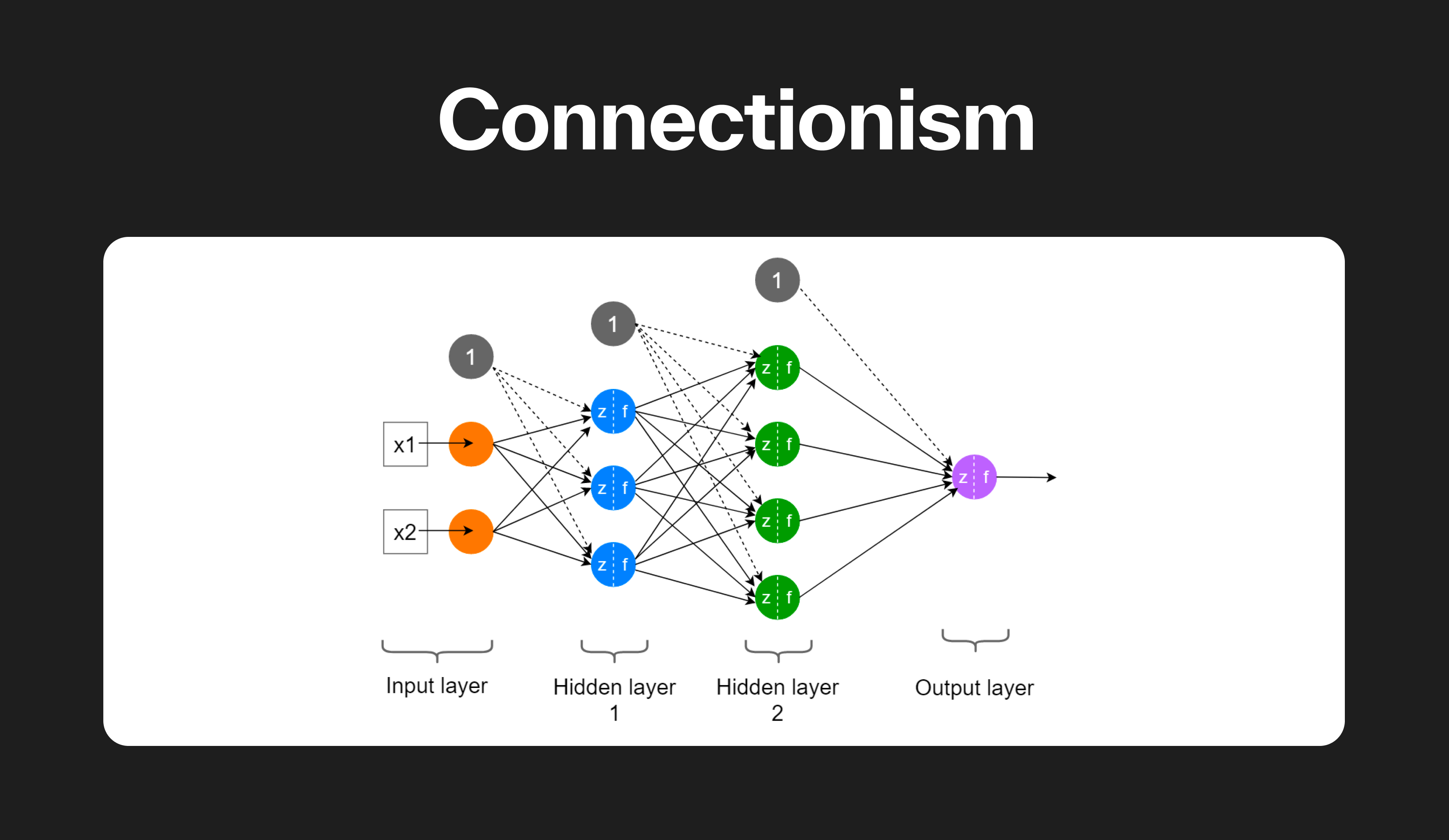

Connectionist models, also known as artificial neural networks, are inspired by the structure and function of the human brain.

They consist of layers of interconnected nodes or "neurons," which process information by responding to input signals and passing on their output to subsequent layers.
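As a rough illustration of how a layered network passes signals forward, the sketch below computes the activations of a tiny two-layer network. The weights, the layer sizes, and the choice of a sigmoid activation are all assumptions made for the example, not values taken from any particular model.

```python
import math

def sigmoid(x):
    """Squash a net input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Compute the activations of one layer of units.

    weights[j][i] is the (illustrative) connection strength from
    input i to unit j; biases[j] is unit j's bias.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]

# Hand-picked, hypothetical weights: 2 inputs -> 2 hidden units -> 1 output.
hidden_weights = [[0.5, -0.4], [0.3, 0.8]]
hidden_biases = [0.0, -0.1]
output_weights = [[1.2, -0.7]]
output_biases = [0.05]

# Each layer responds to its input and hands its output to the next layer.
hidden = layer_forward([1.0, 0.0], hidden_weights, hidden_biases)
output = layer_forward(hidden, output_weights, output_biases)
print("hidden activations:", hidden)
print("output activation:", output)
```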

Learning in these networks typically occurs through the adjustment of connection weights between nodes, which is analogous to the strengthening or weakening of synapses in biological neural networks.
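One simple way to picture weight adjustment is the delta rule for a single linear unit, sketched below. The learning rate, the starting weights, and the single training example are assumptions chosen for illustration; real networks use many examples and usually nonlinear units.

```python
def predict(weights, inputs):
    """Output of a single linear unit: the weighted sum of its inputs."""
    return sum(w * x for w, x in zip(weights, inputs))

def delta_rule_step(weights, inputs, target, learning_rate=0.1):
    """Nudge each weight in proportion to the error and to its input."""
    error = target - predict(weights, inputs)
    return [w + learning_rate * error * x for w, x in zip(weights, inputs)]

weights = [0.0, 0.0]               # start with no connection strength
inputs, target = [1.0, 2.0], 1.0   # one illustrative training example

errors = []
for _ in range(20):
    errors.append(abs(target - predict(weights, inputs)))
    weights = delta_rule_step(weights, inputs, target)

print("first error:", errors[0])
print("last error:", errors[-1])
print("learned weights:", weights)
```

With these particular numbers the error halves on every step, which makes the strengthening of the connections easy to follow by hand.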

Connectionism contrasts with symbolic AI, which relies on hand-coded rules and representations. While symbolic AI is effective for tasks with clear, logical structures, it struggles with the variability and complexity of real-world data.

Connectionism, on the other hand, excels at tasks like pattern recognition and can adapt to new information, making it particularly useful in fields like computer vision and natural language processing.

The history of connectionism includes several waves of interest and development. The first wave in the 1940s and 1950s produced simple models such as the McCulloch-Pitts neuron and Rosenblatt's perceptron, but enthusiasm waned once it became clear that single-layer networks could not learn some simple functions, such as XOR. Connectionism experienced a resurgence in the 1980s, driven by the popularization of backpropagation for training multi-layer networks and by increased computational power, leading to its current prominence in cognitive science and AI.

Connectionist approaches have been applied to a wide range of cognitive tasks, including language processing, memory, and perception. They offer a more biologically plausible model of cognition compared to classical computational approaches and have inspired a rich body of research and theoretical development.

In summary, connectionism is a theoretical framework in cognitive science and AI that uses neural network models to explain mental processes and behaviors as emerging from the interactions of many simple processing units. It has become a dominant approach to modeling complex cognitive functions and has significantly influenced the development of modern AI.

How does connectionism differ from other approaches to artificial intelligence?

Connectionism differs from other approaches to artificial intelligence, most notably symbolic AI, in what each does well. Connectionism provides a more biologically plausible model of cognition and excels at pattern recognition and adaptation to new information. Symbolic AI is effective for tasks with clear, logical structures and allows explicit rule-based reasoning.

The two approaches differ in several key ways:

- Representation of Information: In symbolic AI, information is represented by strings of symbols, similar to how data is represented in computer memory or on pieces of paper. Connectionism instead holds that information is stored non-symbolically in the weights, or connection strengths, between the units of a neural net.
- Learning and Reasoning: Symbolic AI treats the use of knowledge in reasoning and learning as critical to producing intelligent behavior. Connectionist AI holds that learning associations from data, with little or no prior knowledge, is crucial for understanding behavior.
- Modeling Approach: Symbolic AI models are hand-coded by humans and rely on inserting human knowledge and rules into the system. Connectionist AI models are instead based on how the human brain and its interconnected neurons work. Learning in these models typically occurs through the adjustment of connection weights between nodes, which is analogous to the strengthening or weakening of synapses in biological neural networks.
- Handling of Real-World Variability: Symbolic AI can struggle with the variability and complexity of real-world data, because it is time-consuming to create rules for every possibility. Connectionist AI excels at tasks like pattern recognition and can adapt to new information, making it particularly useful in fields like computer vision and natural language processing.
- Low-Level vs High-Level Modeling: Connectionists engage in "low-level" modeling, trying to ensure that their models resemble neurological structures. Computationalists (another term for proponents of symbolic AI) work at a higher level, positing symbolic models that need not resemble the underlying brain structure.
- Focus of Study: Connectionists focus on learning from environmental stimuli and storing this information in the network's weights. Computationalists generally focus on the structure of explicit symbols (mental models) and rules.
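The contrast between hand-coded rules and knowledge stored in weights can be sketched with a toy task. Below, logical AND is written once as an explicit rule and once learned by a single perceptron from four examples. The function names, the learning rate, and the number of epochs are assumptions made for this illustration.

```python
def symbolic_and(a, b):
    """Symbolic approach: a person writes the rule down explicitly."""
    return 1 if (a == 1 and b == 1) else 0

def train_perceptron(examples, epochs=25, learning_rate=1):
    """Connectionist approach: weights are adjusted from examples alone."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in examples:
            net = sum(w * x for w, x in zip(weights, inputs)) + bias
            error = target - (1 if net > 0 else 0)
            # Perceptron learning rule: change weights only when wrong.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

def connectionist_and(a, b, weights, bias):
    """Answer using only the learned connection strengths."""
    return 1 if weights[0] * a + weights[1] * b + bias > 0 else 0

# The rule is used here only to label the training examples.
examples = [((a, b), symbolic_and(a, b)) for a in (0, 1) for b in (0, 1)]
weights, bias = train_perceptron(examples)

for (a, b), _ in examples:
    print(a, b, symbolic_and(a, b), connectionist_and(a, b, weights, bias))
print("learned weights and bias:", weights, bias)
```

Nothing in the learned weights reads like a rule, yet the unit gives the same answers as the rule on all four inputs.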

What is the connectionism model of learning?

The connectionist model of learning, closely associated with Parallel Distributed Processing (PDP), is an approach that takes inspiration from the way information processing occurs in the brain.

It involves the propagation of activation among simple units (artificial neurons) organized in networks, linked to each other through weighted connections representing synapses or groups thereof.

Each unit transmits its activation level to other units in the network by means of its connections to those units.
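The propagation of activation can be sketched as a repeated update in which every unit sums the weighted activation it receives from its senders. The four-unit network, its weights, and the sigmoid squashing function below are assumptions chosen for illustration.

```python
import math

def sigmoid(x):
    """Squash a net input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

# connections[(sender, receiver)] = weight (hypothetical values)
connections = {("a", "c"): 0.8, ("b", "c"): -0.5, ("c", "d"): 1.0}

def propagate(activation, connections, clamped):
    """One synchronous step: each unit receives weighted activation."""
    net = {unit: 0.0 for unit in activation}
    for (sender, receiver), weight in connections.items():
        net[receiver] += weight * activation[sender]
    return {
        unit: activation[unit] if unit in clamped else sigmoid(net[unit])
        for unit in activation
    }

# Input units "a" and "b" are clamped to the stimulus; "c" and "d" start at rest.
state = {"a": 1.0, "b": 1.0, "c": 0.0, "d": 0.0}
for step in range(2):
    state = propagate(state, connections, clamped={"a", "b"})
    print(step + 1, state)
```

After the first step unit "c" reflects the balance of its excitatory and inhibitory inputs, and after the second step that activation has reached unit "d".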

Connectionist models are often used to model aspects of human perception, cognition, and behavior, the learning processes underlying such behavior, and the storage and retrieval of information from memory.

The term connectionism predates neural network models. It was introduced by Edward Thorndike, an American psychologist, in the late 19th and early 20th centuries to describe learning as the formation of connections between stimuli and responses.

Thorndike's theory posits that all behavior is the result of a connection between a stimulus and a response, and that these connections are strengthened or weakened based on the consequences of the behavior.

This principle, known as the law of effect, underlies reinforcement-based theories of learning, which remain widely used in psychology and education today.

Connectionist models have been applied successfully to educational issues such as word reading, single-digit multiplication, and prime-number detection. These applications underscore the importance of practice, feedback, prior knowledge, and well-structured lessons.

Connectionist models provide a new paradigm for understanding how information might be represented in the brain and have demonstrated an ability to learn skills such as face recognition, reading, and the detection of simple grammatical structure.

However, it's important to note that while connectionism provides a powerful framework for understanding learning and cognition, it does not capture all aspects of human learning. For instance, the social aspects of learning are not addressed by current connectionist modeling.

Despite these limitations, connectionism remains a valuable tool in cognitive science and educational psychology, offering insights into the mechanisms of learning and memory.

What is the difference between behaviorism and connectionism?

Behaviorism and connectionism are two different approaches to understanding human behavior and cognition.

Behaviorism is a school of psychology that focuses on observable behavior as the primary subject of study. It posits that all behaviors are acquired through conditioning, which occurs through interaction with the environment.

Behaviorists believe that psychology should concern itself with the behavior of organisms (human and nonhuman) and should not concern itself with mental states or events or with constructing internal information processing accounts of behavior. They demand behavioral evidence for any psychological hypothesis.

Behaviorism is often associated with the work of psychologists like B.F. Skinner, who developed the concept of operant conditioning, which is about reinforcing desired behaviors to encourage their repetition.

On the other hand, connectionism is a movement in cognitive science that aims to explain intellectual abilities using artificial neural networks.

These networks are simplified models of the brain composed of large numbers of units (the analogs of neurons), interconnected in a way that models the effects of the synapses that link one neuron to another.

Connectionism attempts to model cognitive processes such as learning, memory, and comprehension using these networks. It is often associated with the study of human cognition using mathematical models known as connectionist networks or artificial neural networks.

The key difference between the two lies in their approach to understanding cognition. Behaviorism disavows interest in mental states, emphasizing a direct association between stimulus and response. It focuses on observable behavior and the environmental factors that shape it.

In contrast, connectionists invest considerable energy in determining what their networks know at various points in learning, focusing on the internal processes that underlie cognition and learning.

What are the benefits of connectionism?

Connectionism, inspired by the human brain's neural structure, offers several benefits in artificial intelligence (AI). Its systems learn and adapt by recognizing patterns in data, which is crucial in dynamic environments.

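Unlike many rule-based systems, which can fail outright when a single rule is missing, connectionist models tend to degrade gracefully: because knowledge is distributed across many connections, a network can lose some nodes and still operate effectively, reflecting the resilience of biological networks. The parallel processing capability of connectionist models enables efficient handling of complex tasks.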
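A toy sketch of this resilience: one output unit distinguishes two patterns using evidence spread over sixteen connections, and the connections are then cut in batches. The patterns, the Hebbian-style weights, and the order in which connections are removed are all assumptions made for the example.

```python
# Two toy patterns over 16 input units (+1 = active, -1 = inactive).
pattern_a = [1] * 8 + [-1] * 8
pattern_b = [1] * 2 + [-1] * 6 + [1] * 6 + [-1] * 2

# Hebbian-style weights for one output unit: a positive response means "A",
# a negative response means "B".
weights = [a - b for a, b in zip(pattern_a, pattern_b)]

def response(pattern, weights, lesioned):
    """Net input to the output unit, ignoring lesioned connections."""
    return sum(
        w * x
        for i, (w, x) in enumerate(zip(weights, pattern))
        if i not in lesioned
    )

margins = []
for n_removed in (0, 4, 8, 12):
    lesioned = set(range(n_removed))  # cut the first n_removed connections
    margin_a = response(pattern_a, weights, lesioned)
    margin_b = response(pattern_b, weights, lesioned)
    margins.append((n_removed, margin_a, margin_b))
    print(n_removed, margin_a, margin_b)
```

In this sketch the responses shrink as connections are removed, but their signs, and therefore the classifications, stay correct even with three quarters of the connections gone.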

These models are versatile, applicable across various AI functions, and particularly adept at pattern recognition, making them valuable in computer vision and natural language processing.

Memory retrieval in connectionist systems is efficient, avoiding exhaustive searches. With built-in learning capabilities, these systems refine their performance over time by adjusting connection weights in response to data.
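Retrieval without search can be illustrated with a small Hopfield-style network, one classic connectionist memory model. The sketch below stores two patterns in the weights and recovers one of them from a corrupted cue. The patterns, the network size, and the use of synchronous updates are simplifying assumptions.

```python
def train_hopfield(patterns):
    """Store patterns in the weights with a Hebbian outer-product rule."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, cue, max_steps=10):
    """Update all units together until the state stops changing."""
    state = list(cue)
    for _ in range(max_steps):
        new_state = []
        for i, row in enumerate(weights):
            net = sum(w * s for w, s in zip(row, state))
            # A unit keeps its value when its net input is exactly zero.
            new_state.append(state[i] if net == 0 else (1 if net > 0 else -1))
        if new_state == state:
            break
        state = new_state
    return state

memory_1 = [1, 1, 1, 1, -1, -1, -1, -1]
memory_2 = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hopfield([memory_1, memory_2])

cue = list(memory_1)
cue[0] = -cue[0]  # corrupt one element of the stored pattern
retrieved = recall(weights, cue)
print("cue:      ", cue)
print("retrieved:", retrieved)
```

The network never compares the cue against a list of stored items. The stored pattern is restored by the units settling into a stable state.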

Despite these strengths, connectionism is not without limitations. Abstract reasoning tasks such as planning or problem-solving can be challenging for connectionist models, and their decision-making processes can be opaque. Nonetheless, connectionism's ability to leverage large datasets for learning has significantly propelled AI advancements.

What are the limitations of connectionism?

Connectionism has propelled artificial intelligence (AI) forward but faces several challenges. The models, particularly deep neural networks, lack interpretability, making it hard to discern how they process information or make decisions. They often fall short in abstract reasoning tasks like problem-solving or planning, areas where symbolic AI with explicit rules excels. Training these networks demands substantial computational power, posing difficulties for deployment on less powerful devices or in resource-constrained environments.

Generalization is another issue; while adept at learning from training examples, connectionist models may not perform well on completely new tasks or situations that diverge from the training data. Critics also question the biological plausibility of these models, noting they overlook complex features of real neural systems, such as diverse neuron types and the influence of neurotransmitters. Moreover, connectionist models excel in associative processing but may not suffice for higher cognitive functions like language and reasoning, which require more than mere association.

Tasks involving fast variable binding, essential for symbolic reasoning, also pose a challenge for connectionist models. Additionally, these models are prone to overfitting, where they perform well on training data but poorly on unseen data, necessitating careful design and validation. Despite these issues, connectionist approaches are invaluable for pattern recognition and learning from extensive datasets, underscoring the need for continued research to overcome these hurdles.

How can connectionism be used in AI applications?

Connectionism, an AI paradigm, draws from the brain's neural architecture, utilizing networks of simple, interconnected processing units akin to neurons. These units learn by altering the strengths of their interconnections.

Connectionist approaches enable pattern recognition and data-driven learning, foundational to many machine learning algorithms, while also providing models for understanding brain functions and advancing AI applications.

What is the future of connectionism?

Connectionism in AI is expected to develop in several directions. One anticipated development is integration with symbolic AI to form hybrid systems that draw on the complementary strengths of both paradigms. The aim of this combination is to improve both the problem-solving capabilities and the interpretability of AI systems.

Connectionist models are also set to further impact cognitive development research, informed by advances in developmental neuroscience. These models, which have already contributed to empirical research, are adapting to incorporate new findings and methodologies.

In the realm of natural language processing, connectionist models continue to make strides despite existing limitations. Their ability to process intricate linguistic data positions them as key players in advancing the field.

Moreover, the growth of non-symbolic AI systems such as neural networks and deep learning underscores the significance of connectionist approaches. These systems are loosely modeled on how the brain processes information and have become increasingly prominent because of their performance on hard problems.

While connectionist models have propelled AI forward, they are not without shortcomings. A notable issue is the lack of transparency in their decision-making processes, which poses challenges in scenarios where understanding the 'how' and 'why' behind AI decisions is essential. Nonetheless, connectionism's adaptability and power maintain its status as an indispensable tool in AI research and development.

FAQs

What are some real-world applications of connectionist models?

Connectionist models are widely used in various fields such as computer vision for image recognition, natural language processing for language translation and sentiment analysis, and recommendation systems that personalize user experiences on platforms like Netflix and Amazon.

How do you actually build and train a connectionist model?

Building and training a connectionist model involves defining the network architecture, initializing weights, and iteratively adjusting these weights using algorithms like backpropagation combined with gradient descent based on the error between the predicted and actual outputs.
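The steps above can be sketched in plain Python for a very small network. The example trains a 2-3-1 sigmoid network on XOR with backpropagation and per-example gradient descent. The task, layer sizes, learning rate, epoch count, and random seed are all assumptions chosen for illustration, and how well the network ends up fitting XOR depends on them.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Return hidden activations and the output for one input vector."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output

def mean_squared_error(data, params):
    return sum((forward(x, *params)[1] - t) ** 2 for x, t in data) / len(data)

def train(data, n_hidden=3, epochs=5000, learning_rate=0.5, seed=0):
    # 1. Define the architecture and initialize the weights.
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)]
                for _ in range(n_hidden)]
    b_hidden = [0.0] * n_hidden
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_out = 0.0
    initial_loss = mean_squared_error(data, (w_hidden, b_hidden, w_out, b_out))

    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
            # 2. Backpropagation: error signals for the per-example
            #    loss 0.5 * (output - target) ** 2.
            delta_out = (output - target) * output * (1 - output)
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(n_hidden)]
            # 3. Gradient descent: move each weight against its gradient.
            for j in range(n_hidden):
                w_out[j] -= learning_rate * delta_out * hidden[j]
                b_hidden[j] -= learning_rate * delta_hidden[j]
                for i in range(n_in):
                    w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
            b_out -= learning_rate * delta_out

    params = (w_hidden, b_hidden, w_out, b_out)
    return params, initial_loss, mean_squared_error(data, params)

xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
params, initial_loss, final_loss = train(xor_data)
print("loss before training:", initial_loss)
print("loss after training: ", final_loss)
for x, target in xor_data:
    print(x, target, round(forward(x, *params)[1], 3))
```

In practice this loop is usually delegated to a deep learning library, but the three stages (architecture and initialization, backpropagation, weight update) stay the same.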

What types of tasks are connectionist models best suited for?

Connectionist models excel at tasks involving pattern recognition and processing ambiguous or noisy data, such as speech recognition, handwriting recognition, and anomaly detection in cybersecurity.

How do connectionist models learn?

Connectionist models learn by using backpropagation to calculate the error gradient with respect to the weights, and then using gradient descent to update the weights in a direction that minimizes the error.
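For a single sigmoid unit, the gradient that backpropagation computes can be written out with the chain rule and checked against a numerical estimate. The sketch below does this and then takes one gradient descent step. The squared-error loss, the starting values, and the learning rate are assumptions made for the example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def loss(w, b, x, target):
    """Squared error of one sigmoid unit on one example."""
    return 0.5 * (sigmoid(w * x + b) - target) ** 2

def analytic_gradient(w, b, x, target):
    """Chain rule: dL/dw = (out - target) * out * (1 - out) * x."""
    out = sigmoid(w * x + b)
    delta = (out - target) * out * (1 - out)  # dL/d(net input)
    return delta * x, delta                   # dL/dw, dL/db

def numeric_gradient(w, b, x, target, eps=1e-6):
    """Central finite differences, used only as a sanity check."""
    dw = (loss(w + eps, b, x, target) - loss(w - eps, b, x, target)) / (2 * eps)
    db = (loss(w, b + eps, x, target) - loss(w, b - eps, x, target)) / (2 * eps)
    return dw, db

w, b, x, target = 0.5, -0.2, 1.5, 1.0
grad_w, grad_b = analytic_gradient(w, b, x, target)
num_w, num_b = numeric_gradient(w, b, x, target)

# One gradient descent step: move against the gradient.
learning_rate = 0.1
new_w = w - learning_rate * grad_w
new_b = b - learning_rate * grad_b

print("analytic:", grad_w, grad_b)
print("numeric: ", num_w, num_b)
print("loss before:", loss(w, b, x, target))
print("loss after: ", loss(new_w, new_b, x, target))
```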

How do you evaluate the performance of a connectionist model?

For classification tasks, the performance of a connectionist model is commonly evaluated on held-out data using metrics such as accuracy, precision, recall, and the F1 score, which describe how often predictions are correct and how errors split between false positives and false negatives.
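These metrics can be computed directly from a list of true labels and a list of predicted labels. The sketch below does so for a binary task. The function name and the example labels are made up for illustration, and returning 0.0 when a denominator is zero is a convention assumed here.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical labels: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

metrics = classification_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Here the model finds three of the four positives (recall 0.75) but also raises two false alarms (precision 0.6), which accuracy alone would not reveal.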

What are some ethical concerns surrounding connectionist AI systems?

Ethical concerns include the potential for bias in decision-making, lack of transparency in how decisions are derived, and challenges in ensuring accountability for errors or unintended consequences.

What breakthroughs are still needed in connectionist models?

Further research is needed to improve generalization capabilities, enable abstract reasoning, and develop models that can perform complex cognitive tasks similar to human thought processes.

How is connectionism similar to and different from neuroscience?

Connectionism is similar to neuroscience in that both study networks of interconnected units resembling neurons; however, connectionism simplifies these networks and does not account for the full complexity of biological brains, such as the role of neurotransmitters and the diversity of neuron types.